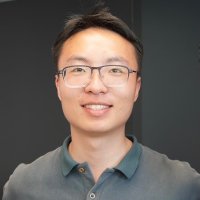

Sarvesh Patil

@servo97

Your friendly neighborhood PhD in Robotics @CMU. Soft robots for Manipulation | Causal Inference | MARL.

ID: 2891434130

http://servo97.github.io

06-11-2014 01:16:06

855 Tweets

276 Followers

550 Following

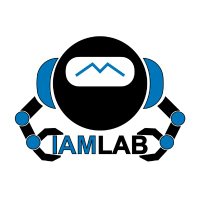

Can robots make pottery🍵? Throwing a pot is a complex manipulation task of continuously deforming clay. We will present RoPotter, a robot system that uses structural priors to learn from demonstrations and make pottery, at the IEEE-RAS Int. Conf. on Humanoid Robots (HUMANOIDS). CMU Robotics Institute 👇 robot-pottery.github.io 1/8🧵

A dream I've had for five years is finally coming true: I'll be co-teaching a course next sem. on the algorithmic foundations of imitation learning / RLHF with my advisors, Drew Bagnell and Steven Wu! Sign up if you're at CMU (17-740) or follow along at interactive-learning-algos.github.io!

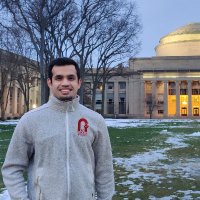

I am on the industry job market and am planning to interview around next March. I am attending NeurIPS, and I hope to meet you there if you are hiring! My website: soyeonm.github.io Short bio: I am a 5th-year PhD student at CMU MLD, working with Russ Salakhutdinov.

Thrilled to join Virginia Tech as an assistant professor in Mechanical Engineering this fall! At the TEA lab (tealab.ai), we'll explore hybrid AI systems for efficient and adaptive agents and robots 🤖 Thank you to everyone who has supported me along the way!

Spot dressed up for Halloween!🎃 It's on a mission for its favorite 'candy'! 🔋 But two 'ghosts' were blocking the path… A fun demo of our new paper on how robots can intelligently 'make way' on cluttered stairs! (1/4) CMU Robotics Institute