Sedrick Keh

@sedrickkeh2

research engineer @ToyotaResearch interested in pre-training, post-training, and multimodality

ID: 1574303953960968193

https://sedrickkeh.github.io 26-09-2022 07:45:14

156 Tweets

315 Followers

262 Following

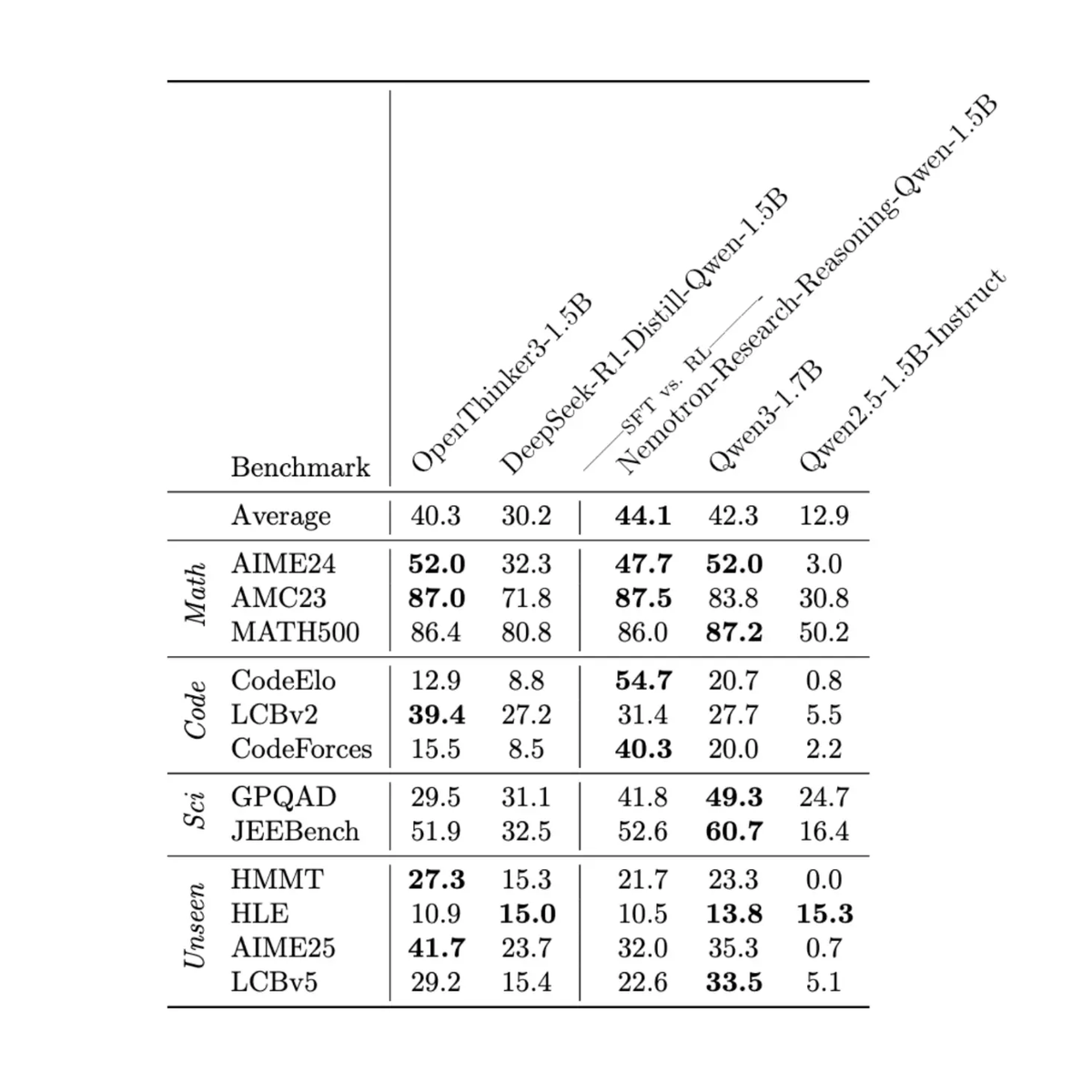

This plot is a thing of beauty. Great visualization by Jean Mercat! One of many cool artifacts that arose from conducting 1000+ experiments for OpenThoughts 😀

If you’re working on robotics and AI, the recent Stanford talk from Russ Tedrake on scaling multitask robot manipulation is a mandatory watch, full stop. No marketing, no hype. Just solid hypothesis-driven science and evidence-backed claims. A gold mine in today’s landscape!

TRI's latest Large Behavior Model (LBM) paper landed on arXiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the

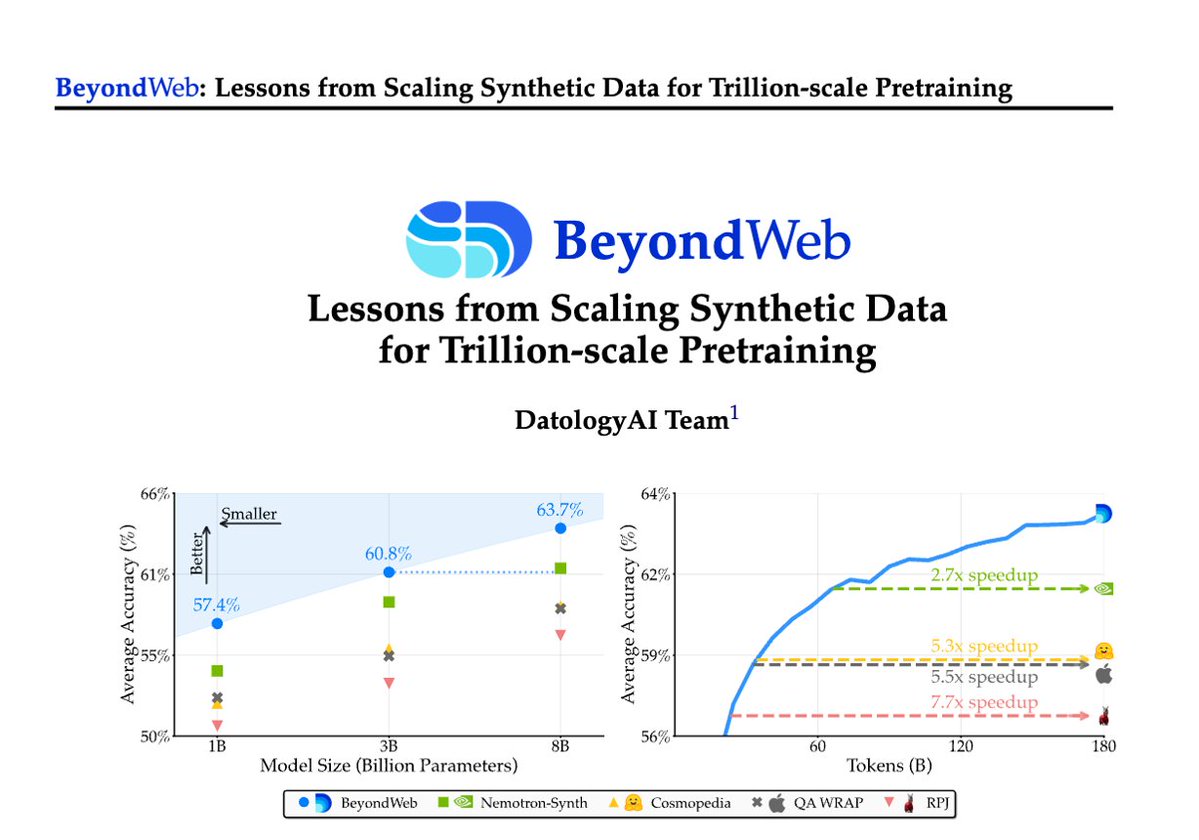

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens 🧑🏼‍🍳
- 3B LLMs beat 8B models 🚀
- Pareto frontier for performance