Srilakshmi Chavali

@schavalii

dev rel @ Arize || uc berkeley alum 🎓

ID: 1879242181896200192

14-01-2025 19:00:38

14 Tweets

13 Followers

31 Following

🔧 arize-phoenix MCP gets a phoenix-support tool for Cursor / Anthropic Claude / Windsurf! You can now click the "Add to Cursor" button in Phoenix and get a continuously updating MCP server config integrated directly into your IDE. @arizeai/[email protected] also comes

🍳Cooking up a great virtual workshop for this Thursday with our friend Tony Kipkemboi from @crewaiinc and our own Srilakshmi Chavali! Register👇 to learn how to train agents automatically and evaluate agentic workflows. bit.ly/3IrvGx5

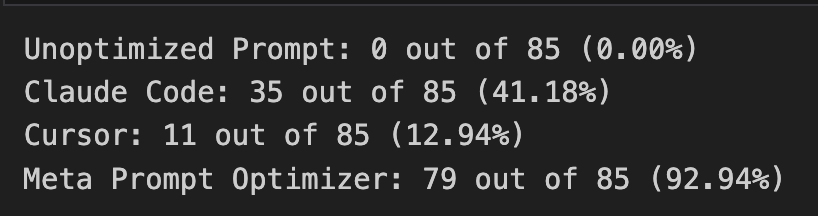

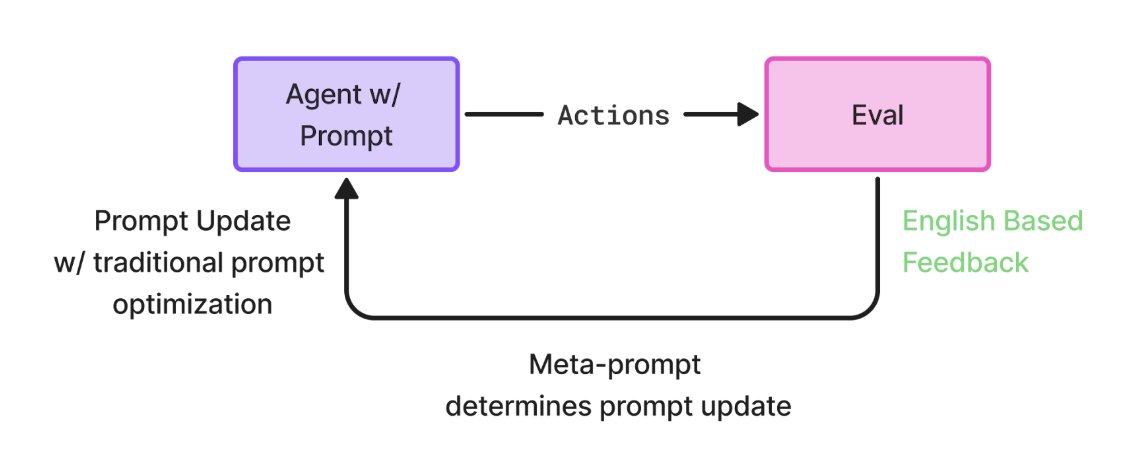

Reinforcement Learning in English – Prompt Learning Beyond Just Optimization

Andrej Karpathy tweeted something this week that I think many of us have been feeling: the resurgence of RL is great, but it's missing the big picture. We believe that the industry chasing traditional RL is

Andrej Karpathy, this really resonates deeply. We've been building almost exactly that loop: rollout → reflection via English feedback → distilled lesson → prompt update. We've been using English feedback, via explanations, annotations, and rules, as the core signal for improvement. The
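To make the four-step loop concrete, here is a minimal sketch under stated assumptions. It is not Arize's implementation: every function name (`run_rollouts`, `collect_feedback`, `distill_lessons`, `update_prompt`, `prompt_learning_loop`) is hypothetical, and the "model" and "critic" are toy stand-ins for what would normally be LLM calls and English evaluator explanations. Distillation here is just order-preserving deduplication of feedback into rules.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rollout:
    input: str
    output: str


def run_rollouts(prompt: str, inputs: list[str],
                 model: Callable[[str, str], str]) -> list[Rollout]:
    """Step 1 (rollout): run the current prompt on every input."""
    return [Rollout(x, model(prompt, x)) for x in inputs]


def collect_feedback(rollouts: list[Rollout],
                     critic: Callable[[Rollout], Optional[str]]) -> list[str]:
    """Step 2 (reflection): the critic returns an English explanation of a
    failure, or None when the output is acceptable."""
    return [fb for r in rollouts if (fb := critic(r)) is not None]


def distill_lessons(feedback: list[str]) -> list[str]:
    """Step 3 (distilled lesson): collapse repeated feedback into unique rules,
    keeping first-seen order. A real system might summarize with an LLM."""
    return list(dict.fromkeys(feedback))


def update_prompt(prompt: str, lessons: list[str]) -> str:
    """Step 4 (prompt update): append rules the prompt does not already contain."""
    new = [lesson for lesson in lessons if lesson not in prompt]
    if not new:
        return prompt
    return prompt + "\n" + "\n".join(f"- Rule: {lesson}" for lesson in new)


def prompt_learning_loop(prompt: str, inputs: list[str], model, critic,
                         iterations: int = 3) -> str:
    """Repeat rollout -> reflection -> lesson -> update until no feedback remains."""
    for _ in range(iterations):
        rollouts = run_rollouts(prompt, inputs, model)
        feedback = collect_feedback(rollouts, critic)
        if not feedback:
            break  # every rollout passed; stop early
        prompt = update_prompt(prompt, distill_lessons(feedback))
    return prompt


# Toy stand-ins (hypothetical): the "model" only uppercases when the prompt
# contains the learned rule; the "critic" complains about lowercase output.
def toy_model(prompt: str, x: str) -> str:
    return x.upper() if "Rule: answer in uppercase" in prompt else x


def toy_critic(r: Rollout) -> Optional[str]:
    return None if r.output == r.output.upper() else "answer in uppercase"


if __name__ == "__main__":
    final = prompt_learning_loop("You are a helpful assistant.",
                                 ["hello", "world"], toy_model, toy_critic)
    print(final)
```

In this toy run, the first iteration produces lowercase outputs, the critic's English feedback is distilled into a single rule, and the second iteration passes, so the loop stops with the rule appended to the prompt. The design point is that the learning signal is the English explanation itself rather than a scalar reward.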