Satyapriya Krishna

@satyascribbles

Explorer. @ai4life_harvard @hseas @googleAI @MetaAI @SCSatCMU @AmazonScience @ml_collective @D3Harvard @HarvardAISafety

ID: 1267551086010867713

https://satyapriyakrishna.com/ 01-06-2020 20:18:32

460 Tweets

471 Followers

245 Following

Sharing our work at NeurIPS Conference on reasoning with EBMs! We learn an EBM over simple subproblems and combine EBMs at test time to solve complex reasoning problems (3-SAT, graph coloring, crosswords). Generalizes well to complex 3-SAT / graph coloring / N-queens problems.

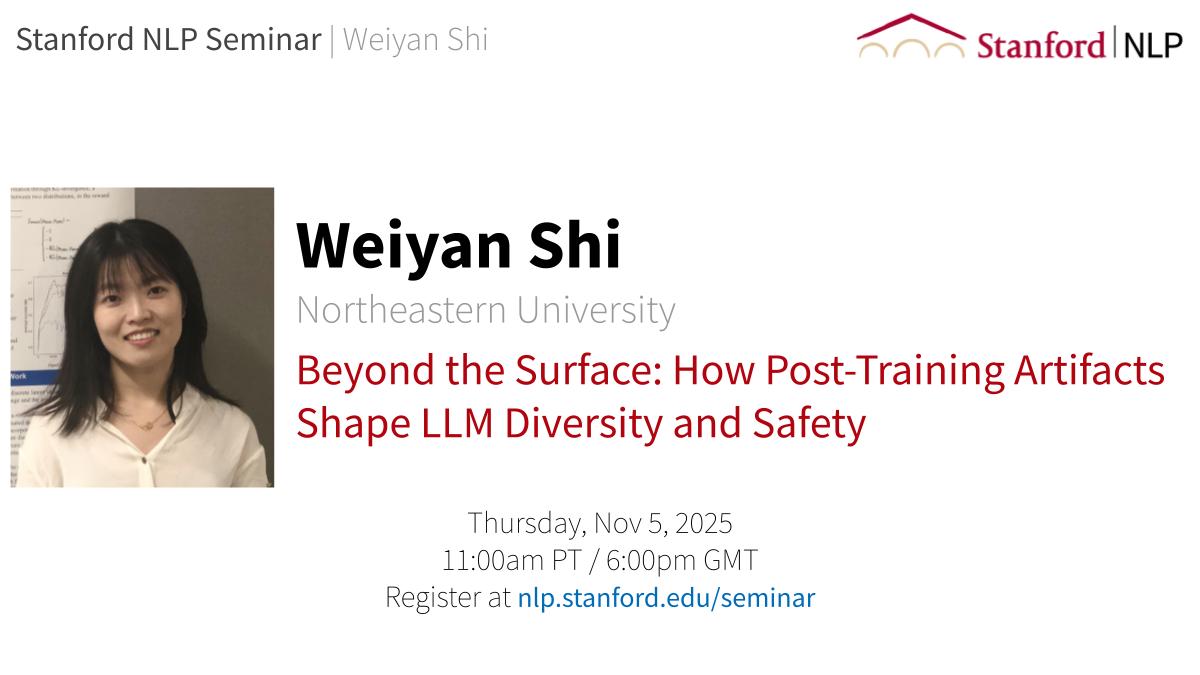

Tomorrow, we are excited to welcome Weiyan Shi to the Stanford NLP Seminar! Date and Time: Thursday, November 6, 11:00 AM - 12:00 PM Pacific Time. Zoom Link: stanford.zoom.us/j/93941842999?… Title: Beyond the Surface: How Post-Training Artifacts Shape LLM Diversity and Safety

✨ New course materials: Interpretability of LLMs✨ This semester I'm teaching an active-learning grad course at Tel Aviv University on LLM interpretability, co-developed with my student Daniela Gottesman. We're releasing the materials as we go, so they can serve as a resource for anyone