Richard Kuzma

@rskuzma

GenAI @googlecloud, ex-LLMs @CerebrasSystems, ML @USSOCOM, Tech for Public Good @DIU_x and Harvard @Kennedy_School

ID: 887464277615013888

19-07-2017 00:09:06

233 Tweets

438 Followers

1.1K Following

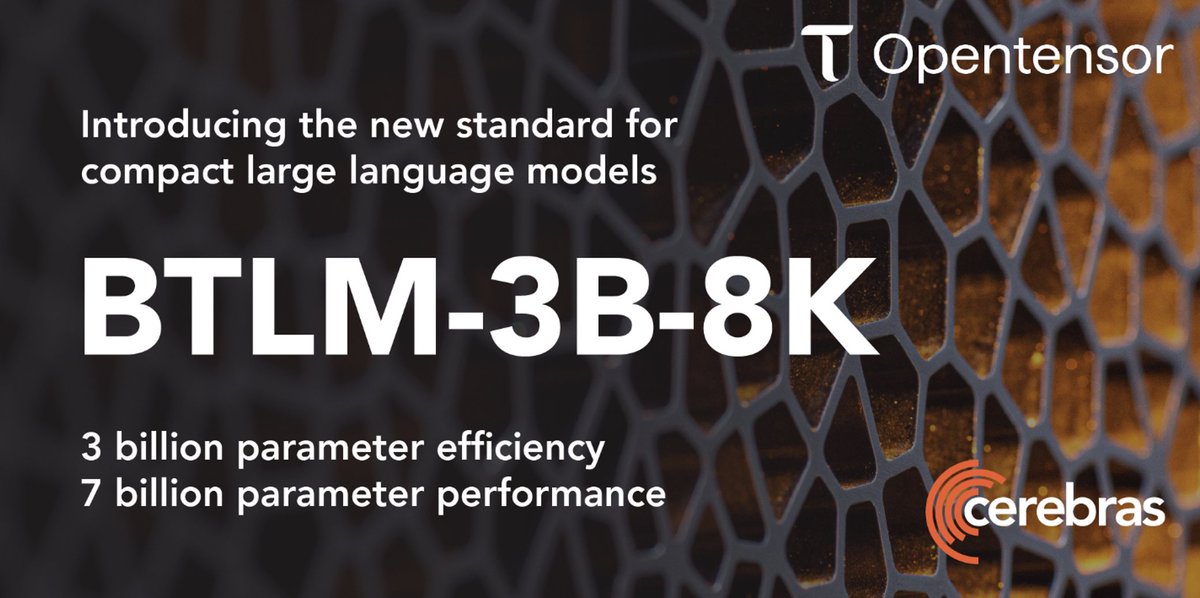

Announcing BTLM-3B-8k-base!
- 7B performance in a 3B model ✅
- 8k context length ✅
- quantize to fit in 3GB of memory ✅
- trained on high quality data ✅
- apache 2.0 license ✅

huggingface.co/cerebras/btlm-…

Great work by my colleagues Daria Soboleva, Nolan Dey, Faisal Al-khateeb, and others 👏

I read Leopold Aschenbrenner's essay on the future of AI research and geopolitical competition. It's well-researched, well-presented, and passionate. However, Leopold advocates for an unreasonably strict and exclusionary future for AI development—a view that's gaining traction. (1/9)