Rui Lu

@raylu_thu

PhD student at @Tsinghua_Uni studying machine learning theory, graduate of the Yao Class. Also a YouTuber @ 漫士沉思录 manshi_math

ID: 1578007763015311369

06-10-2022 13:02:44

22 Tweets

119 Followers

116 Following

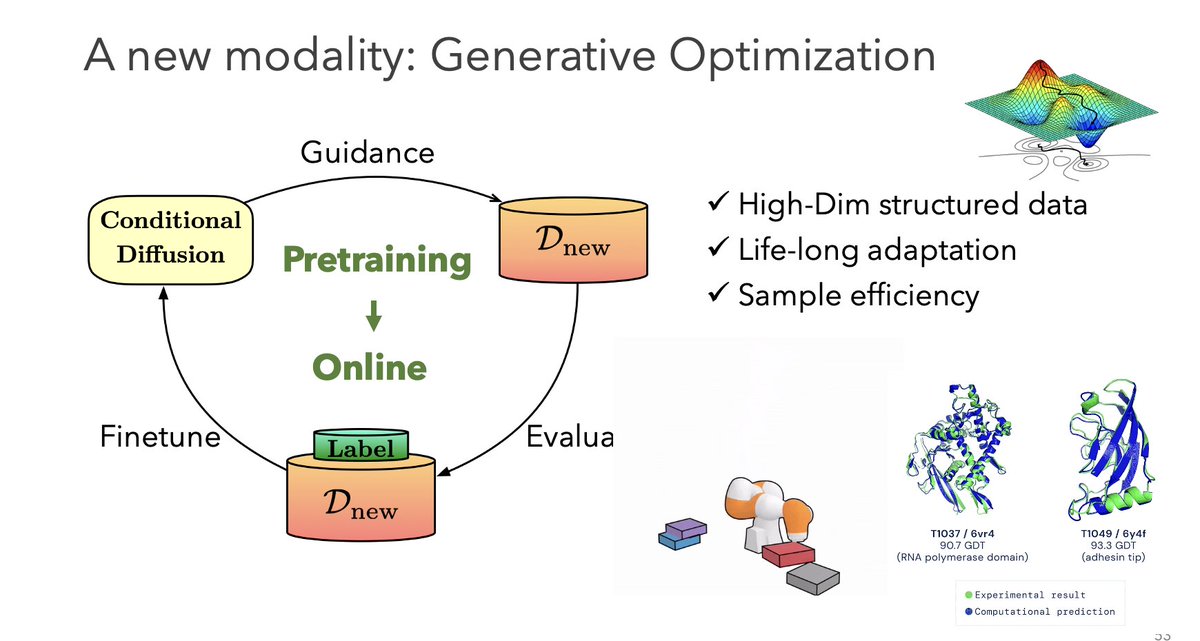

How to capitalize on #GenerativeAI and #diffusion models for modeling complex data and structured optimization? From images to proteins? Check out my talk "Diffusion models for Generative Optimization" at the Broad Institute, Harvard, MIT last week. YouTube: youtube.com/watch?v=hDRDx5…