Rareș Ambruș

@raresambrus

Computer Vision Research Lead @ToyotaResearch. Previously robotics PhD @ KTH. Working on robotics, computer vision and machine learning.

ID: 2821606207

http://www.csc.kth.se/~raambrus/ 20-09-2014 10:04:59

60 Tweets

384 Followers

548 Following

Is monocular 3D perception needed? Is the field making meaningful progress? What do you think? Tell us below and join our Mono3D #CVPR2021 workshop on Friday 25, 8am-12pm PT: sites.google.com/view/mono3d-wo…! We have great speakers, 2 panels, and we'll announce the DDAD challenge winners!

Proud to announce that our paper “Single-Shot Scene Reconstruction” is accepted to #CoRL2021! We use transformers and implicit representations to infer a fully editable 3D scene from a single image. Collaboration between Toyota Research Institute (TRI), Stanford University and Massachusetts Institute of Technology (MIT).

TRI-ML Toyota Research Institute (TRI) is going to #CVPR! Join us at our poster sessions or pass by booth #715 to chat and find out more about our work! #CVPR2022

Very happy to share our #ECCV2022 oral “Particle Video Revisited: Tracking Through Occlusions Using Point Trajectories” Fine-grained tracking of anything, outperforming optical flow. project: particle-video-revisited.github.io abs: arxiv.org/abs/2204.04153 code: github.com/aharley/pips

SUPER excited to present ShAPO🎩, our #ECCV2022 work on real2sim asset creation and category-level 3D object understanding! Done during my internship at Toyota Research Institute (TRI)! Webpage: zubair-irshad.github.io/projects/ShAPO… Robotics@GT Georgia Tech School of Interactive Computing Machine Learning at Georgia Tech European Conference on Computer Vision #ECCV2026 ⬇️(1/9)

Wanna switch from academia to industry but unsure how? Here's a guide! rowanmcallister.github.io/post/industry/ Thanks to Greg Kahn, Kate Rakelly, Boris Ivanovic, Rebekah Baratho, Ashwin Balakrishna, Jessica Yin, Nick Rhinehart, Jessica Cataneo, Rareș Ambruș

Our #ECCV2022 workshop, "Frontiers of Monocular 3D Perception" is about to start! If you are interested in recent developments in monocular perception be sure to join us at sites.google.com/view/mono3d-ec… Adrien Gaidon Vitor Guizilini Greg Shakhnarovich Matt Walter Igor Vasiljevic

Interested in object-centric scene reconstruction from an RGB-D image? Come talk to us today at 11 at poster 34 about ShAPO - our work on category-level 3D object understanding #ECCV2022 Toyota Research Institute (TRI) Zubair Irshad Sergey Zakharov Adrien Gaidon Zsolt Kira Thomas Kollar

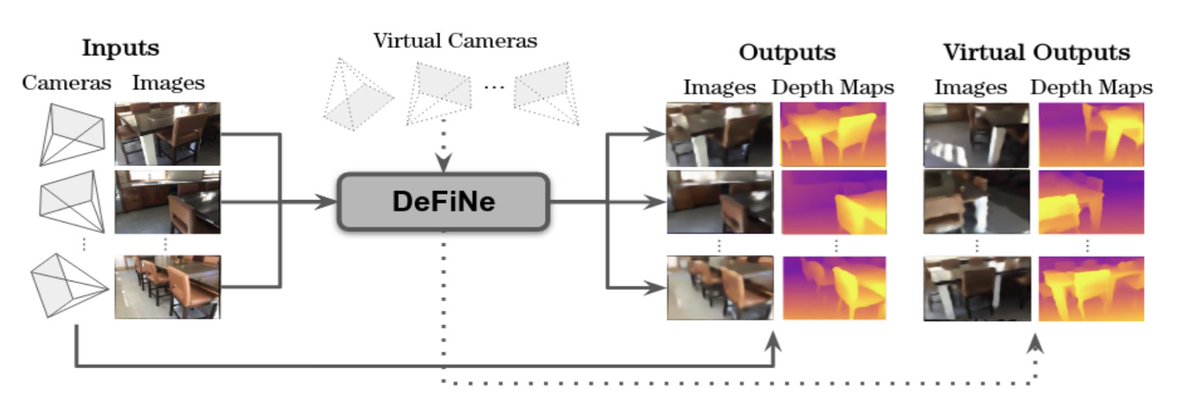

Want to find out the latest from our team on monodepth? Come talk to us today at poster 98 at #ECCV2022 about Depth Field Networks - a generalizable neural field architecture for depth estimation Vitor Guizilini Igor Vasiljevic Fang Jiading Matt Walter Greg Shakhnarovich Adrien Gaidon

Come by poster 50 this afternoon European Conference on Computer Vision #ECCV2026 to learn about SpOT: Spatiotemporal Modeling for 3D Object Tracking! Colton will also be presenting it in the afternoon oral session 3.B.1. Paper: arxiv.org/abs/2207.05856

ML is continuing to grow at TRI! Foundation models are a game changer for the web, and we are excited to push further for embodied systems that amplify us in the real world. It is challenging, meaningful, and very exciting. Come join us! jobs.lever.co/tri/51ffd422-0… Toyota Research Institute (TRI)

I'm super excited about NeO-360, our #ICCV23 work on outdoor view synthesis from sparse views + we release a challenging dataset for this task! Congrats Zubair Irshad Zsolt Kira Sergey Zakharov Katherine Liu Vitor Guizilini Thomas Kollar Adrien Gaidon and colleagues at Parallel Domain

🌟 We have released the NeO-360 code: github.com/zubair-irshad/…. NeO-360 achieves generalizable outdoor 360-degree novel view synthesis. We hope the code and NERDS360 dataset advance digital twins of unbounded spaces! Toyota Research Institute (TRI) Georgia Tech School of Interactive Computing Robotics@GT Machine Learning at Georgia Tech Neural Fields

I'm super excited about our work with Woven by Toyota on object-pose estimation: using diffusion we estimate multiple poses from a single observation and can handle ambiguity in the input. We achieve strong generalization on real data despite training only on sim Toyota Research Institute (TRI)