Radek Bartyzal

@radekbartyzal

Recommendation Team Lead at GLAMI. Building production ML systems for millions of users.

ID: 1908291367

https://github.com/BartyzalRadek

26-09-2013 15:26:52

348 Tweets

179 Followers

170 Following

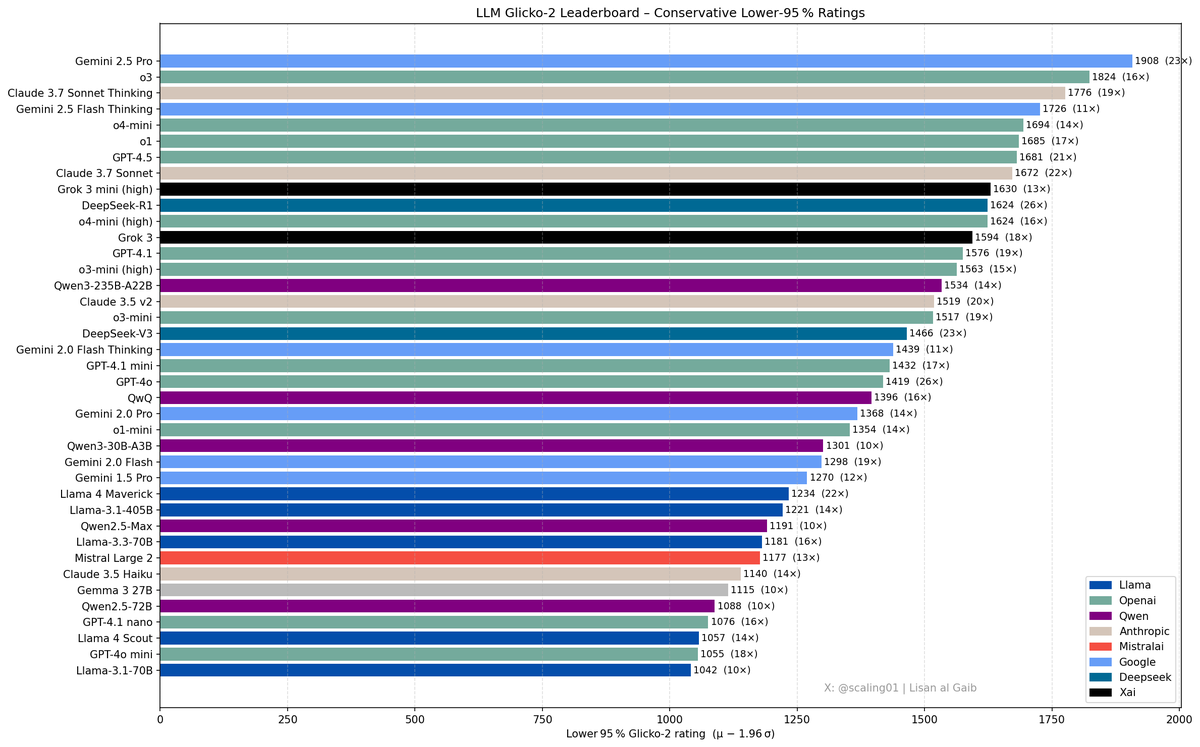

It is critical for scientific integrity that we trust our measure of progress. The lmarena.ai has become the go-to evaluation for AI progress. Our release today demonstrates the difficulty in maintaining fair evaluations on lmarena.ai, despite best intentions.

[1/5] Muon has recently emerged as a promising second-order optimizer for LLMs. Prior work (e.g. Moonshot) showed that Muon scales. Our in-depth study addresses the practicality of Muon vs AdamW and demonstrates that Muon expands the Pareto frontier over AdamW on the

![Essential AI (@essential_ai) on Twitter photo](https://pbs.twimg.com/media/GqR7u-JaUAEiXP8.jpg)