Prasann Singhal

@prasann_singhal

4th-year undergrad #NLProc Researcher at UT Austin, advised by @gregd_nlp

ID: 1349785093510934528

https://prasanns.github.io/ 14-01-2021 18:27:08

82 Tweets

274 Followers

722 Following

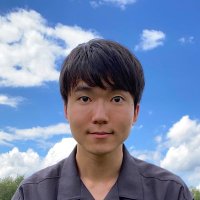

I won't be at #EMNLP2024, but my students & collaborators are presenting: 🔍 Detecting factual errors from LLMs Liyan Tang 🛠️ Detect, critique, & refine pipeline Manya Wadhwa Lucy Zhao 🏭 Synthetic data generation Abhishek Divekar 📄 Fact-checking @anxruddy at FEVER. Links🧵

🔔 I'm recruiting multiple fully funded MSc/PhD students at the University of Alberta for Fall 2025! Join my lab working on NLP, especially reasoning and interpretability (see my website for more details about my research). Apply by December 15!

🌟Job ad🌟 We (Greg Durrett, Matt Lease and I) are hiring a postdoc fellow within the CosmicAI Institute, to do galactic work with LLMs and generative AI! If you would like to push the frontiers of foundation models to help solve myths of the universe, please apply!

Are LMs sensitive to suspicious coincidences? Our paper finds that, when given access to knowledge of the hypothesis space, LMs can show sensitivity to such coincidences, displaying parallels with human inductive reasoning. w/Kanishka Misra 🌊, Kyle Mahowald, Eunsol Choi

New work led by Liyan Tang with a strong new model for chart understanding! Check out the blog post, model, and playground! Very fun to play around with Bespoke-MiniChart-7B and see what a 7B VLM can do!

Happy to announce Bespoke-MiniChart-7B! This was a tough cookie to crack, and involved a lot of data curation and modeling work, but overall very happy with the results! Congrats to the team and especially to Liyan Tang for running so many experiments that helped us understand

🚀 Introducing CRUST-Bench, a dataset for C-to-Rust transpilation for full codebases 🛠️

A dataset of 100 real-world C repositories across various domains, each paired with:

🦀 Handwritten safe Rust interfaces.

🧪 Rust test cases to validate correctness.

🧵[1/6]

![Anirudh Khatry (@anirudhkhatry) on Twitter photo](https://pbs.twimg.com/media/GpO9WCJXMAAstQm.jpg)