Noetic

@noetic_labs

The experiential learning company

ID: 1925778757492625408

https://www.noeticlabs.co 23-05-2025 05:00:28

17 Tweets

99 Followers

0 Following

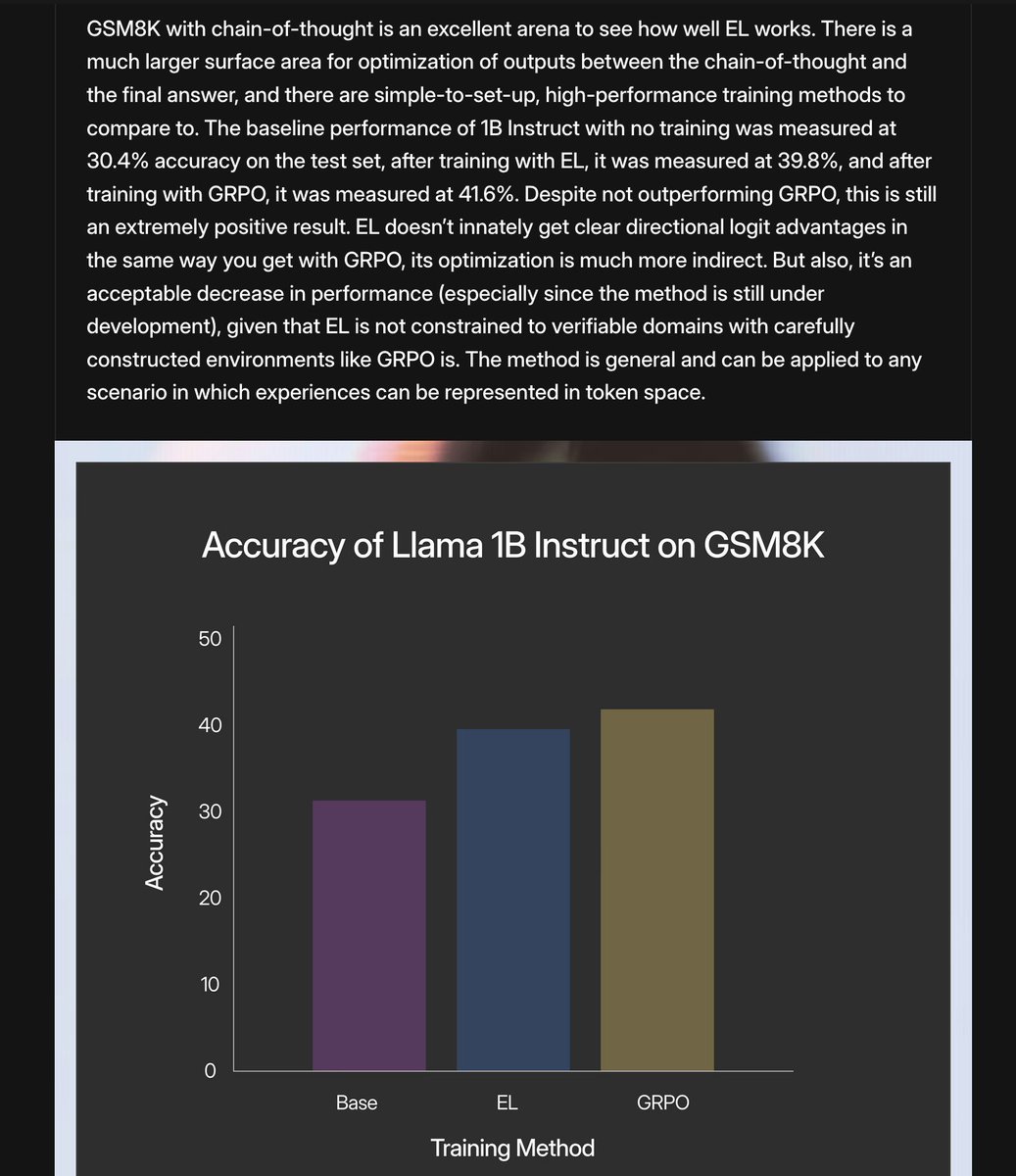

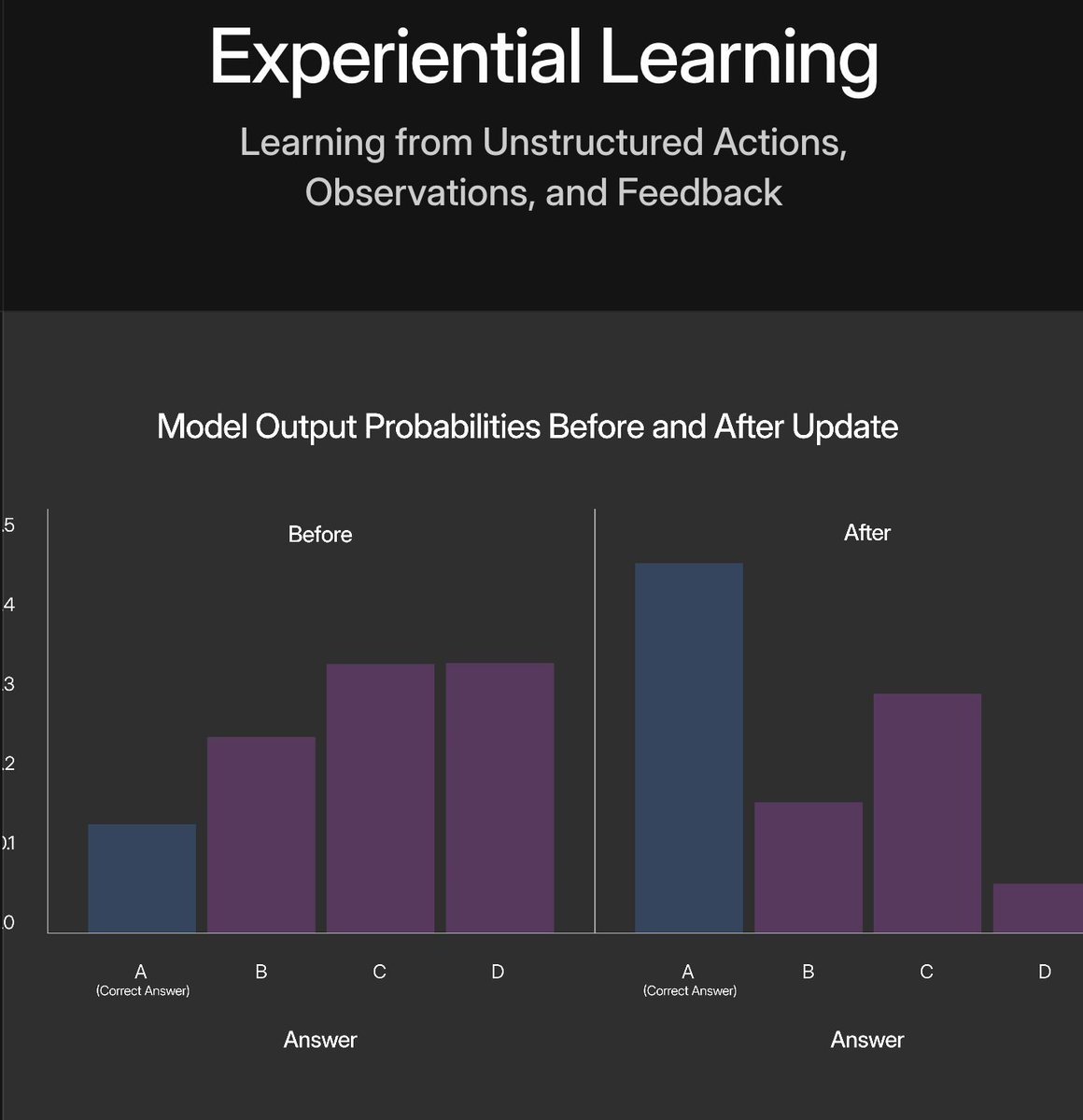

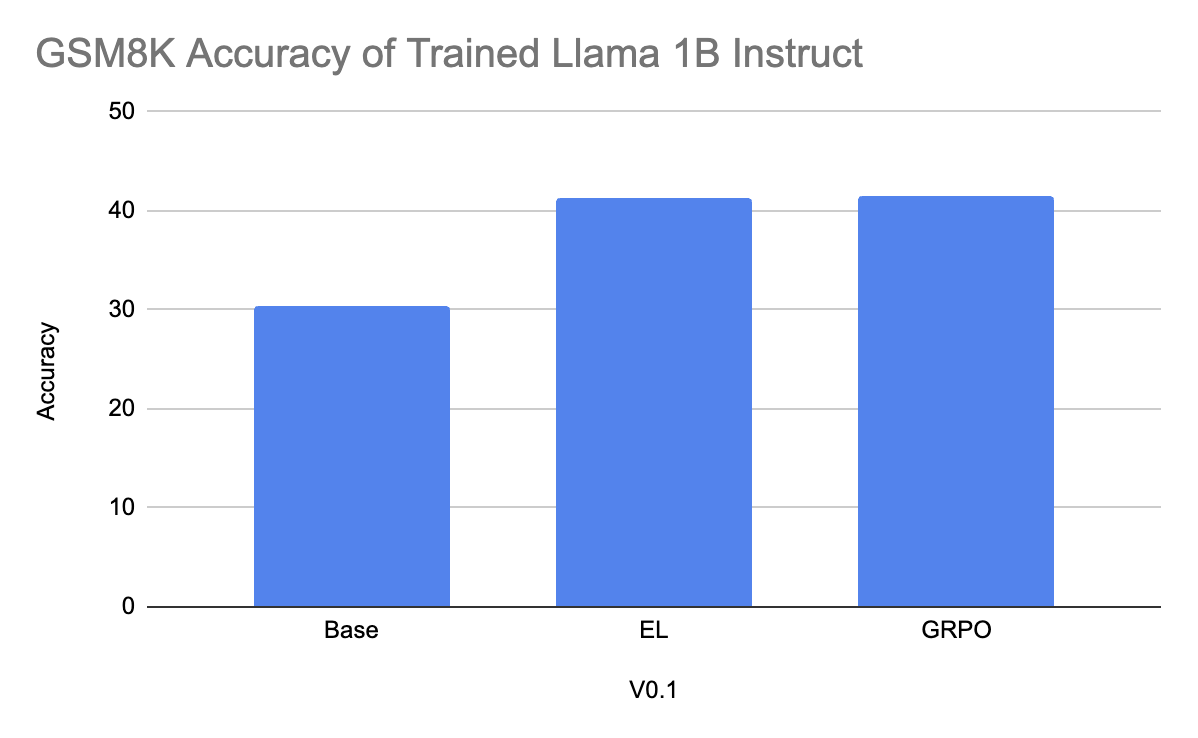

Noetic is developing an «experiential learning framework» with the goal of moving beyond RLVR and VR-CLI and their dependence on gold-standard answers, making proper use of rich feedback in the general case, and ushering in Richard Sutton's Era of Experience.