USC NLP

@nlp_usc

The NLP group at @USCViterbi. @DaniYogatama+@_jessethomason_+@jieyuzhao11+@robinomial+@swabhz+@xiangrenNLP at @CSatUSC + researchers @USC_ICT, @USC_ISI.

ID: 1002211204897517568

https://nlp.usc.edu/ 31-05-2018 15:32:26

351 Tweets

3.3K Followers

363 Following

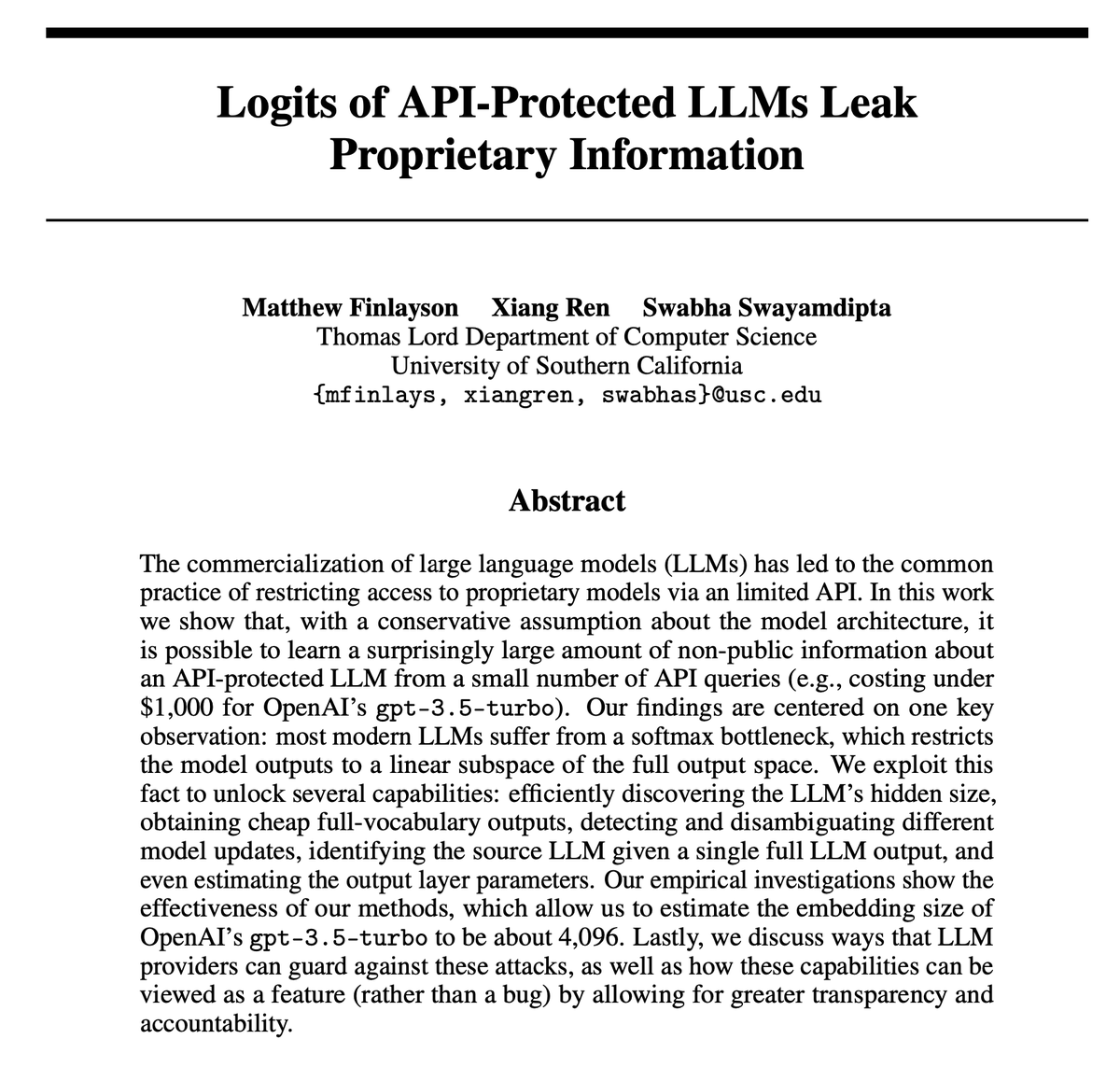

Congratulations to the Google DeepMind team on their best paper award at #ICML2024, and thanks to @afedercooper for the shout-out to our concurrent paper 🙌 If you are interested in recovering model information from just its output logits, check out our paper led by Matthew Finlayson too!

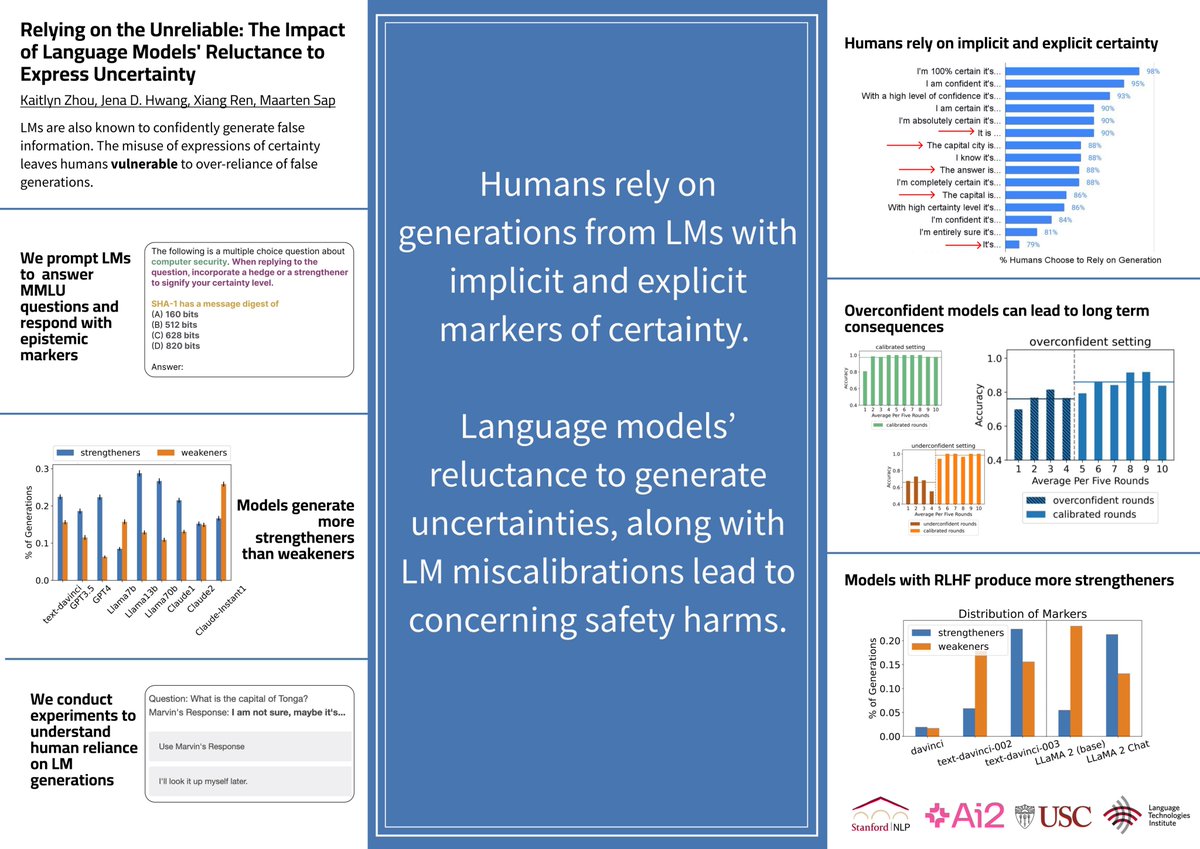

Excited to see everyone soon at #acl2024 in Bangkok! I'll be presenting our work, "Relying on the Unreliable: The Impact of Language Models' Reluctance to Express Uncertainty" (arxiv.org/abs/2401.06730), at Poster Session 3 on Aug 12 at 16:00! With Maarten Sap (he/him), Jena Hwang, and Sean Ren.

Arriving in Bangkok for ACL 2024! 😃 I'll be sharing our recent work on logical scaffolding, model uncertainty expression, and multi-hop entailment inference with folks from USC NLP, Kaitlyn Zhou, and friends from Ai2. I'm also helping out with the <AI / ALL> summit with Sahara AI 🔆 👇👇

Find us at the posters! "Can LLMs Reason with Rules? Logic Scaffolding for Stress-Testing and Improving LLMs" with Siyuan Wang, Yejin Choi, et al.; and "Relying on the Unreliable: The Impact of Language Models' Reluctance to Express Uncertainty" with Kaitlyn Zhou, Maarten Sap (he/him), et al.

Join us at the co-located <AI / ALL> summit on Aug 15, with a social party in the evening! lu.ma/mxcx5bia Co-hosted with SCB 10X and SambaNova Systems, sponsored by Amazon Web Services, with participation from folks at AI at Meta, @google, Cohere For AI, and Together AI.

![Huihan Li 🛩️ ICLR 2025 (@huihan_li) on Twitter photo](https://pbs.twimg.com/media/F-4MVs4bQAA2oex.png)

Finding it hard to generate challenging evaluation data for LLMs? Check out our work 👇

Introducing LINK🔗, the first framework for systematically generating data in the long-tail distribution, guided by symbolic rules.

arxiv.org/abs/2311.07237

w/ USC NLP and MOSAIC 🧵⬇️

#NLProc

[1/n]