Nitish Joshi

@nitishjoshi23

PhD student at NYU | CS undergrad @IITBombay '20 | Research in Natural Language Processing (#NLProc)

ID: 1005935875556036608

https://joshinh.github.io/

Joined: 10-06-2018 22:12:56

181 Tweets

940 Followers

792 Following

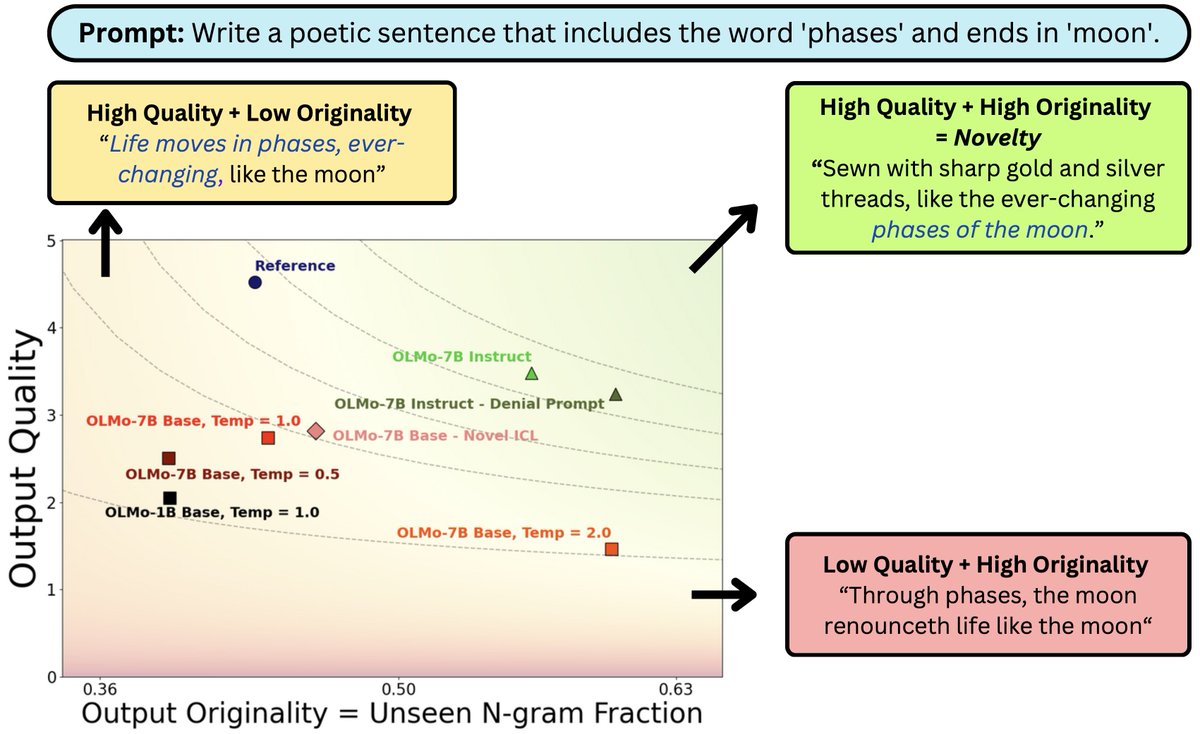

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, He He we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

Honored to receive the outstanding position paper award at ICML :) Come attend my talk and poster tomorrow on human-centered considerations for a safer and better future of work. I will be recruiting PhD students at the Stony Brook University Dept. of Computer Science this coming fall. Please get in touch.

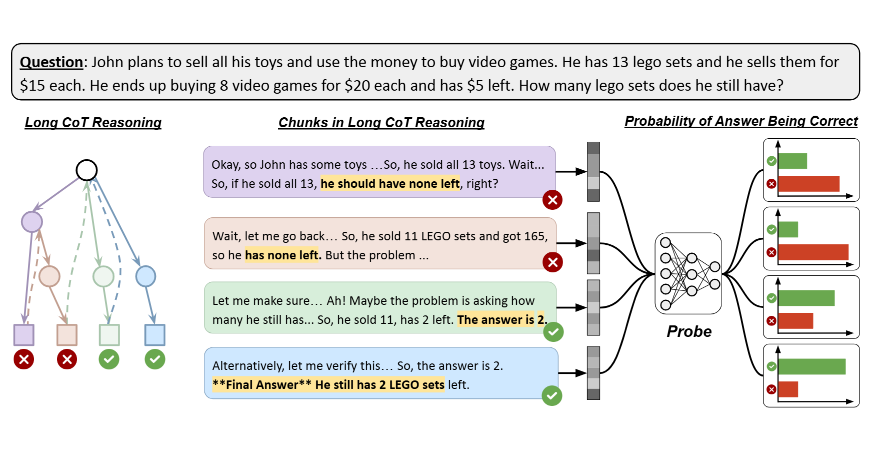

Reward hacking means the model exerts less effort than expected: it finds the answer long before its fake CoT is finished. TRACE uses this idea to detect hacking when CoT monitoring fails. Work led by Xinpeng Wang, Nitish Joshi, and Rico Angell 👇

New research with Aditi Raghunathan, Nicholas Carlini and Anthropic! We built ImpossibleBench to measure reward hacking in LLM coding agents 🤖, by making benchmark tasks impossible and seeing whether models game tests or follow specs. (1/9)

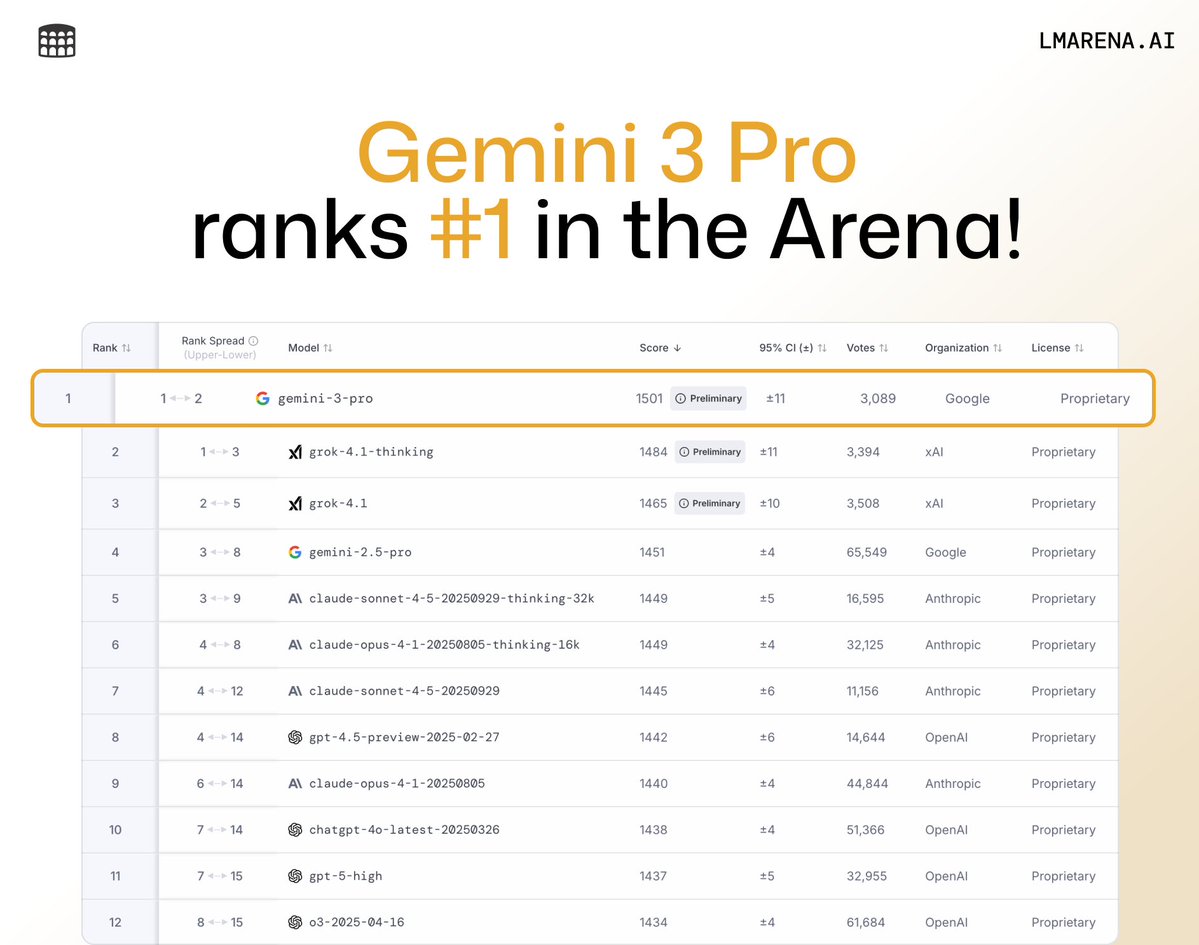

🚨BREAKING: Google DeepMind’s Gemini-3-Pro is now #1 across all major Arena leaderboards
🥇#1 in Text, Vision, and WebDev, surpassing Grok-4.1, Claude-4.5, and GPT-5
🥇#1 in Coding, Math, Creative Writing, Long Queries, and nearly all occupational leaderboards
Massive gains