Hugo

@mldhug

PhD student in multimodal learning for audio understanding at @telecomparis

ID: 1162859120988438528

17-08-2019 22:50:03

19 Tweets

45 Followers

403 Following

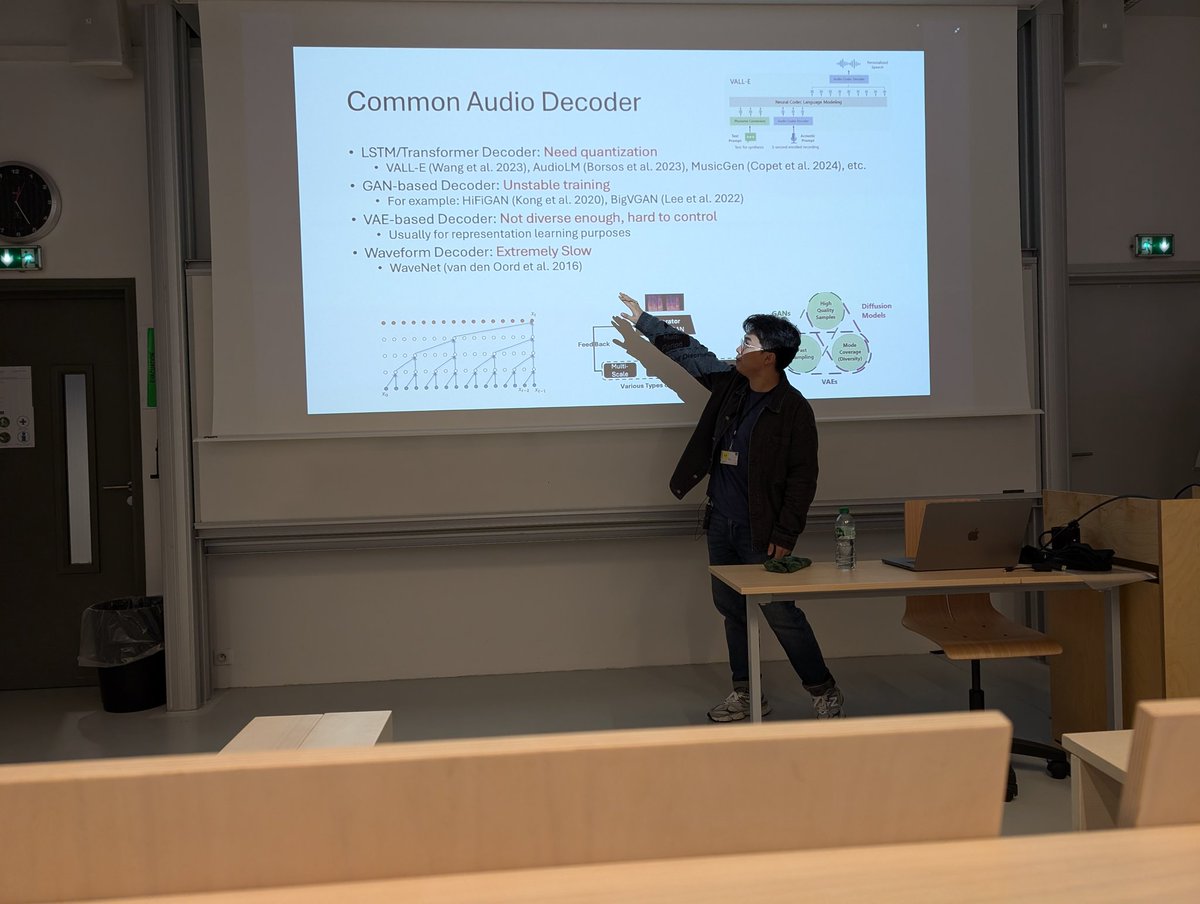

Thanks for tweeting, @AK! We’re super excited about the future of text-only vision model selection! 🙏 mars huang Jackson (Kuan-Chieh) Wang @cvpr @syeung10

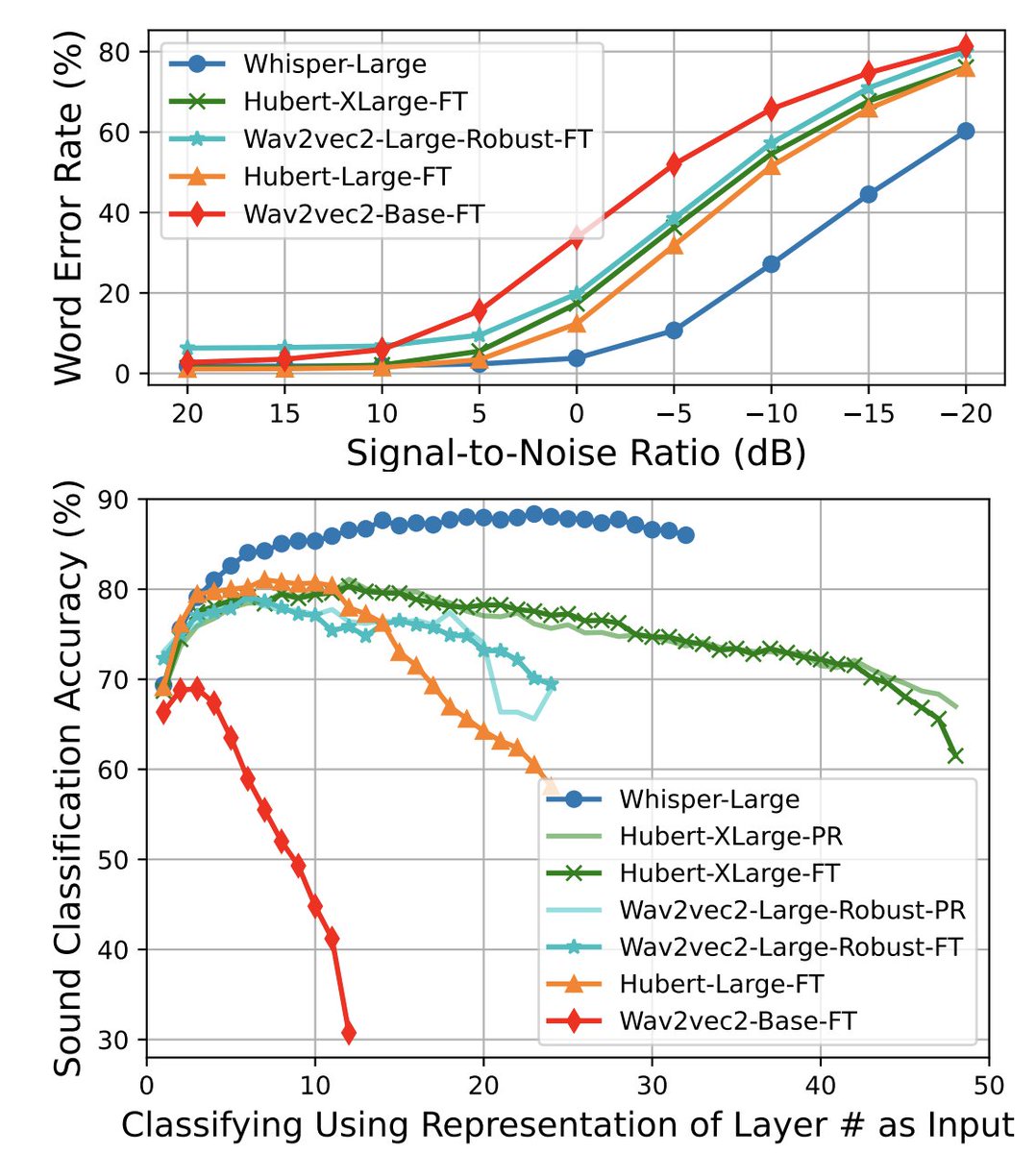

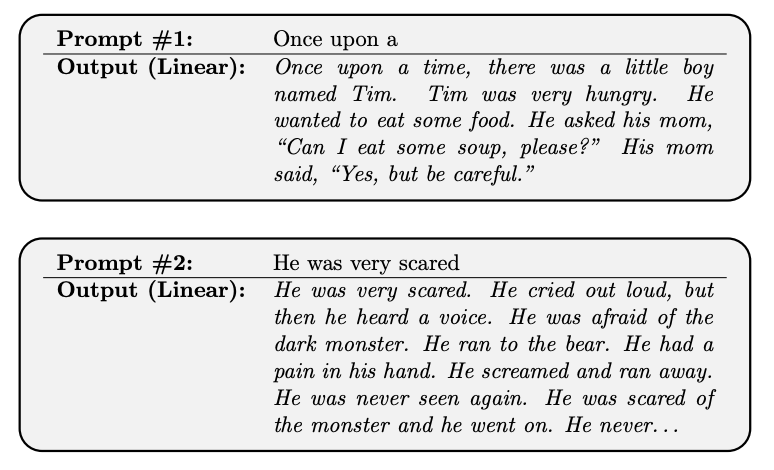

Who killed non-contrastive image-text pretraining? Alec Radford and Jong Wook Kim 💟 with the Fig. 2 below in CLIP. Who collected the 7 Dragon Balls and asked Shenron to resurrect it? Yours truly, in this new paper of ours. Generative captioning is not only competitive, it seems better!

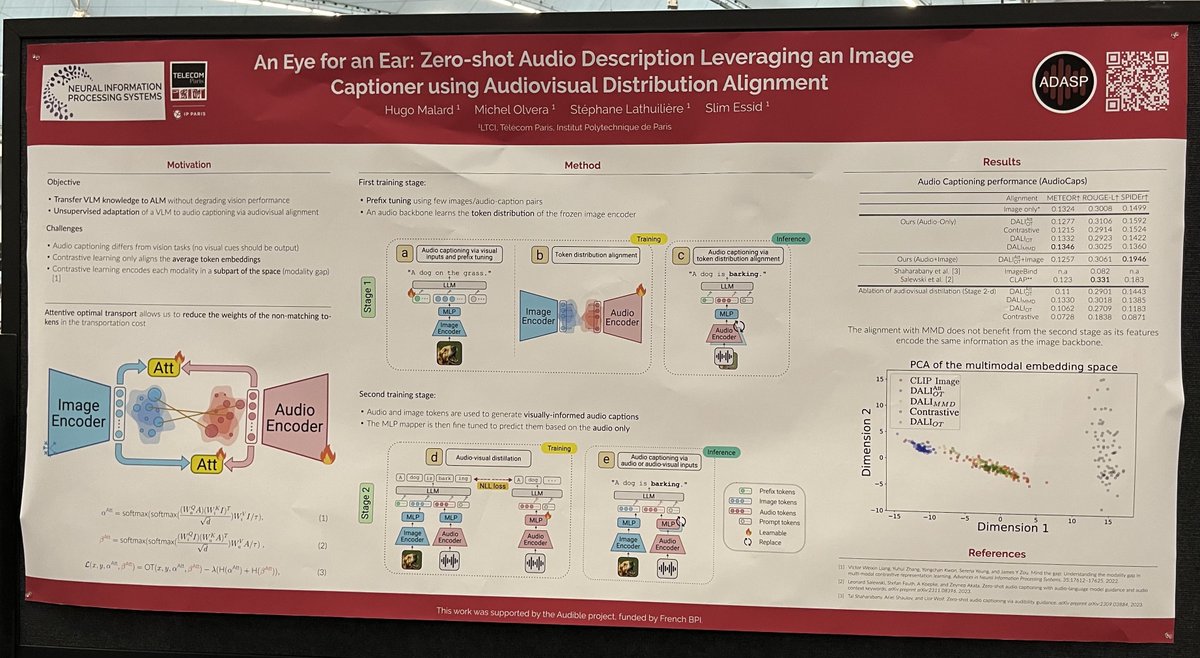

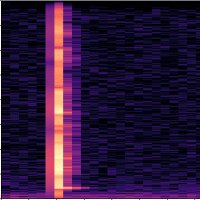

If you want to learn more about audio-visual alignment and how to use it to give audio abilities to your VLM, stop by our NeurIPS Conference poster #3602 (East exhibit hall A-C) tomorrow at 11am!