Mitchell Wortsman

@mitchnw

@AnthropicAI | prev @uwcse

ID: 387807409

http://mitchellnw.github.io

09-10-2011 18:11:40

425 Tweets

1.1K Followers

989 Following

Thrilled to share our paper “Large-Scale Transfer Learning for Tabular Data via Language Modeling,” introducing TabuLa-8B: a foundation model for prediction on tabular data. (with Juan C Perdomo + Ludwig Schmidt) 📖 arxiv.org/abs/2406.12031 🌐 huggingface.co/collections/ml… [long🧵]

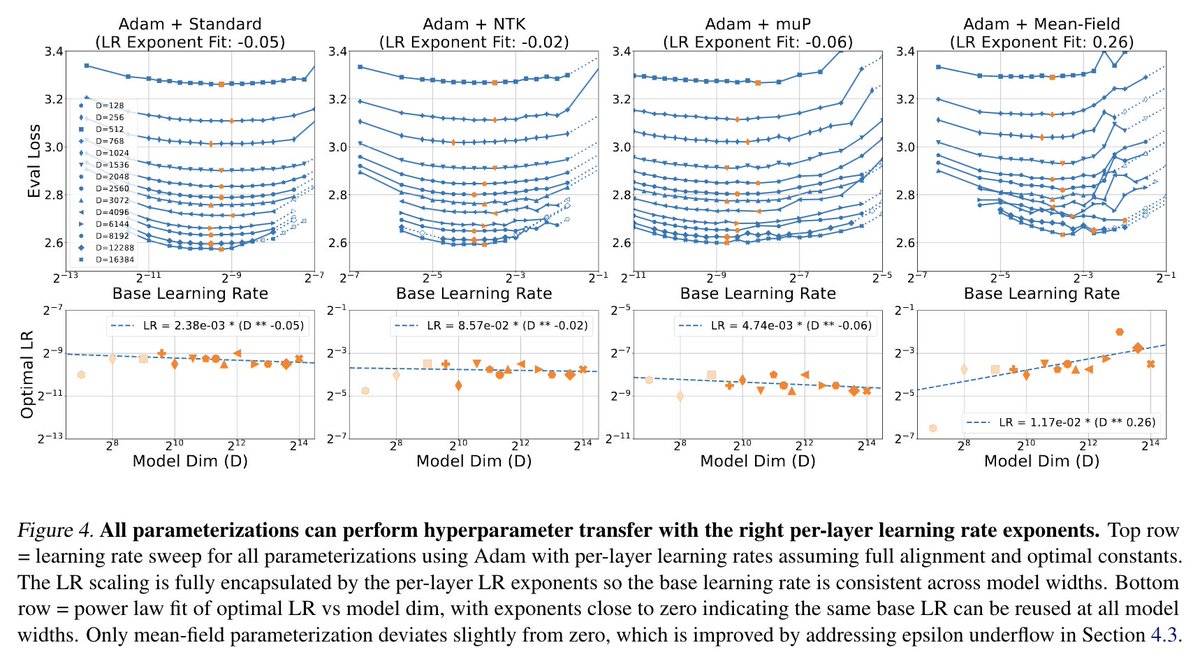

Come chat with me and Lechao Xiao at our ICML poster session 1:30-3pm CEST (Vienna time) today at Hall C 4-9 #2500 and see how our theory lets all parameterizations perform hyperparameter transfer! arxiv.org/abs/2407.05872

🚨 I’m on the job market this year! 🚨 I’m completing my Allen School Ph.D. (2025), where I identify and tackle key LLM limitations like hallucinations by developing new models—Retrieval-Augmented LMs—to build more reliable real-world AI systems. Learn more in the thread! 🧵

Excited to share that I'll be joining Paul Jankura to work on pretraining science! I've chosen to defer my Stanford PhD, where I'm honored to be supported by the Hertz Fellowship. There's something special about the science, this place, and these people. Looking forward to joining

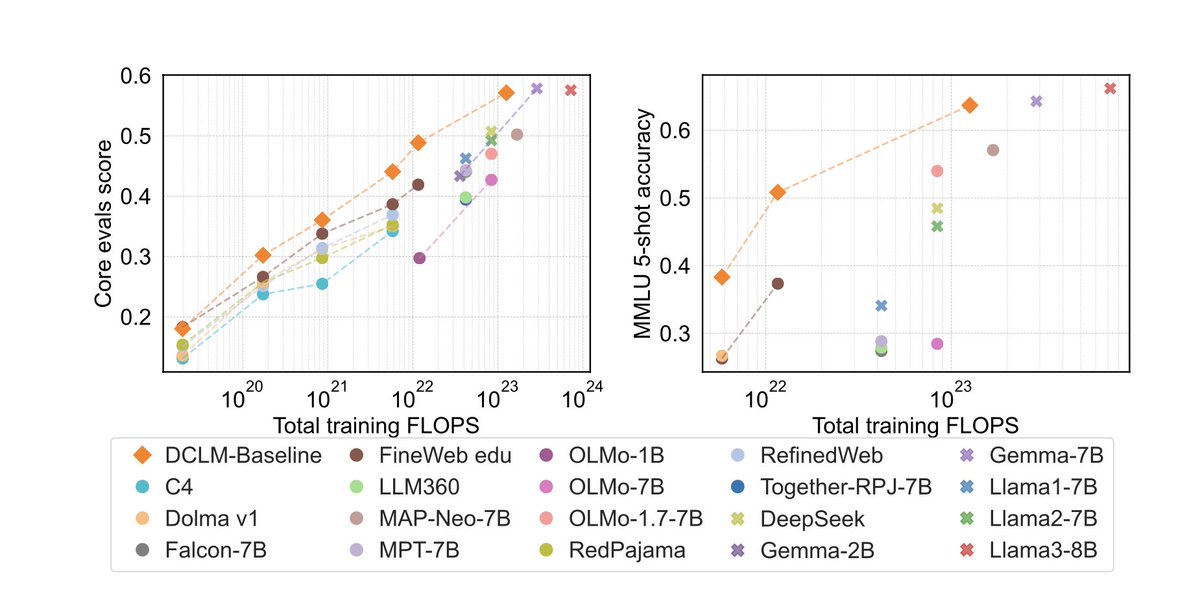

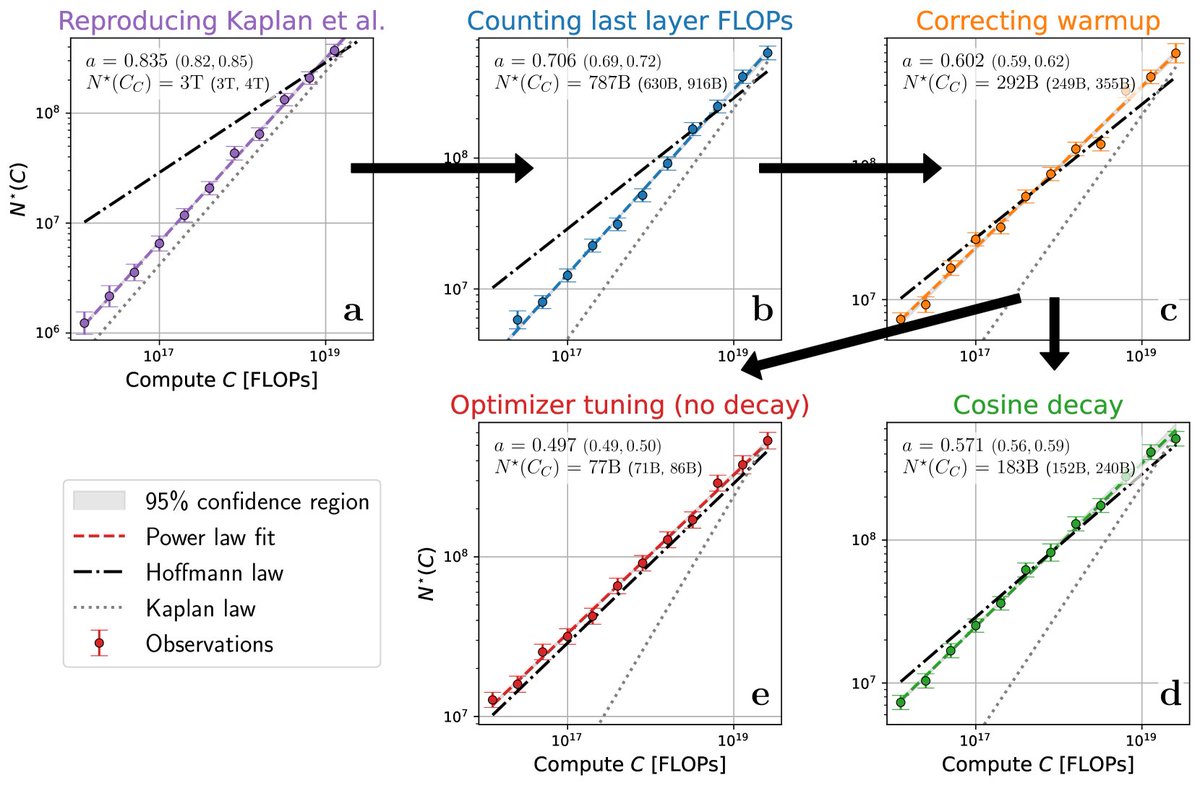

We've gotten some great questions about the notion of alignment in our width-scaling parameterization paper! arxiv.org/abs/2407.05872

A deep dive into the alignment metric and intuition 🧵 [1/16]

![Katie Everett (@_katieeverett) on Twitter photo](https://pbs.twimg.com/media/GSzuJqLaUAAS-Ac.jpg)