Mark Vero

@mark_veroe

PhD Student @ ETH Zürich @the_sri_lab

ID: 536484044

25-03-2012 17:22:01

29 Tweets

35 Followers

132 Following

We (well, not me; I am stuck in ZH) are presenting BaxBench at #ICML2025 from 4:30PM to 7PM in East Exhibition Hall A-B #E-806 as a spotlight💡. Come by and say hi to Niels Mündler, Nikola Jovanović, Jingxuan He, Veselin Raychev, and Baxi, our security inspector beaver.🦫

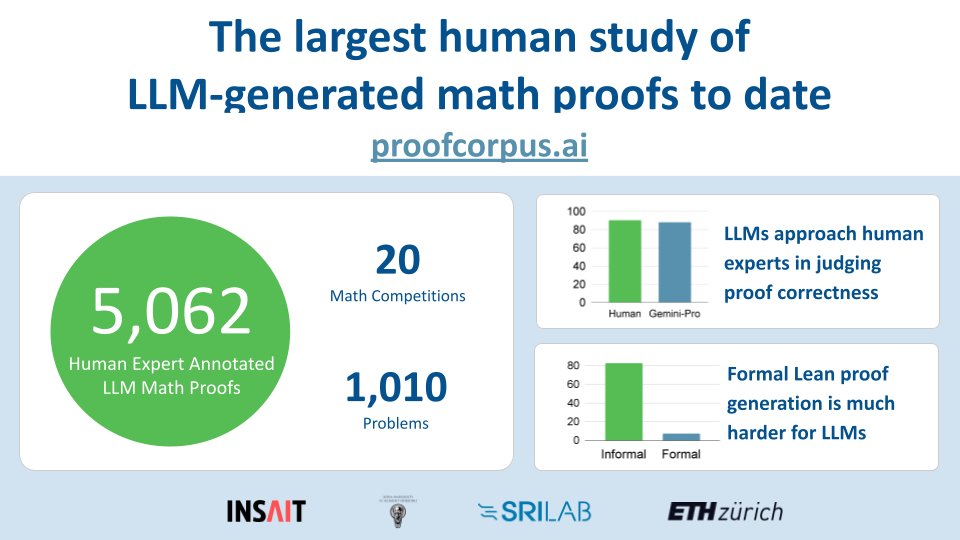

With the main track of #ICML2025 behind us, it is time for the cutting-edge workshops! The SRI Lab together with the INSAIT Institute is proud to present two papers at the AI4Math workshop by the matharena.ai team! Details in the 🧵