Lijie Fan

@lijie_fan

Research Scientist @GoogleDeepMind. CS PhD @MIT

ID: 1664080243374821387

http://lijiefan.me 01-06-2023 01:23:45

9 Tweets

231 Followers

51 Following

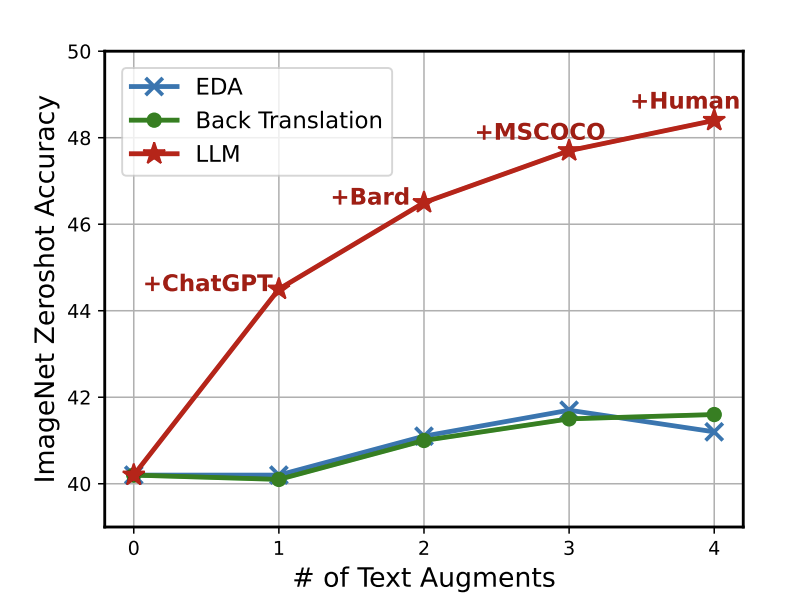

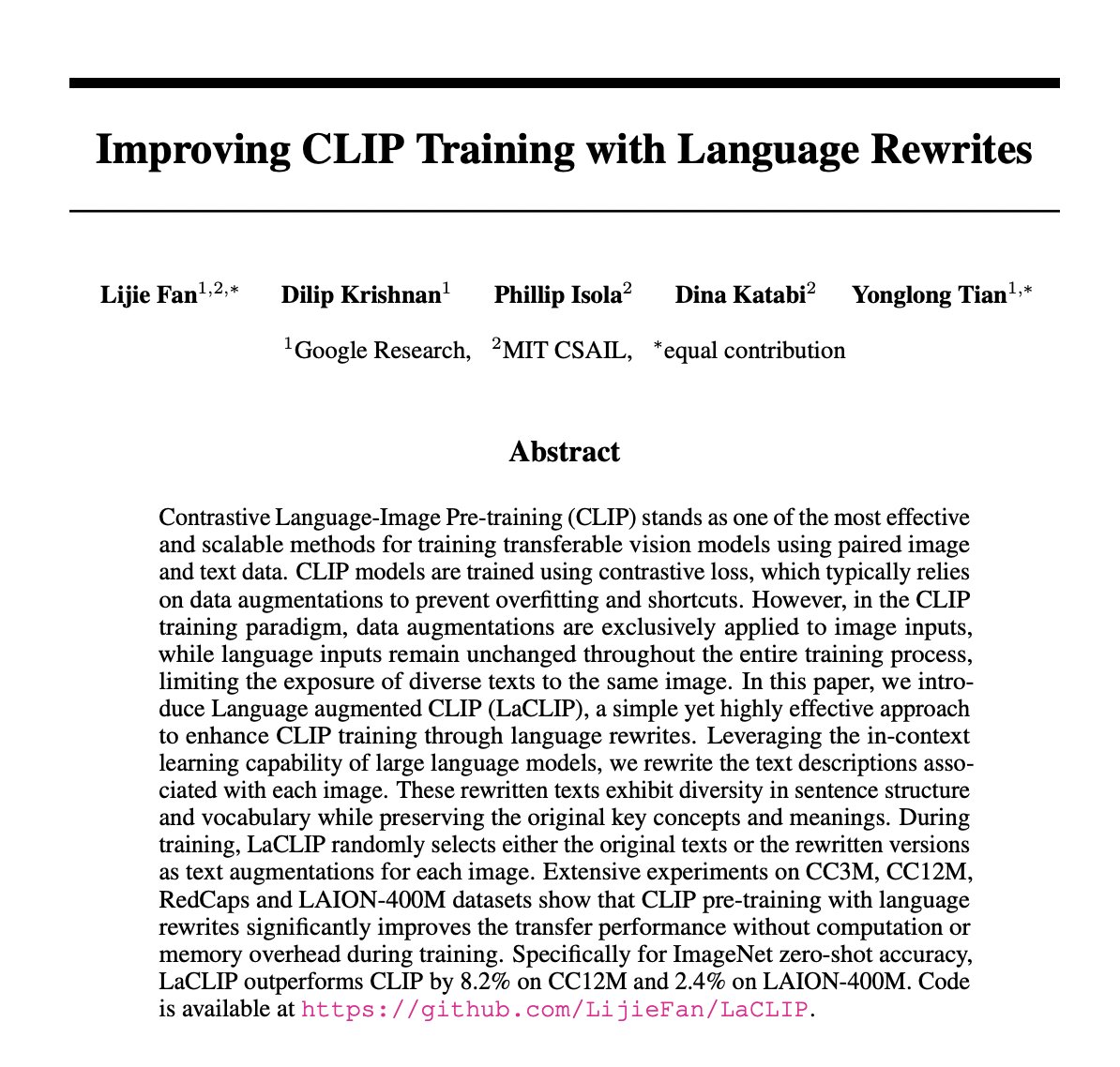

New paper! We show how to leverage pre-trained LLMs (ChatGPT, Bard, LLaMA) to rewrite captions and significantly improve over CLIP embeddings: arxiv.org/abs/2305.20088. Joint work with Yonglong Tian, Phillip Isola (MIT), Dina Katabi (MIT), and Lijie Fan (MIT).