Lars Quaedvlieg

@lars_quaedvlieg

Research Assistant @ CLAIRE | MSc Data Science @ EPFL 🇨🇭 | Interested in reasoning with foundation models and sequential decision-making 🧠

ID: 1694031255262617600

https://lars-quaedvlieg.github.io/

22-08-2023 16:58:25

19 Tweets

43 Followers

163 Following

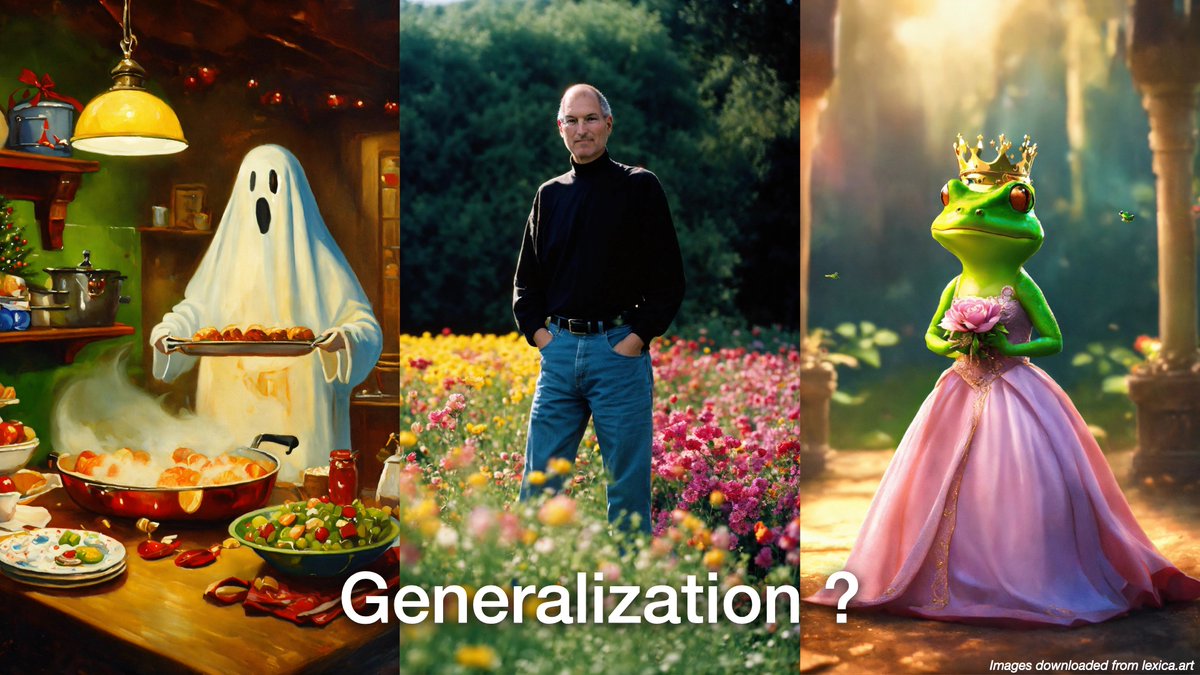

How are Stable Diffusion or DALL·E able to synthesize novel images? Does this arise from the architecture? Is it the training data? 🤔 Our new paper in #ICML2024 🚀 ICML Conference focuses on the interpolation aspect of generalization 🧵 1/8

🌟 Excited to share our latest work on making diffusion language models (DLMs) faster than autoregressive (AR) models! ⚡ It’s been great to work on this with Caglar Gulcehre 😎 Lately, DLMs are gaining traction as a promising alternative to autoregressive sequence modeling 👀 1/14 🧵

Want to make the big FFN more efficient? Check out our work tomorrow! 📅 Wednesday, 11 am – 2 pm PST 📍 East Exhibit Hall A–C #2010 Stop by and explore how structured matrices can make LLM training more efficient. 🚀 Caglar Gulcehre Skander Moalla