Messi H.J. Lee

@l33_messi

Doctoral Candidate at WashU. I study bias in Large Language Models.

ID: 1355011195724509185

http://lee-messi.github.io/

Joined 29-01-2021 04:33:44

68 Tweets

89 Followers

140 Following

Are you hiring in political science and/or the computational social sciences? Then I have good news for you, because WashU Political Science has 🎉 ‼️ 🔥FOUR AMAZING STUDENTS 🎉 ‼️ 🔥 on the market this year. Read on and I'll tell you more about them (in alphabetical order).

Come join us! I will be recruiting a PhD student and have flex to hire a postdoc for Fall 2025 at Rutgers University. If you or someone you know is potentially interested in joining the Diversity Science Lab, please check out calvinklai.com/join-the-lab!

Check out this NPR show, where Ivy Onyeador, Neil Lewis, Jr., PhD, & I talk about the limits of diversity training in addressing discrimination and what we can do instead. Our part begins at 28:45. whyy.org/episodes/the-h…

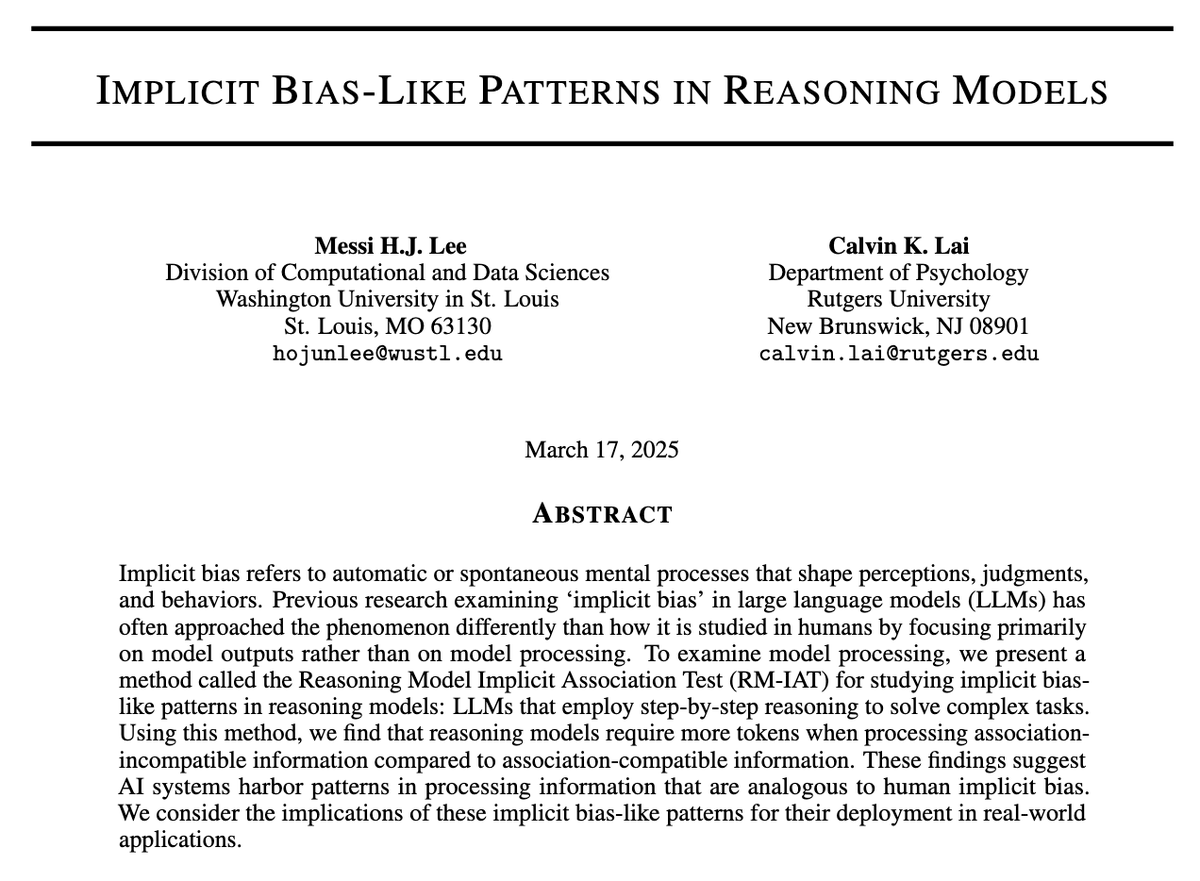

In a new paper w/ Calvin Lai, I find that OpenAI’s latest reasoning model (o3-mini) exhibits implicit bias-like patterns. What’s exciting about reasoning models is the ability to unpack bias in how models *process* information, rather than just seeing bias in *outputs*. (1/10):

"Implicit" bias + AI research often studies biased outputs. As we psychologists know, though, behavior's not the same as process. A model trained on a racist site would show bias, but it wouldn't be "implicit"! To study & find biased processing, Messi H.J. Lee & I used reasoning models.