Kunhao Zheng @ ICLR 2025

@kunhaoz

École Polytechnique X18, SJTU. Now in the amazing FAIR CodeGen @AIatMeta. Alumni: @Huggingface, Sea AI Lab, intern @openai

ID: 1087607633823952898

22-01-2019 07:07:21

131 Tweets

538 Followers

529 Following

1/ Happy to share my first accepted paper as a PhD student at Meta and École normale supérieure | PSL, which I will present at ICLR 2026: 📚 Our work proposes difFOCI, a novel rank-based objective for ✨better feature learning✨ In collab with David Lopez-Paz, Giulio Biroli and Levent Sagun!

Check out our poster tmr at 10am at the ICLR Bidirectional Human-AI Alignment workshop! We cover how on-policy preference sampling can be biased and our optimal response sampling for human labeling. NYU Center for Data Science AI at Meta Julia Kempe Yaqi Duan x.com/feeelix_feng/s…

❄️Andrew Zhao❄️ Yeah, we are doing it, and it’s called Soft Policy Optimization: arxiv.org/abs/2503.05453 It can learn from arbitrary on-policy or off-policy samples. TL;DR: it reparametrizes the Q function with your LLM. An elegant property: the Bellman equation is satisfied by construction, so there is no separate TD loss.

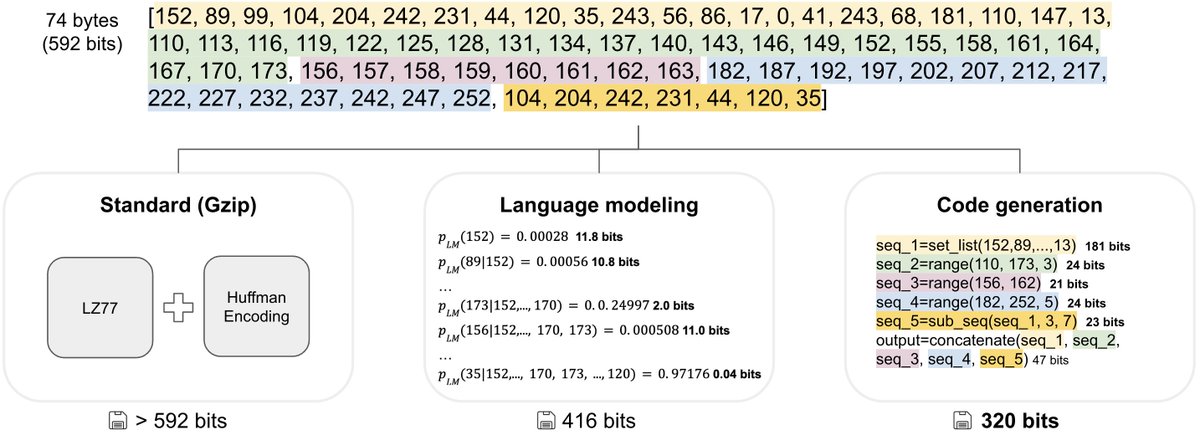

We present an Autoregressive U-Net that incorporates tokenization inside the model, pooling raw bytes into words, then into word groups. AU-Net focuses most of its compute on building latent vectors that correspond to larger units of meaning. Joint work with Badr Youbi Idrissi 1/8
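The two-stage pooling in the AU-Net tweet (bytes into words, then words into word groups) can be illustrated with a minimal sketch. This is a hypothetical illustration, not AU-Net's implementation: the helper names `split_points`, `pool_at` and `group_ends`, the rule that a word ends at each ASCII space, the choice to pool a span by keeping the vector at its last position, and the fixed group size `k` are all assumptions made for this example.

```python
from typing import List

# Hypothetical sketch, not AU-Net's code: the splitting rule, the pooling
# choice and the group size below are illustrative assumptions.


def split_points(data: bytes) -> List[int]:
    """Return the byte index closing each word (its trailing space, or the final byte).

    Assumed rule: a word ends at every ASCII space (0x20) and at end of input.
    """
    if not data:
        return []
    ends = [i for i, b in enumerate(data) if b == 0x20]
    if not ends or ends[-1] != len(data) - 1:
        ends.append(len(data) - 1)  # close the final word
    return ends


def pool_at(hidden: List[List[float]], ends: List[int]) -> List[List[float]]:
    """Pool each span by keeping the vector at its last position (assumed choice)."""
    return [hidden[i] for i in ends]


def group_ends(num_words: int, k: int) -> List[int]:
    """Assumed coarser stage: every k consecutive words form one word group."""
    if num_words == 0:
        return []
    ends = list(range(k - 1, num_words, k))
    if not ends or ends[-1] != num_words - 1:
        ends.append(num_words - 1)  # keep a trailing partial group
    return ends


if __name__ == "__main__":
    data = b"the cat sat"
    # Stand-in for per-byte hidden states: one 2-d vector per byte.
    hidden = [[float(i), float(i) * 0.5] for i in range(len(data))]
    word_ends = split_points(data)                      # positions closing each word
    words = pool_at(hidden, word_ends)                  # stage 1: bytes -> words
    groups = pool_at(words, group_ends(len(words), 2))  # stage 2: words -> groups
    print(word_ends, len(words), len(groups))           # prints [3, 7, 10] 3 2
```

Each stage shrinks the sequence, which is one way to read the tweet's point that most compute goes to vectors for larger units of meaning; a real model would run learned layers between the pooling stages.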