Jimmy

@jzheng1994

@PrimeIntellect

ID: 779349826853011457

23-09-2016 16:00:53

87 Tweets

497 Followers

1.1K Following

We asked Vincent Weisser about his vision for utilizing GPUs. "Every idling GPU is a market failure. Compute will be one of the biggest slices of GDP." "The goal is to have a fault-tolerant 'genius pool' of compute, ranging from H100s, A100s to even RTX 3090s." "Unutilized

if you're into pure maths and can code come join me at Prime Intellect - there's lots of fun and alpha in being able to reason about the parameter space through the tools of differential and algebraic geometry.

We asked Sholto Douglas from Anthropic about the costs of RL (reinforcement learning) runs. "In Dario Amodei's essay, he said that RL runs cost only $1M back in December." "RL is more naively parallelizable and scalable than pre-training." "With pre-training, you need

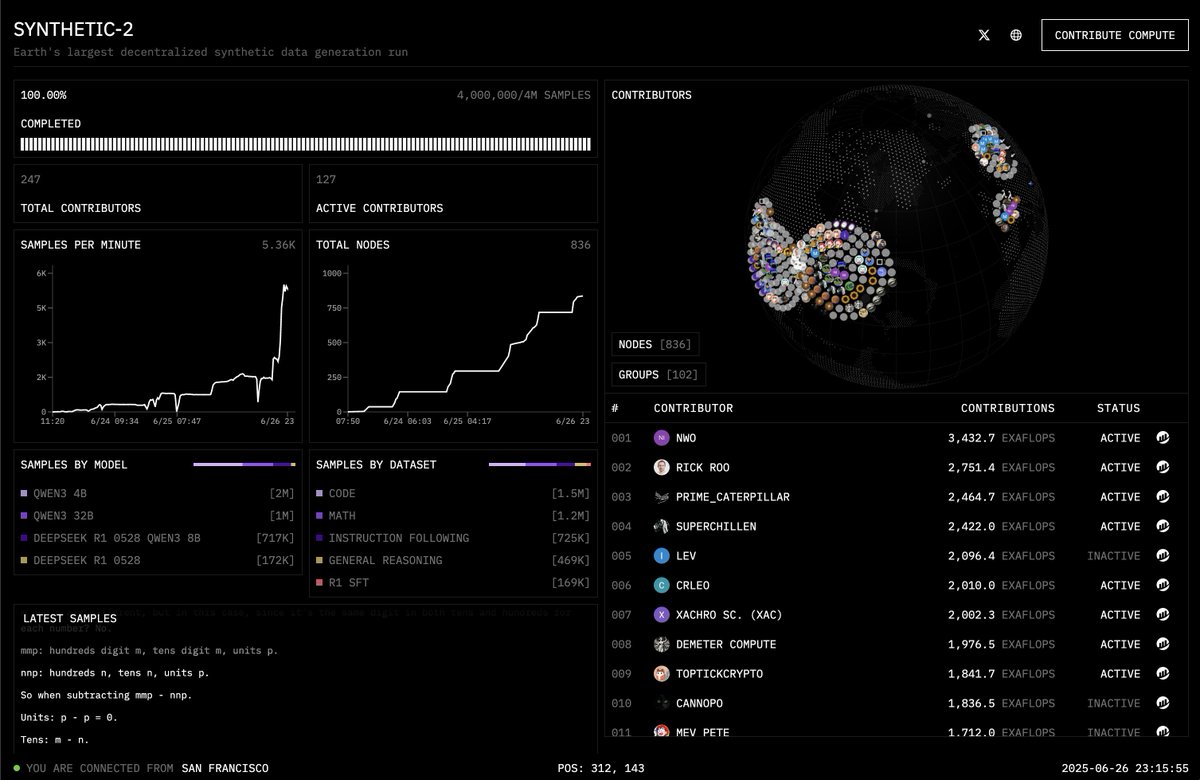

Releasing SYNTHETIC-2: our open dataset of 4M verified reasoning traces spanning a comprehensive set of complex RL tasks and verifiers. Created by hundreds of compute contributors across the globe via our pipeline-parallel decentralized inference stack. primeintellect.ai/blog/synthetic…

we’re hiring AI researchers, engineers, growth, interns, etc. at Prime Intellect. ping me if you want to work on open AGI & frontier research infra for everyone