Jiawei Liu

@jiaweiliu_

Cooking good programs.

ID: 1328882067401129985

http://www.jw-liu.xyz

18-11-2020 02:06:03

568 Tweets

1.1K Followers

1.1K Following

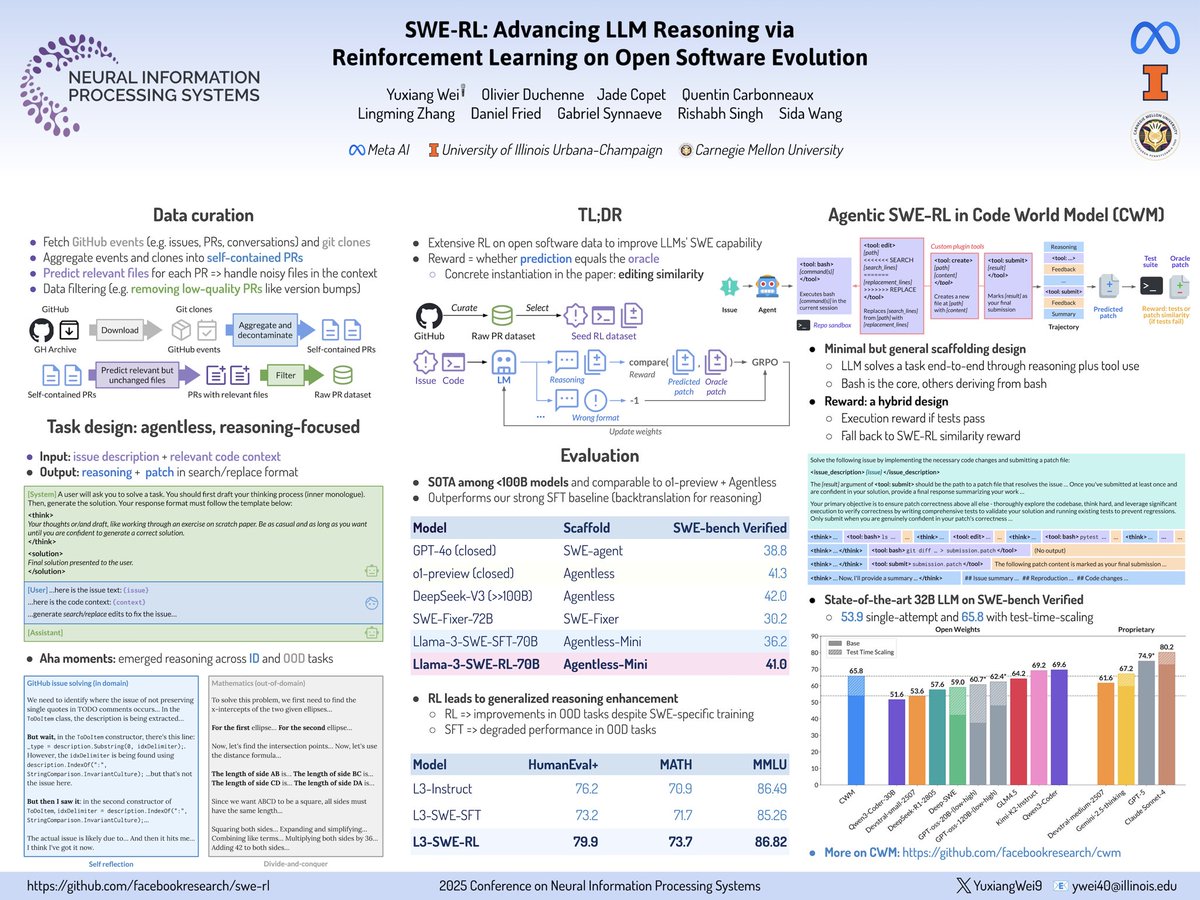

Check out Yuxiang Wei's new work! Online learning plus a simple patch-similarity reward makes models better SWEs!
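The tweet doesn't spell out the reward. Here is a minimal sketch that assumes "patch similarity" means a string-similarity score between the model's generated patch and a reference (oracle) patch. It computes that score with Python's standard-library `difflib.SequenceMatcher`. The function name `patch_similarity_reward` and the -1.0 penalty for a missing or malformed patch are illustrative assumptions, not the work's confirmed design. The online-learning part is not shown.

```python
import difflib
from typing import Optional


def patch_similarity_reward(predicted: Optional[str], oracle: str) -> float:
    """Hypothetical reward: -1.0 if no well-formed patch was produced,
    otherwise difflib's similarity ratio in [0, 1] against the reference patch."""
    if predicted is None:  # assumption: parsing failures are signalled as None
        return -1.0
    return difflib.SequenceMatcher(None, predicted, oracle).ratio()


oracle = "-    return a - b\n+    return a + b\n"
print(patch_similarity_reward(oracle, oracle))  # 1.0
print(patch_similarity_reward(None, oracle))    # -1.0
# A partially right patch lands strictly between 0 and 1:
print(patch_similarity_reward("-    return a - b\n+    return a * b\n", oracle))
```

A dense, continuous score like this gives partial credit to near-miss patches. That is one plausible reason a "simple" reward can still be a useful learning signal, though this is a reading of the tweet rather than a claim about the paper.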

Noam Brown: “Designing hard evals is easy, designing meaningful ones is hard.” -unknown

I’m going to be recruiting students through both the Language Technologies Institute at @CarnegieMellon (NLP) and CMU Engineering & Public Policy for fall 2026! If you are interested in reasoning, memorization, AI for science & discovery, and of course privacy, you can catch me at ACL! Prospective students, please fill out this form:

📢 The Software Engineering group at Cornell Bowers Computing and Information Science is growing fast: we're now 8 PhD students strong! I’m recruiting PhD students for Fall 2026! If you are interested in the intersection of SE and AI, apply to Cornell CS and reach out! Deadline: Dec 15, 2025. RT!

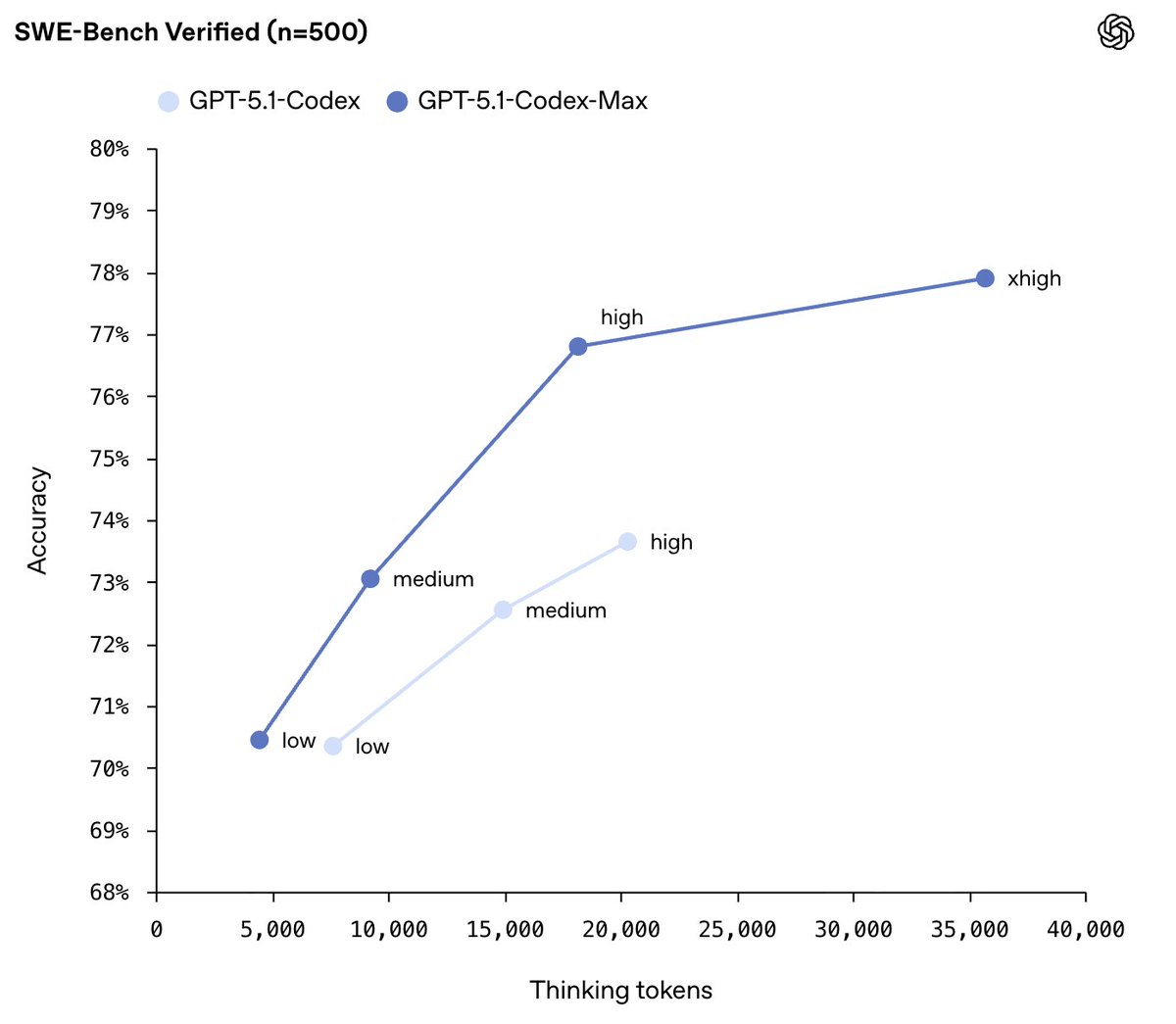

Today we at OpenAI are releasing GPT-5.1-Codex-Max, which can work autonomously for more than a day over millions of tokens. Pretraining hasn't hit a wall, and neither has test-time compute. Congrats to my teammates Kevin Stone & Michael Malek for helping to make it possible!

Multi-turn reasoning convos can drop earlier CoTs to save context, but then we need to prefill assistant[i-1] + user[i], instead of just user[i] as with non-reasoning models. In agentic tasks, assistant outputs can be pretty long, so I guess a quick optimization could be “pre-”prefilling.

![Jiawei Liu (@jiaweiliu_) on Twitter photo](https://pbs.twimg.com/media/Gta5AKpXIAAbw-v.png)
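Why does dropping the CoT force a re-prefill of assistant[i-1]? Under causal attention, each token's KV entry depends on every token before it. The answer tokens decoded right after the CoT therefore aren't valid once the CoT is removed from the prompt. Below is a toy simulation of that effect. It assumes a single-sequence prefix cache that reuses KV only for an exact token prefix. It also reads "pre-prefilling" as recomputing the CoT-stripped history right after generation, while waiting for the next user or tool message. All names (`PrefixCache`, `render`, `critical_path_prefill`, `tok`) are hypothetical, and the token lists are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Turn = Tuple[List[str], List[str], List[str]]  # (user, cot, answer) token lists


def tok(prefix: str, n: int) -> List[str]:
    """Make n distinct placeholder tokens, e.g. tok("u0", 2) -> ["u0_0", "u0_1"]."""
    return [f"{prefix}_{k}" for k in range(n)]


def common_prefix_len(a: List[str], b: List[str]) -> int:
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


@dataclass
class PrefixCache:
    """Toy single-sequence KV cache: reuse holds only for an exact token prefix."""
    tokens: List[str] = field(default_factory=list)

    def prefill(self, context: List[str]) -> int:
        """Serve `context`; return how many tokens had to be freshly prefilled."""
        hit = common_prefix_len(self.tokens, context)
        self.tokens = list(context)
        return len(context) - hit

    def decode(self, generated: List[str]) -> None:
        """Decoding writes KV entries for every generated token (CoT + answer)."""
        self.tokens.extend(generated)


def render(turns: List[Turn], drop_cot: bool) -> List[str]:
    """Flatten finished turns into the prompt, optionally dropping their CoTs."""
    ctx: List[str] = []
    for user, cot, answer in turns:
        ctx += user + ([] if drop_cot else cot) + answer
    return ctx


def critical_path_prefill(turns: List[Turn], drop_cot: bool,
                          pre_prefill: bool = False) -> List[int]:
    """Tokens prefilled after each user[i] arrives, i.e. on the latency-critical path."""
    cache = PrefixCache()
    costs = []
    for i, (user, cot, answer) in enumerate(turns):
        costs.append(cache.prefill(render(turns[:i], drop_cot) + user))
        cache.decode(cot + answer)
        if pre_prefill and drop_cot:
            # "Pre-prefill": while idle waiting for user[i+1] (e.g. a tool result),
            # rebuild the cache for the CoT-stripped history ending in answer[i].
            cache.prefill(render(turns[: i + 1], drop_cot))
    return costs


turns = [
    (tok("u0", 3), tok("c0", 5), tok("a0", 4)),
    (tok("u1", 2), tok("c1", 6), tok("a1", 7)),
    (tok("u2", 3), tok("c2", 1), tok("a2", 1)),
]
print(critical_path_prefill(turns, drop_cot=False))                   # [3, 2, 3]
print(critical_path_prefill(turns, drop_cot=True))                    # [3, 6, 10]
print(critical_path_prefill(turns, drop_cot=True, pre_prefill=True))  # [3, 2, 3]
```

With CoTs kept, or for a non-reasoning model, each turn prefills only user[i]. Dropping CoTs adds answer[i-1] to every critical-path prefill (6 = 4 + 2, 10 = 7 + 3). Pre-prefilling does not remove that work. It only moves it into the gap before the next message arrives, which is where the latency win would come from in this model.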