Jiateng Liu

@jiatengliu

ID: 1580476081903058947

13-10-2022 08:30:49

27 Tweets

94 Followers

118 Following

We're also excited to announce our #EMNLP2024 panel on Wed., Nov. 13! Title: The Importance of NLP in the LLM Era, featuring Heng Ji, Rada Mihalcea, Alice Oh, and Sasha Rush, moderated by Monojit Choudhury.

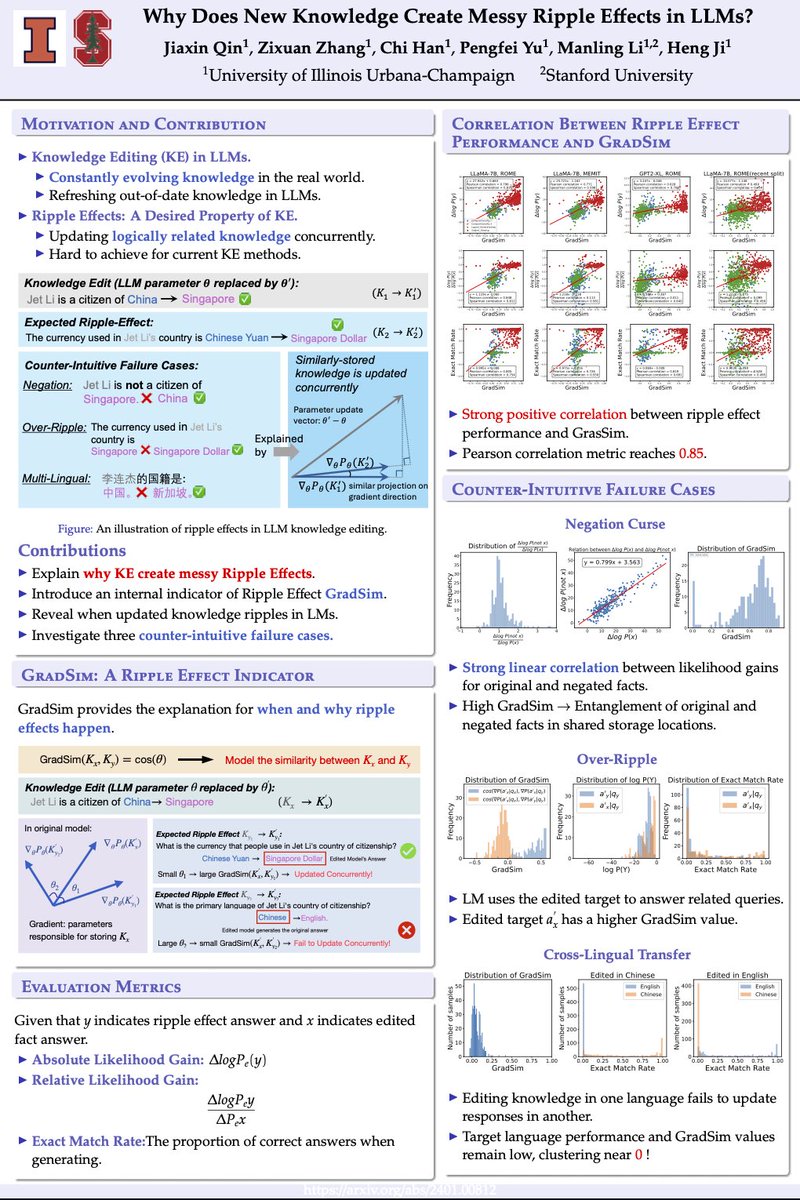

I am at #EMNLP2024! I will present our work "Why Does New Knowledge Create Messy Ripple Effects in LLMs?" on Wed. at 10:30am. Thanks to all the collaborators: Heng Ji, Zixuan Zhang, Chi Han, and Manling Li. Looking forward to having a chat! Paper Link: arxiv.org/pdf/2407.12828

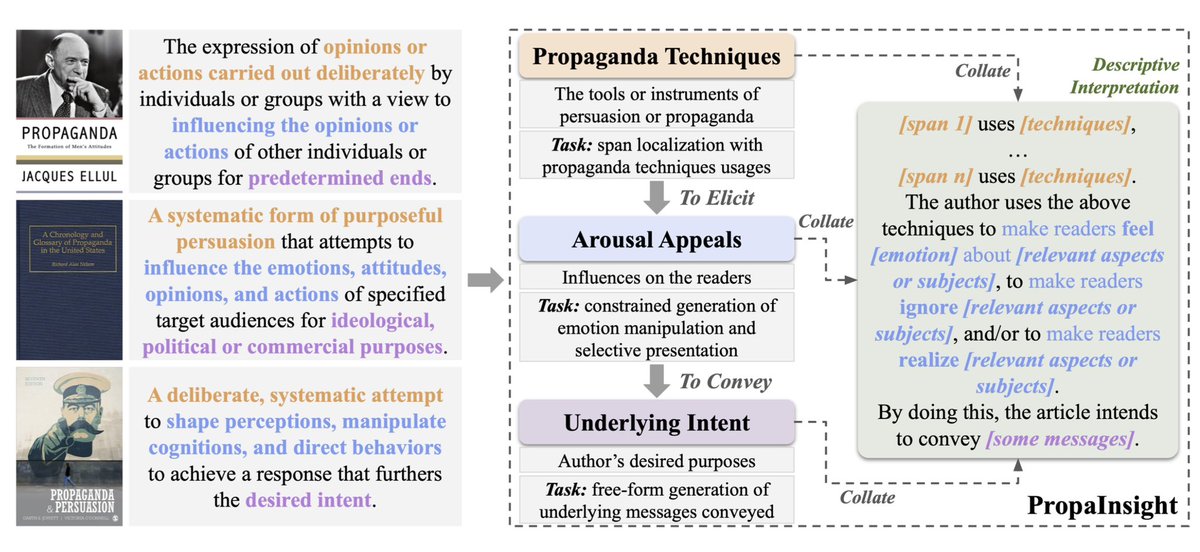

Excited to share 1/2 of my #coling2025 papers: PropaInsight: Toward Deeper Understanding of Propaganda! Huge thanks to my coauthors Jiateng Liu, May Fung and team, and special thanks to Julia Hirschberg, Heng Ji, Preslav Nakov for their support! Read here: arxiv.org/pdf/2409.18997

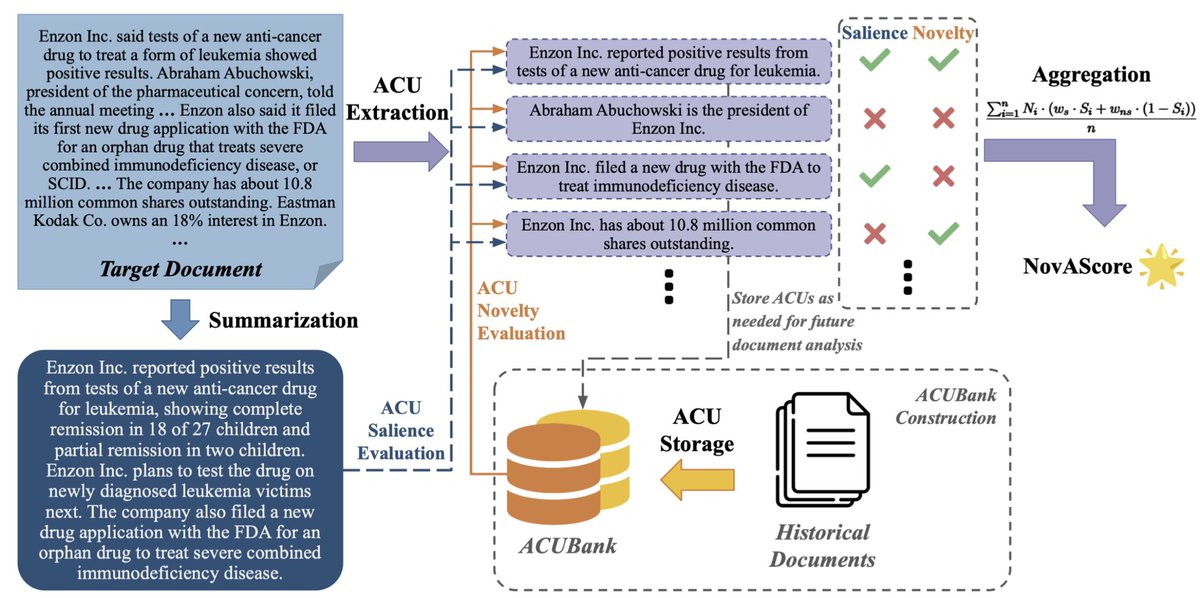

Here’s 2/2 of my #coling2025 papers: NoVAScore🌟 We introduce an automated metric to assess document novelty and salience. Huge thanks to my coauthor Ziwei (Sara) Gong and the team for their amazing collaboration! Read here: arxiv.org/pdf/2409.09249

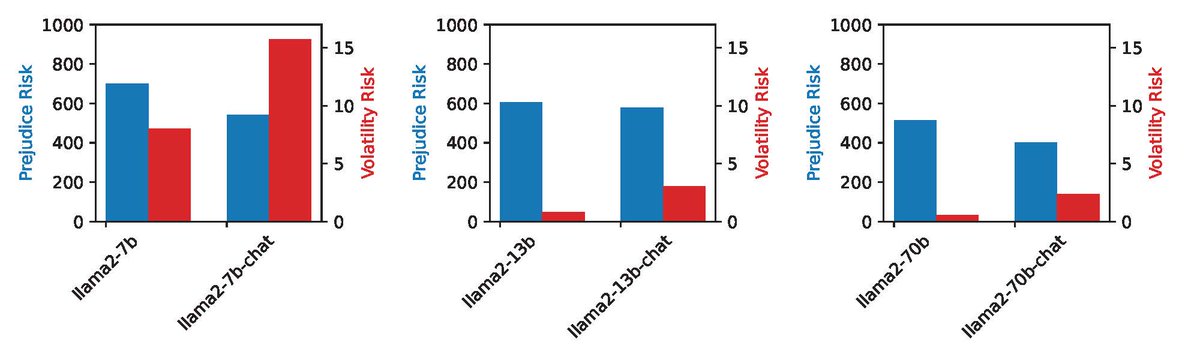

Yuji Zhang (@yuji_zhang_nlp): 🔍 New Preprint! Why do LLMs generate hallucinations even when trained on all truths? 🤔 Check out our paper [arxiv.org/abs/2407.08039]

💡 We find that universally, data imbalance causes LLMs to over-generalize popular knowledge and produce amalgamated hallucinations.

📊

![Yuji Zhang (@yuji_zhang_nlp) on Twitter photo](https://pbs.twimg.com/media/GToPDqlaoAAGjhE.jpg)

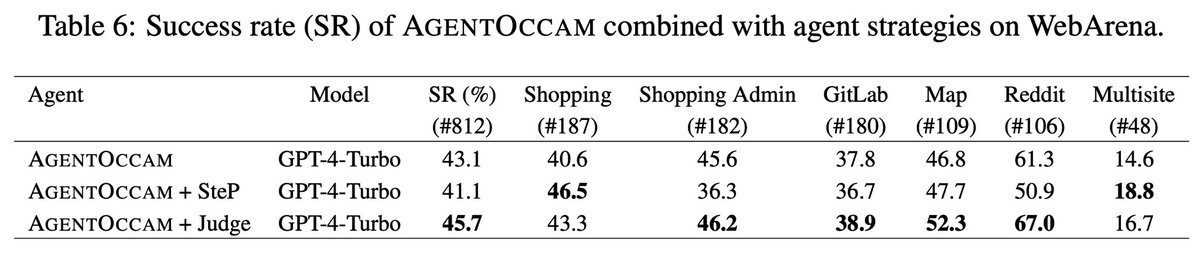

Yuji Zhang (@yuji_zhang_nlp): 🧠 Let’s teach LLMs to learn smarter, not harder 💥 [arxiv.org/pdf/2506.06972]

🤖 How can LLMs verify complex scientific information efficiently?

🚀 We propose modular, reusable atomic reasoning skills that reduce LLMs’ cognitive load to verify scientific claims with little data.

![Yuji Zhang (@yuji_zhang_nlp) on Twitter photo](https://pbs.twimg.com/media/GtsRtsQXsAADFlD.jpg)