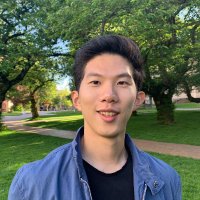

Haochen Zhang

@jhaochenz

Member of Technical Staff @AnthropicAI | prev. CS PhD @StanfordAILab

ID: 1704090745

https://cs.stanford.edu/~jhaochen/

27-08-2013 08:06:18

26 Tweets

743 Followers

259 Following

With the right regularizer, linear autoencoders recover the ordered principal components, but very slowly. Interesting model system for representation learning since we know the optimal representation. New work w/ Jenny (Xuchan) Bao, James Lucas, and Sushant Sachdeva. arxiv.org/abs/2007.06731

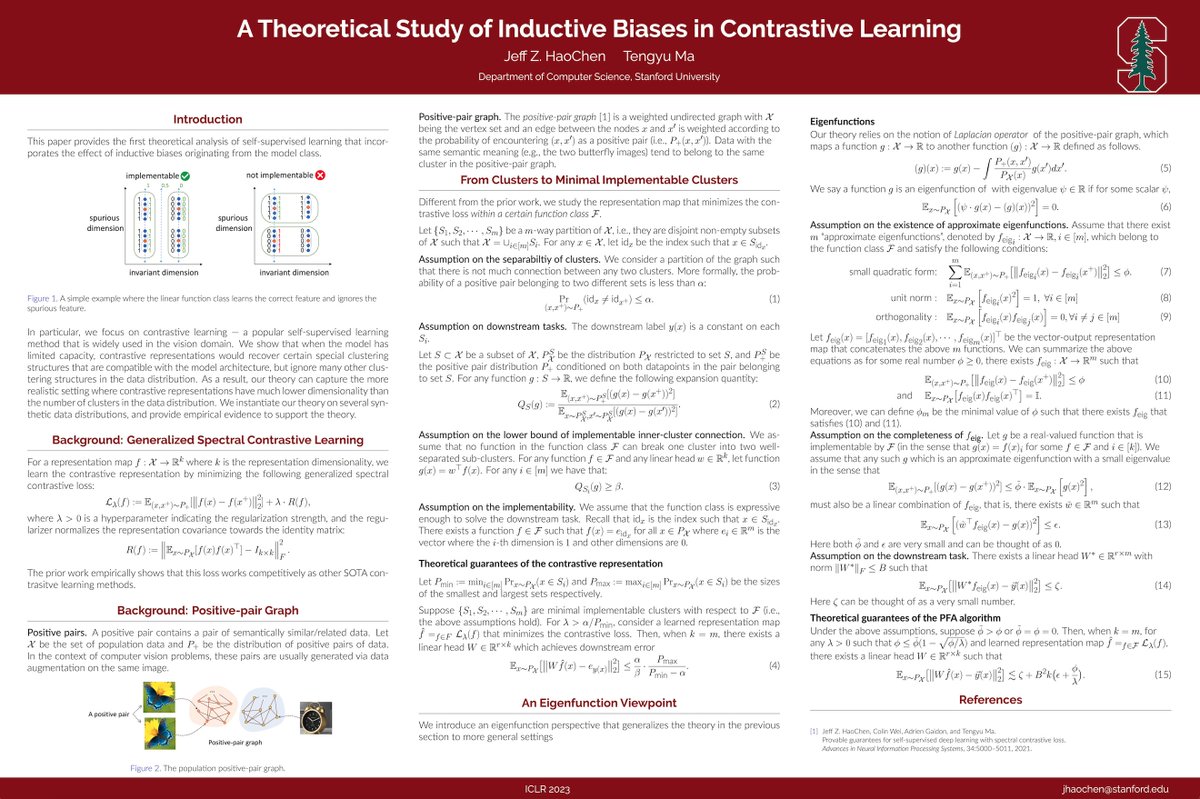

Curious about why contrastive learning produces representations useful for downstream tasks? Check out Haochen Zhang, Colin Wei, and Tengyu Ma's theoretical explanation in our latest blog post: ai.stanford.edu/blog/understan… Based on the NeurIPS 2021 oral paper: arxiv.org/abs/2106.04156

Our paper got accepted to ICML '22 as a long talk! Thanks to all the co-authors (Kendrick Shen, Robbie Jones, Sang Michael Xie, Haochen Zhang, Tengyu Ma, Percy Liang). Congrats Kendrick on yet another oral (as an undergrad!)

📢 Introducing Voyage AI @Voyage_AI_! Founded by a talented team of leading AI researchers and me 🚀🚀. We build state-of-the-art embedding models (e.g., better than OpenAI 😜). We also offer custom models that deliver 🎯+10-20% accuracy gain in your LLM products. 🧵