Jonathan Kamp

@jb_kamp

Computational Linguist; PhD Candidate @CLTLVU; Interpretability in NLP; Argument Mining

ID: 1513502208414916609

http://jbkamp.github.io 11-04-2022 13:00:34

30 Tweets

71 Followers

92 Following

Getting ready to present my work (together with my supervisor @PiekVossen) at COLING 2022. If you are interested in the evaluation of open-domain dialogue, come to room 104 at 14:45, join us online, or read the paper here: aclanthology.org/2022.ccgpk-1.3…

Our @CLTLVU colleague Selene Baez Santamaria together with @PiekVossen and Thomas Baier just won the best paper award at the Workshop on Customized Chat Grounding Persona and Knowledge. Hurray! COLING 2022

Thanks! Khalid Al-Khatib next time in Groningen? ;) Talking about granularity: any overlap with a paper in this same session by Mattes Ruckdeschel from Leibniz-Institut für Medienforschung? Check the proceedings here: aclanthology.org/volumes/2022.a…

So far today: I've been able to virtually attend the co-occurring NLLP Workshop and #BlackboxNLP and listen to some inspiring talks. 🧙 In terms of hard skills, I learned how to mute/unmute individual Zoom Chrome tabs

Some smooth work by Gabriele Sarti and colleagues from the #InDeep project in releasing a tool for interpreting sequence generation models. Keep up the development! @InseqDev

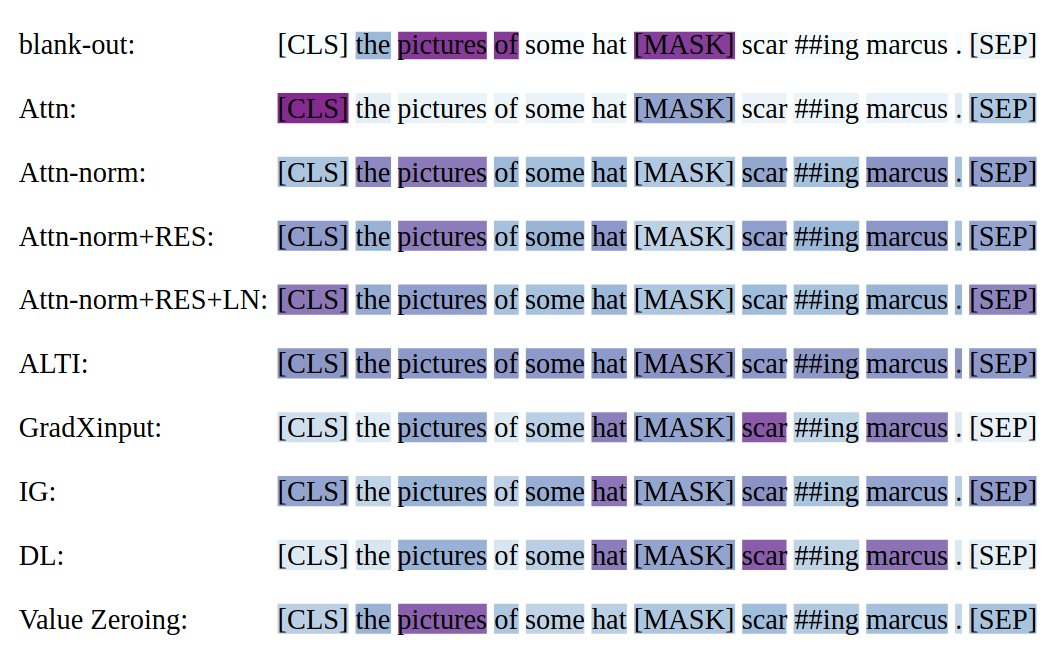

Shout-out to Hosein Mohebbi et al. from our #InDeep consortium for their awesome work "Quantifying Context Mixing in Transformers", introducing Value Zeroing as a new promising post-hoc interpretability approach for NLP! 🎉 Paper: arxiv.org/abs/2301.12971

Talk at GroNLP at the invitation of Khalid Al-Khatib, with my wonderful colleagues Jonathan Kamp and @myrthereuver Leiden Computer Science HHAI Conference - 🦋 @hhaiconference.bsky.social Text Mining and Retrieval Leiden @CLTLVU Interactive Intelligence Group

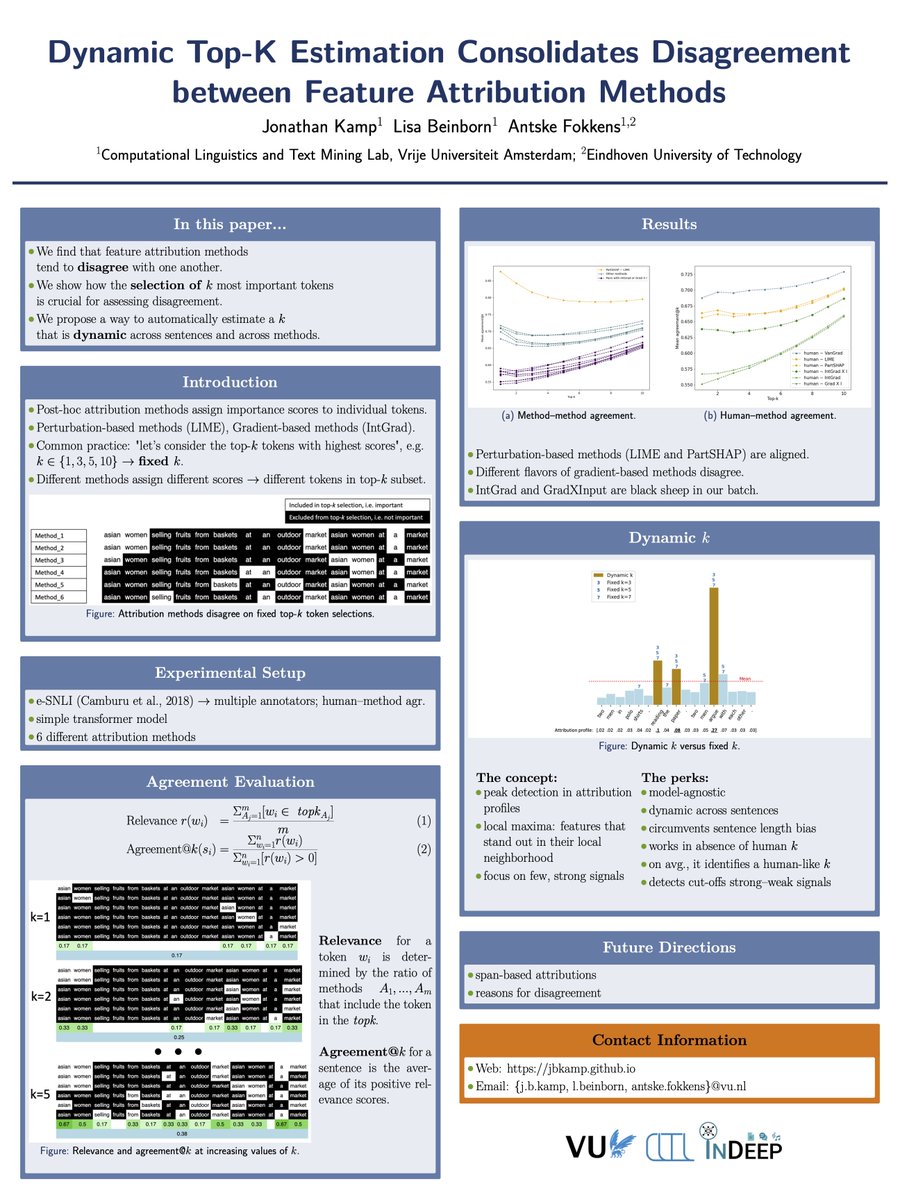

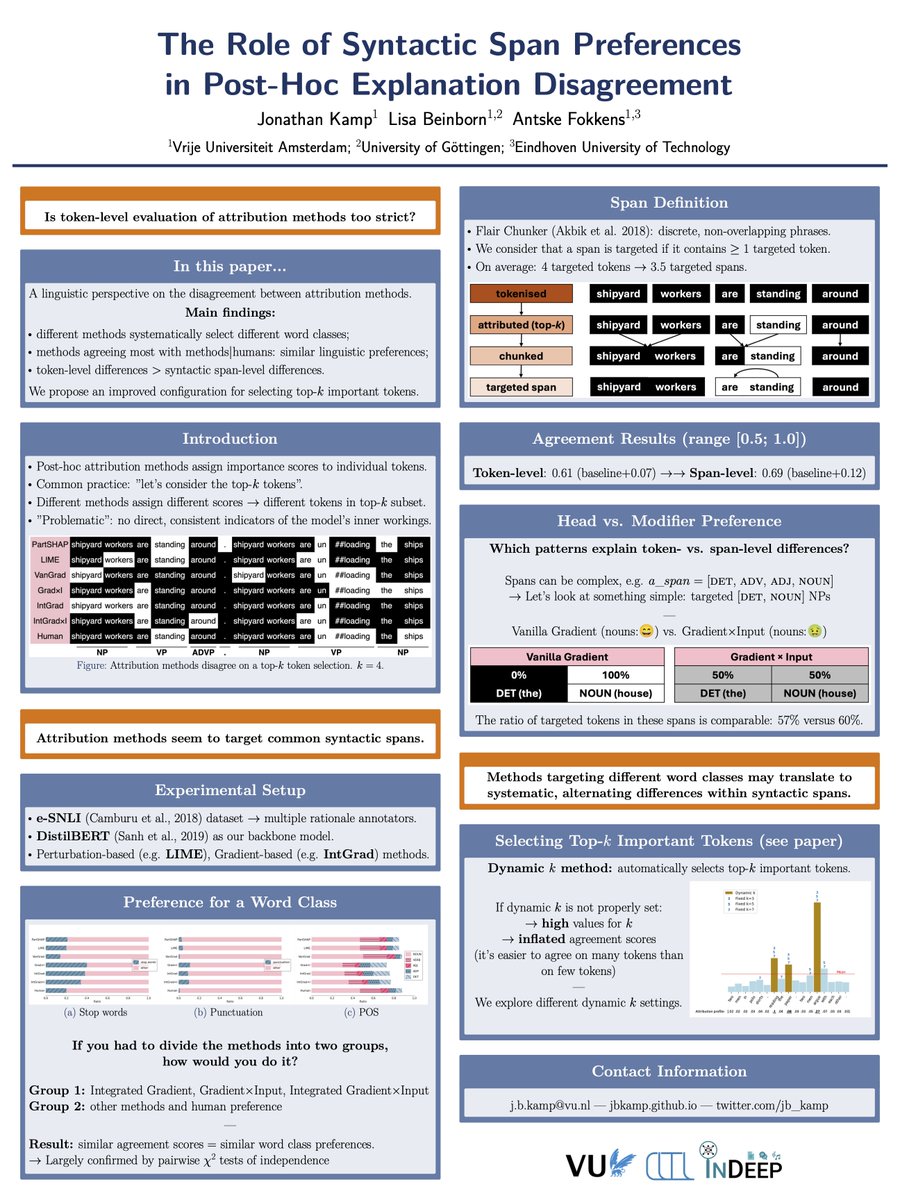

Did you know that token-level differences between feature attribution methods are smoothed out if we compare them on the syntactic span level? I'll be at LREC COLING 2024 (Turin) next month to present our paper on the linguistic preferences of such methods :) arxiv.org/abs/2403.19424

Also at LREC COLING 2024 and interested in #interpretability in #nlp / #xai? I'm happy to tell you something about post-hoc attribution methods and their linguistic preferences 🔻 Poster session >> from 17:30 onwards Proceedings: aclanthology.org/2024.lrec-main…

⚠️ Citations from prompting or NLI seem plausible, but may not faithfully reflect LLM reasoning. 🏝️ MIRAGE detects context dependence in generations via model internals, producing granular and faithful RAG citations. 🚀 Demo: huggingface.co/spaces/gsarti/… Fun collab w/ Jirui Qi @EMNLP25 ✈️,