James Hensman

@jameshensman

Machine learner. Building big Bayesian models @microsoft. Views my own. he/him.

ID: 14699604

http://jameshensman.github.io 08-05-2008 12:35:55

548 Tweets

7.7K Followers

2.2K Following

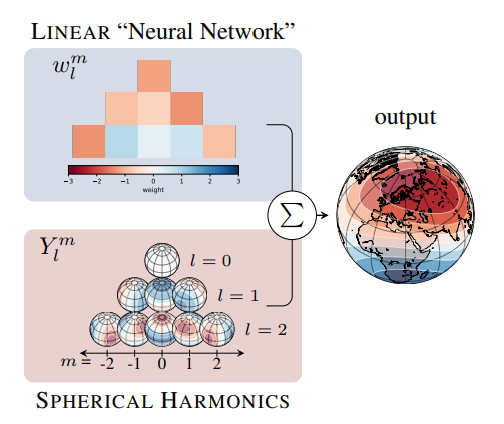

New preprint out on geographic location encoding using spherical harmonics and sinusoidal representations! 🌐➡️🌏 Work led by Marc Rußwurm and w/ Esther Rolf, Robin Zbinden and devistuia. 📄Paper: arxiv.org/abs/2310.06743 💻Code: github.com/marccoru/locat… 🧵Thread: 👇 1/11

The first "Research Focus" blog post of 2024 from Microsoft Research highlights papers from a couple of internship projects that I co-supervised with Siân Lindley and James Hensman last summer. Blog post: microsoft.com/en-us/research…

We're hiring!🚀 The Alexandria team (microsoft.com/en-us/research…) at Microsoft Research (Cambridge, UK) is looking for summer #interns. Come work with us on KB-powered LLMs, generative knowledge linking, transformer compression, & other exciting problems. More info: jobs.careers.microsoft.com/global/en/job/…

It was such a pleasure to work with Saleh Ashkboos and @max_croci on this method for LLM compression during Saleh's internship last year.

Come and work with us to make Language Models more efficient. A Research Residency at Microsoft Research and 365 Research in Cambridge, UK. jobs.careers.microsoft.com/global/en/job/…

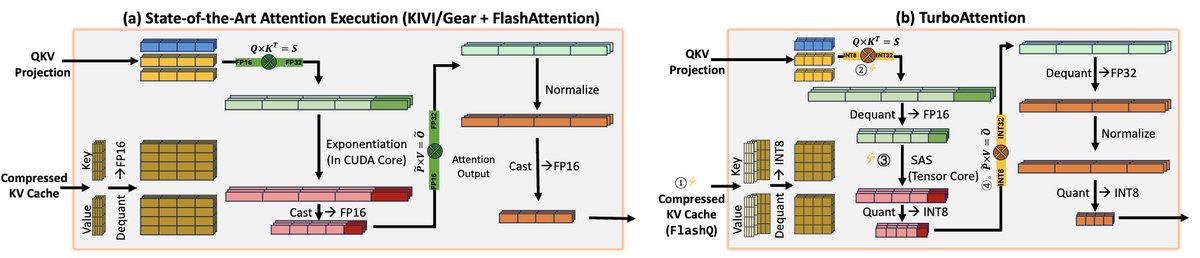

[1/7] Happy to release 🥕QuaRot, a post-training quantization scheme that enables 4-bit inference of LLMs by removing the outlier features. With Amirkeivan Mohtashami @max_croci Dan Alistarh Torsten Hoefler 🇨🇭 James Hensman and others Paper: arxiv.org/abs/2404.00456 Code: github.com/spcl/QuaRot

Saleh Ashkboos Amirkeivan Mohtashami @max_croci Dan Alistarh Torsten Hoefler 🇨🇭 James Hensman Awesome project. Just did an installation video of QuaRot locally. Thanks for the great project. youtu.be/r7FvhB1WgI8?si…

We are hiring a senior researcher in ML for healthcare at MSR Cambridge (UK)! The position is in my team, so if you get it you will work with me (is this a pro or a con? do not answer). Focus is multimodal (~vision-language) models for radiology! Link: jobs.careers.microsoft.com/global/en/job/…

Today we bring the latest #DeepSeek distilled models to #Copilot+ PCs, where they really shine due to the devices’ efficiency and power. This performance is unprecedented. It’s so enabling to put this class of #AI reasoning running on your Copilot+ PC! blogs.windows.com/windowsdevelop…