Haoqin Tu

@haoqint

Passionate researcher in #NLProc, #Multimodality, and #AISafety; Ph.D. Student @UCSC, @BaskinEng; Prev @UCAS1978.

ID: 1377915615055282180

http://haqtu.me 02-04-2021 09:27:47

118 Tweets

168 Followers

230 Following

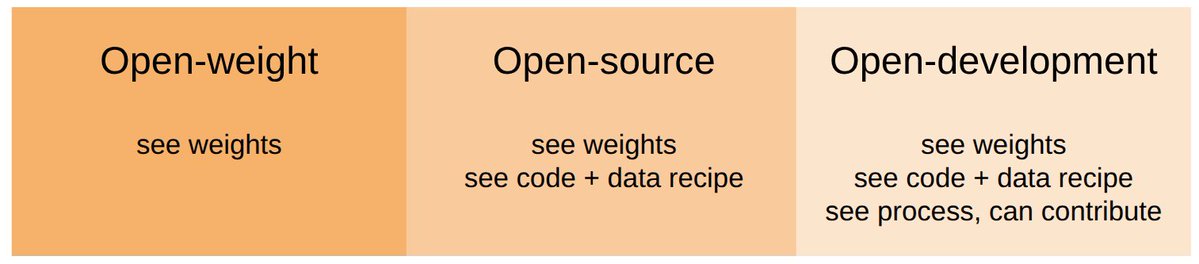

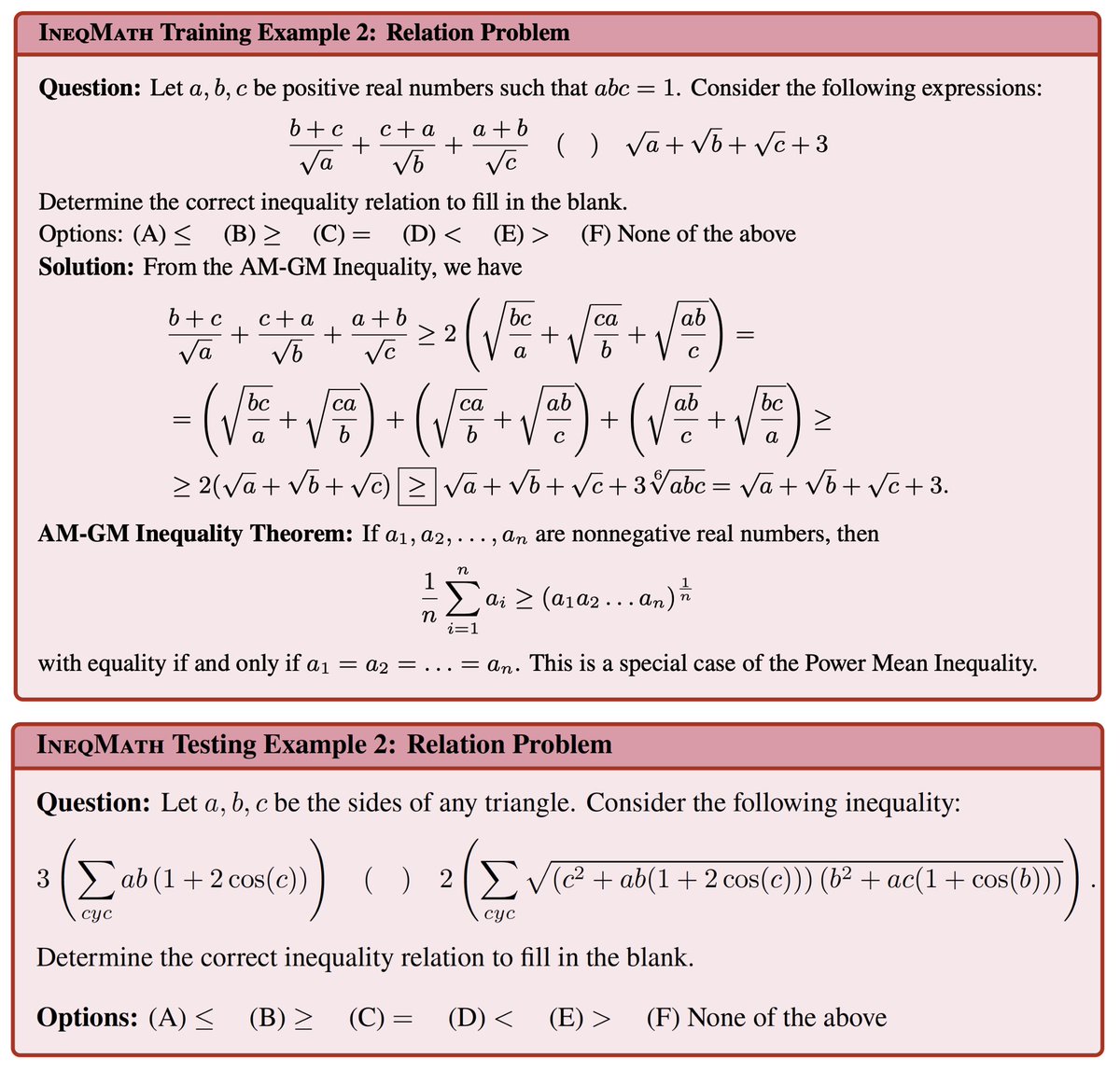

Nice collab with Hardy Chen: solid insights that challenge the traditional "SFT, then RL" path for training reasoning MLLMs. What we really need are reliable rewards in RL and diverse, high-quality data!

So cool to see big_vision (github.com/google-researc…) being used as a foundation for open-source projects, even ones completely outside of Google. Lucas Beyer (bl16) @xzhai André Susano Pinto Andreas Steiner