Gopala Anumanchipalli

@gopalaspeech

Robert E. and Beverly A. Brooks Assistant Professor @UCBerkeley @UCSF

Formerly @CarnegieMellon @ISTecnico @IIIT_Hyderabad

ID: 996829570274807808

http://people.eecs.berkeley.edu/~gopala/ 16-05-2018 19:07:44

150 Tweets

948 Followers

586 Following

This work led by JIACHEN LIAN and collaborators at ALBA Language Neurobiology laboratory will be presented at #NeurIPS2024

Catch us at poster #242 on Thursday at 4:30 PM! More details here: 🔗 Website: tinglok.netlify.app/files/avsounds… 📄 arXiv: arxiv.org/abs/2409.14340 w/ Ren Wang, Bernie Huang, Andrew Owens, and Gopala Anumanchipalli.

Self-Supervised Syllabic Representation Learning from speech, with unsupervised syllable discovery & linear-time tokenization of speech at the syllabic rate (~4 Hz)!! Work from my group (Cheol Jun Cho, Nick Lee, and Akshat Gupta; Berkeley AI Research) to be presented at #ICLR2025

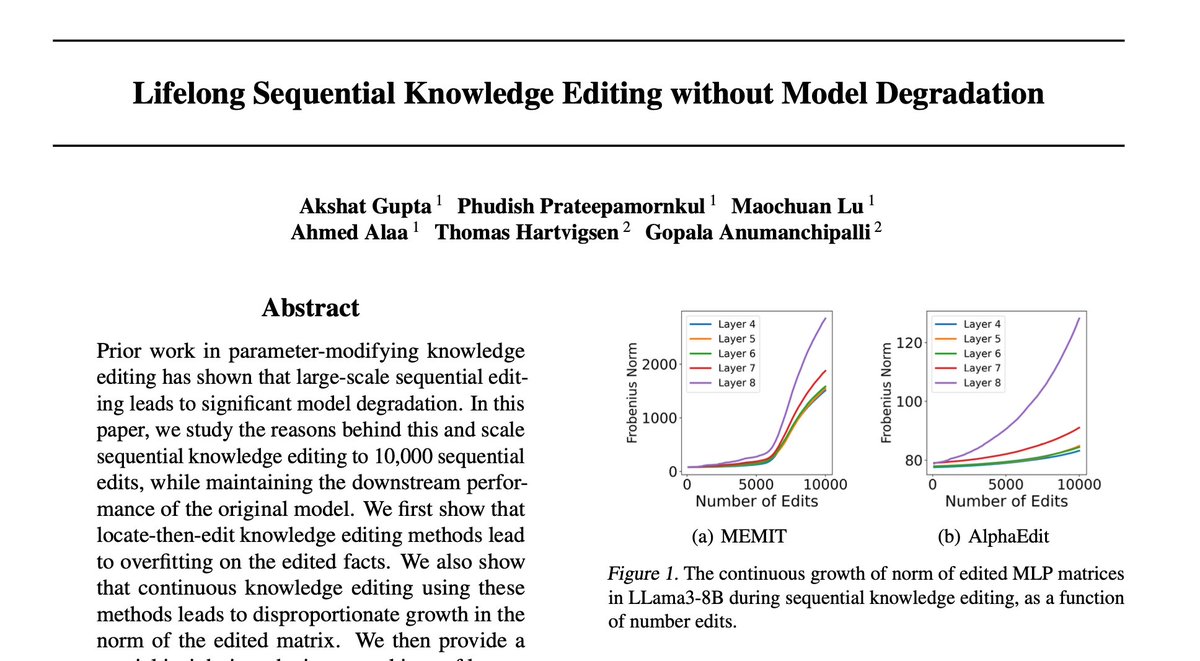

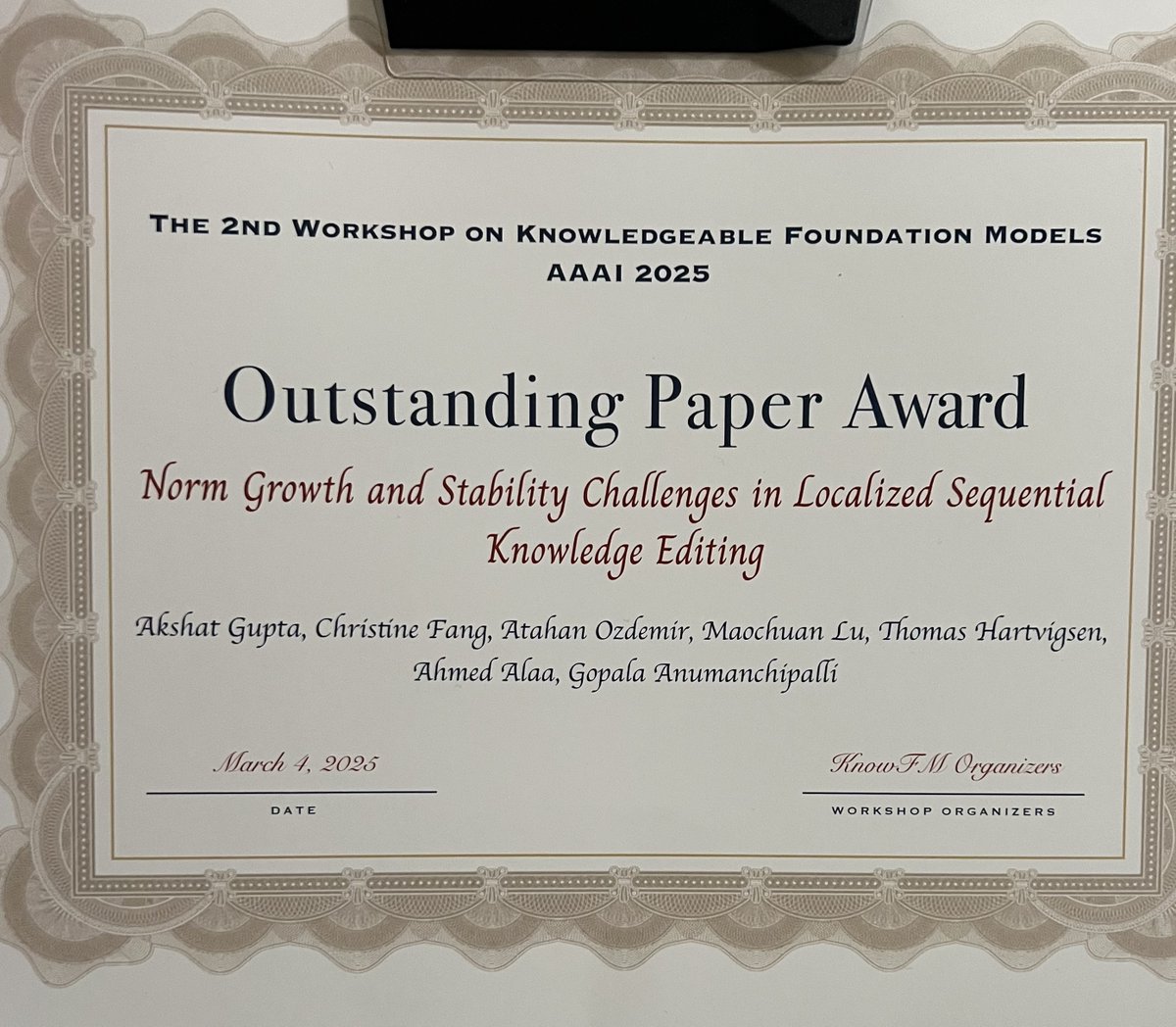

Thrilled to share that our paper on "Norm Growth and Stability Challenges in Sequential Knowledge Editing" has been accepted for an Oral Presentation at the KnowFM workshop @ #AAAI2025 w/ Tom Hartvigsen, Ahmed Alaa, and Gopala Anumanchipalli. More details below (1/n)

Our work on knowledge editing got an "Outstanding Paper Award" 🏆🏆 at the AAAI KnowFM Workshop!! #AAAI2025 🥳🥳🥳 Congratulations to my amazing co-authors Tom Hartvigsen, Ahmed Alaa, and Gopala Anumanchipalli

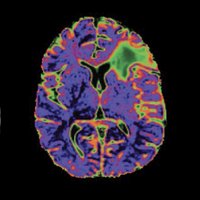

(1/n) Our latest work is out today in Nature Neuroscience! We developed a streaming “brain-to-voice” neuroprosthesis which restores naturalistic, fluent, intelligible speech to a person who has paralysis. nature.com/articles/s4159…

Today in Nature Neuroscience, @ChangLabUCSF and Berkeley Engineering’s Gopala Anumanchipalli show that their new AI-based method for decoding neural data synthesizes audible speech from neural data in real-time: l8r.it/G2KC

A paper in Nature Neuroscience presents a new device capable of translating speech activity in the brain into spoken words in real-time. This technology could help people with speech loss to regain their ability to communicate more fluently in real time. go.nature.com/444kW0k

Work led by BAIR students Kaylo Littlejohn and Cheol Jun Cho advised by BAIR faculty Gopala Anumanchipalli "...made it possible to synthesize brain signals into speech in close to real-time." dailycal.org/news/campus/re… via The Daily Californian

#ICLR25 Our work on characterizing alignment between MLP matrices in LLMs and Linear Associative Memories has been accepted for an Oral Presentation at the NFAM workshop. Location: Hall 4 #5. Time: 11 AM (April 27). Gopala Anumanchipalli, Berkeley AI Research

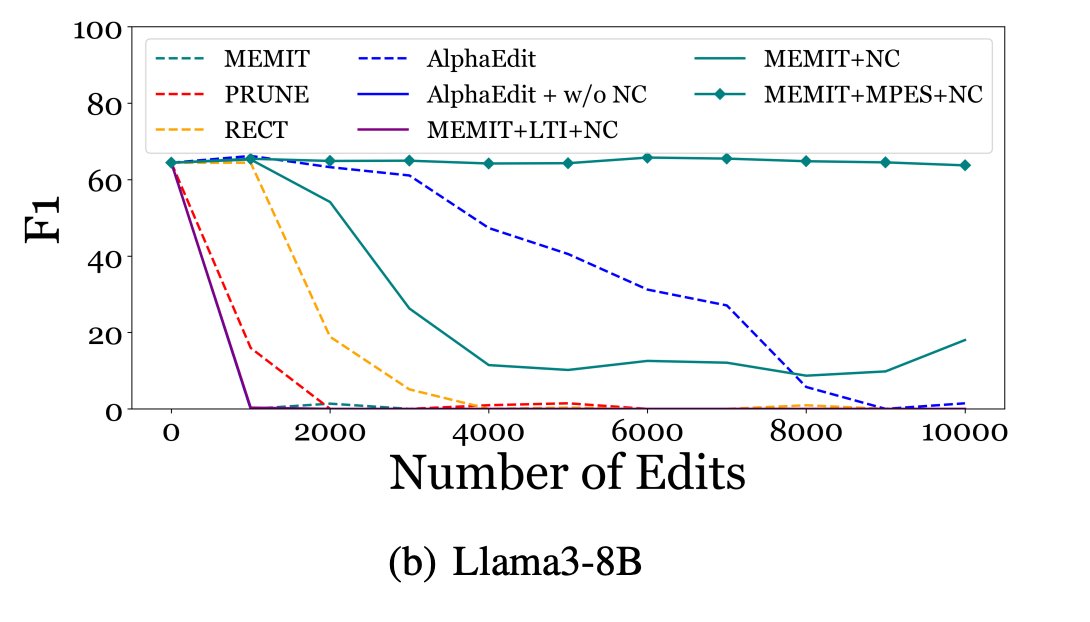

Just did a major revision to our paper on Lifelong Knowledge Editing! 🔍 Key takeaway (+ our new title): "Lifelong Knowledge Editing requires Better Regularization". Fixing this leads to consistent downstream performance! Tom Hartvigsen, Ahmed Alaa, Gopala Anumanchipalli, Berkeley AI Research