Georgios Pavlakos

@geopavlakos

Assistant Professor at UT Austin @UTCompSci | Working on Computer Vision and Machine Learning

ID: 1272527553652236288

https://geopavlakos.github.io/

15-06-2020 13:53:22

175 Tweets

2.2K Followers

267 Following

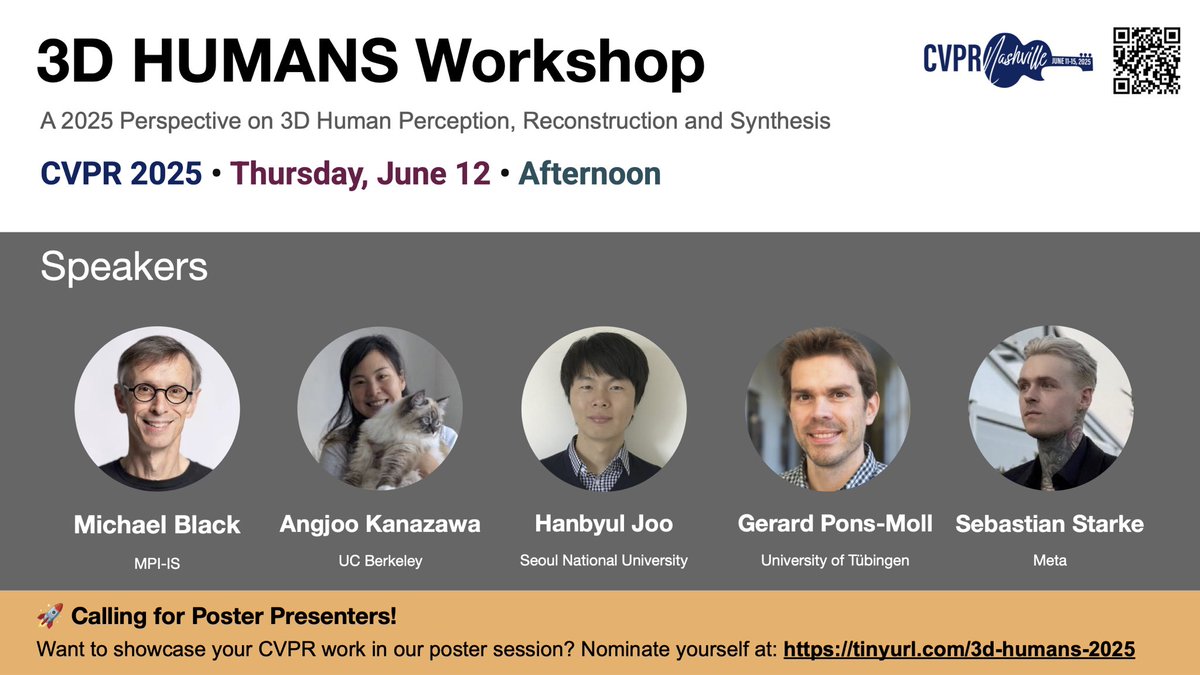

Thrilled to see a full room of attendees! Thank you all for joining our workshop! Special thanks to our amazing speakers: Siyu Tang @VLG-ETHZ, Xavier Puig, Jingyi Yu, Michael Black and Angjoo Kanazawa. And a big thank you to all the authors for their poster presentations!

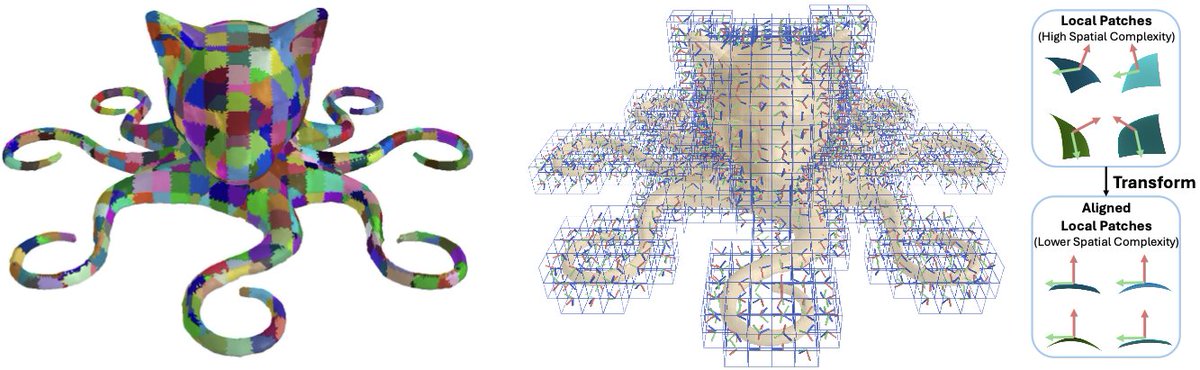

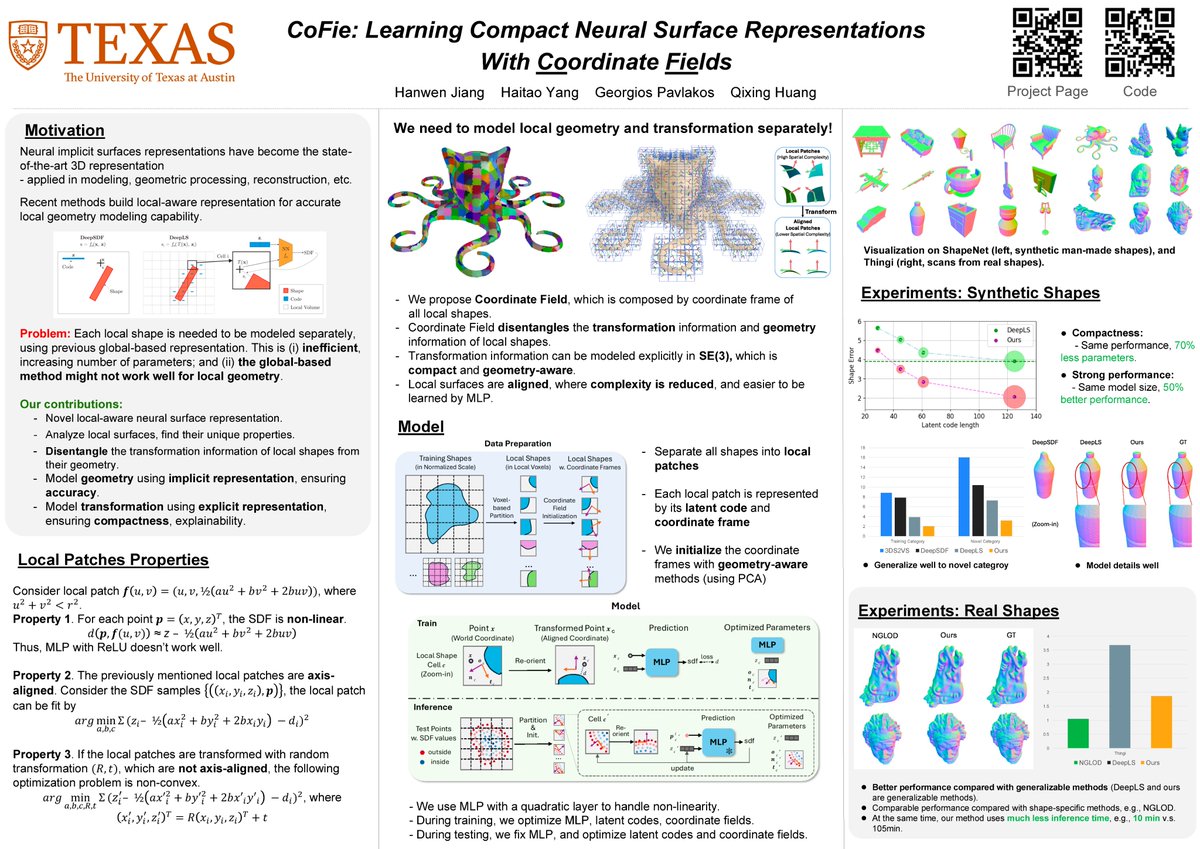

See our poster here! This is joint work with Haitao, Georgios Pavlakos and Qixing Huang.

I'm excited to present "Fillerbuster: Multi-View Scene Completion for Casual Captures"! This is joint work with my amazing collaborators Norman Müller, Yash Kant, Vasu Agrawal, Michael Zollhoefer, Angjoo Kanazawa and Christian Richardt, done during my internship at Meta Reality Labs. ethanweber.me/fillerbuster/

Make sure to check out Hanwen Jiang's latest work! 🚀 We introduce RayZer, a self-supervised model for novel view synthesis. We use zero 3D supervision, yet we outperform supervised methods! Some surprising and exciting results inside! 🔍🔥

🔍 3D is not just pixels: we care about geometry, physics, topology, and functions. But how do we balance these inductive biases with scalable learning? 👀 Join us at the Ind3D workshop #CVPR2025 (June 12, afternoon) for discussions on the future of 3D models! 🌐 ind3dworkshop.github.io/cvpr2025