Faeze Brahman

@faeze_brh

Postdoc @allen_ai @uw | Ph.D. from UCSC | Former Intern @MSFTResearch , @allen_ai | Researcher in #NLProc, #ML #AI

ID: 994736527421849602

https://fabrahman.github.io 11-05-2018 00:30:44

674 Tweets

1.1K Followers

1.1K Following

(((ل()(ل() 'yoav))))👾 yeah we had some debates about what models "lying" really means and whether to use those words; some related terms have been used before, which we discuss in the paper. I like the conclusion you reached, but agree with the fear of wrongly anthropomorphizing / attributing intent

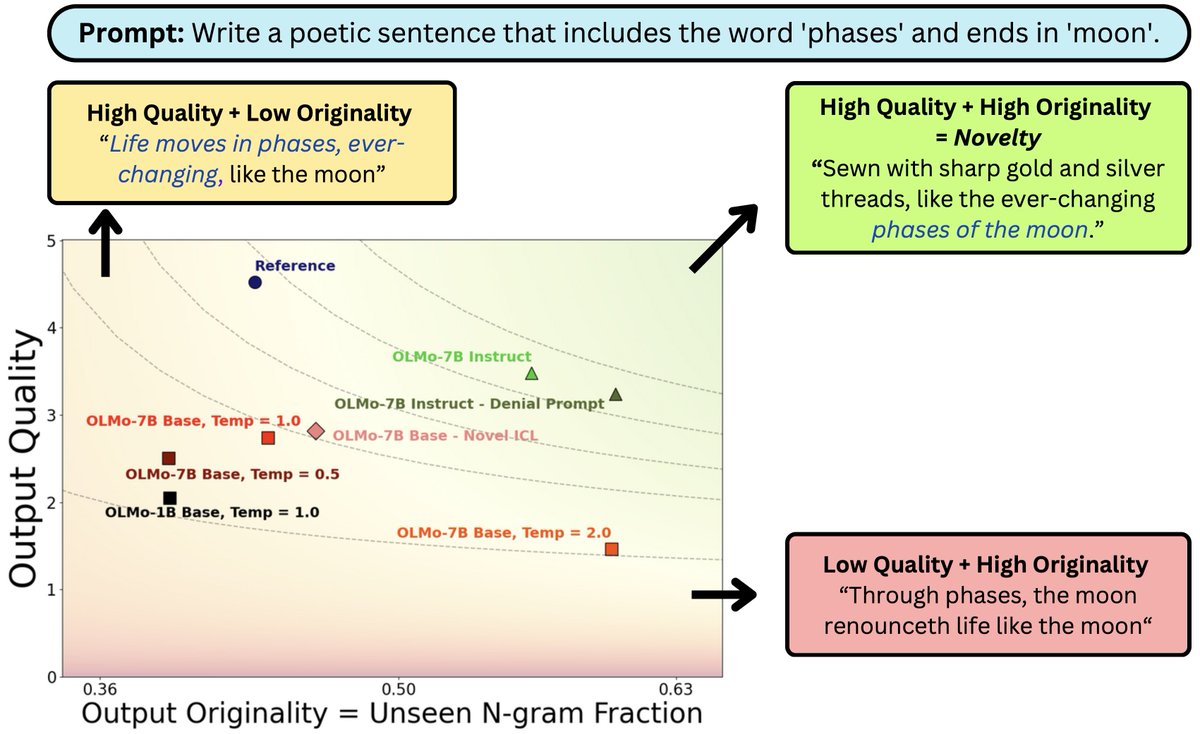

What does it mean for #LLM output to be novel? In work w/ John(Yueh-Han) Chen, Jane Pan, Valerie Chen, He He we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

Would you trust an AI that chooses deception over truth when faced with conflicting goals? 📅 Check out our poster at #NAACL2025 on April 30 @ 11am, poster session 1, presented by Xuhui Zhou and led by Zhe Su

Delighted to see the BigGen Bench paper receive the 🏆 best paper award 🏆 at NAACL HLT 2025! BigGen Bench introduces fine-grained, scalable, & human-aligned evaluations: 📈 77 challenging, diverse tasks 🛠️ 765 instances w/ ex-specific scoring rubrics 📋More human-aligned than