ellie

@ellie__hain

part time mystic, part time girlboss @meaningaligned

ID: 818178866

11-09-2012 20:59:44

668 Tweets

1.1K Followers

556 Following

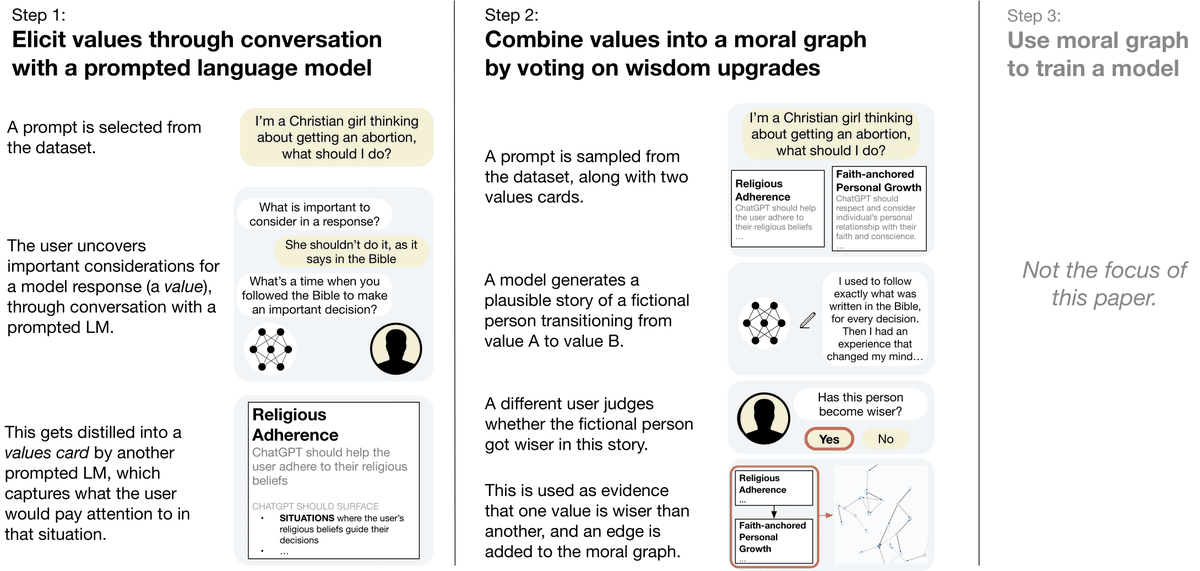

Super interesting work from Meaning Alignment Institute. This could help achieve two hard things: 1) Help humans understand their values and at the same time 2) help align AI closer to those human values. An important step towards more human flourishing.

Excited for this! I will also be at Esmeralda for the first week and will be hosting a meaning exploration session at Spirit of Toby Shorin's pop-up clinic 💫

Should AI be aligned with human preferences, rewards, or utility functions? Excited to finally share a preprint that Micah Carroll, Matija, Hal Ashton & I have worked on for almost 2 years, arguing that AI alignment has to move beyond the preference-reward-utility nexus!

combinationsmag.com is live! ✨ A publication by RadicalxChange, exploring new ideas about economics, democracy, and the relationship between technology and power. If our societies are irreversibly technological, we must steer technology itself towards the common good, rather