Rotem Dror

@drorrotem

Asst. Prof. of Natural Language Processing @UofHaifa

ID: 1063386168337858560

http://rtmdrr.github.io 16-11-2018 10:59:54

188 Tweets

313 Followers

246 Following

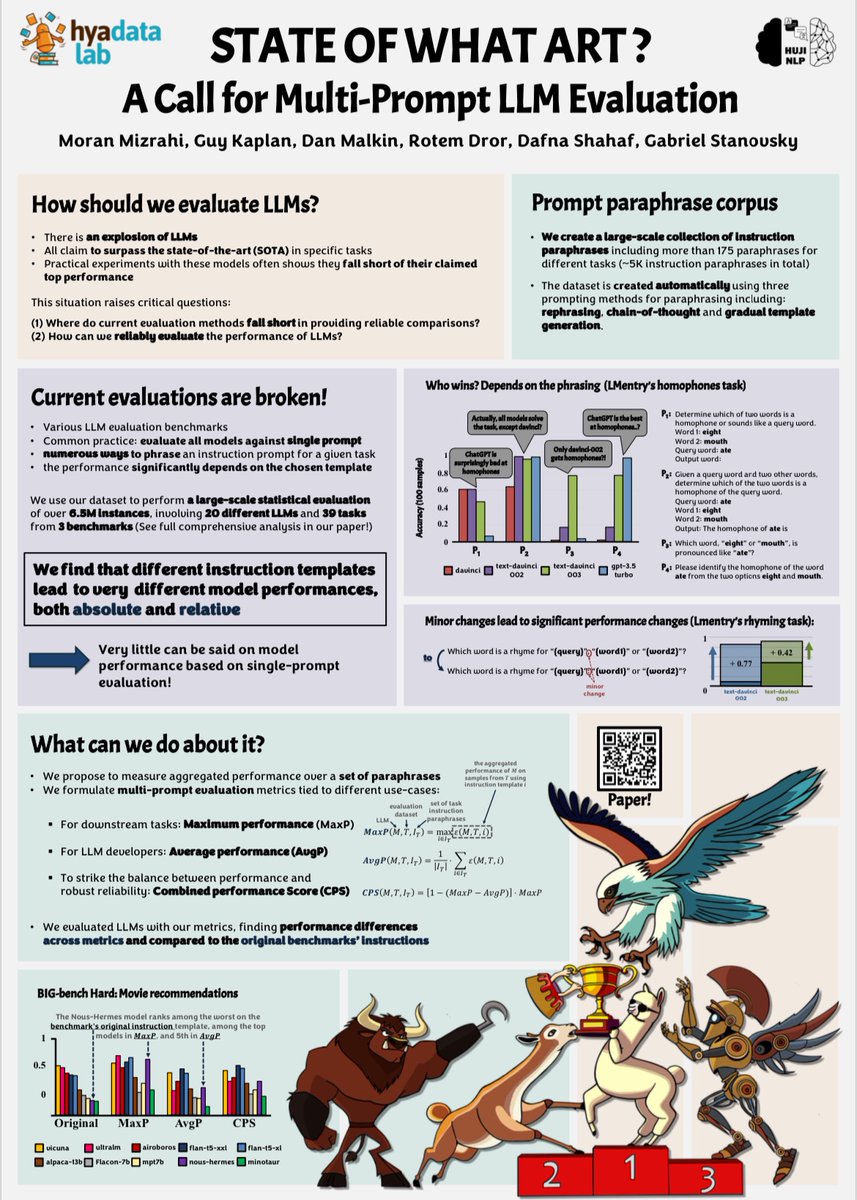

Excited to have presented our TACL paper about the sensitivity of LLMs to prompt paraphrases at #ACL2024! 📊✨ Guy Kaplan, Dan H.M 🎗, Rotem Dror, Hyadata Lab (Dafna Shahaf), Gabriel Stanovsky direct.mit.edu/tacl/article/d…

For a new and exciting research project about data, we need your help! 🤓📑💻 If your dataset meets these criteria, we would love to cite your research and analyze the data. Exciting results are guaranteed :) #DataScience #NLP #vision Journal of Data-centric Machine Learning Research

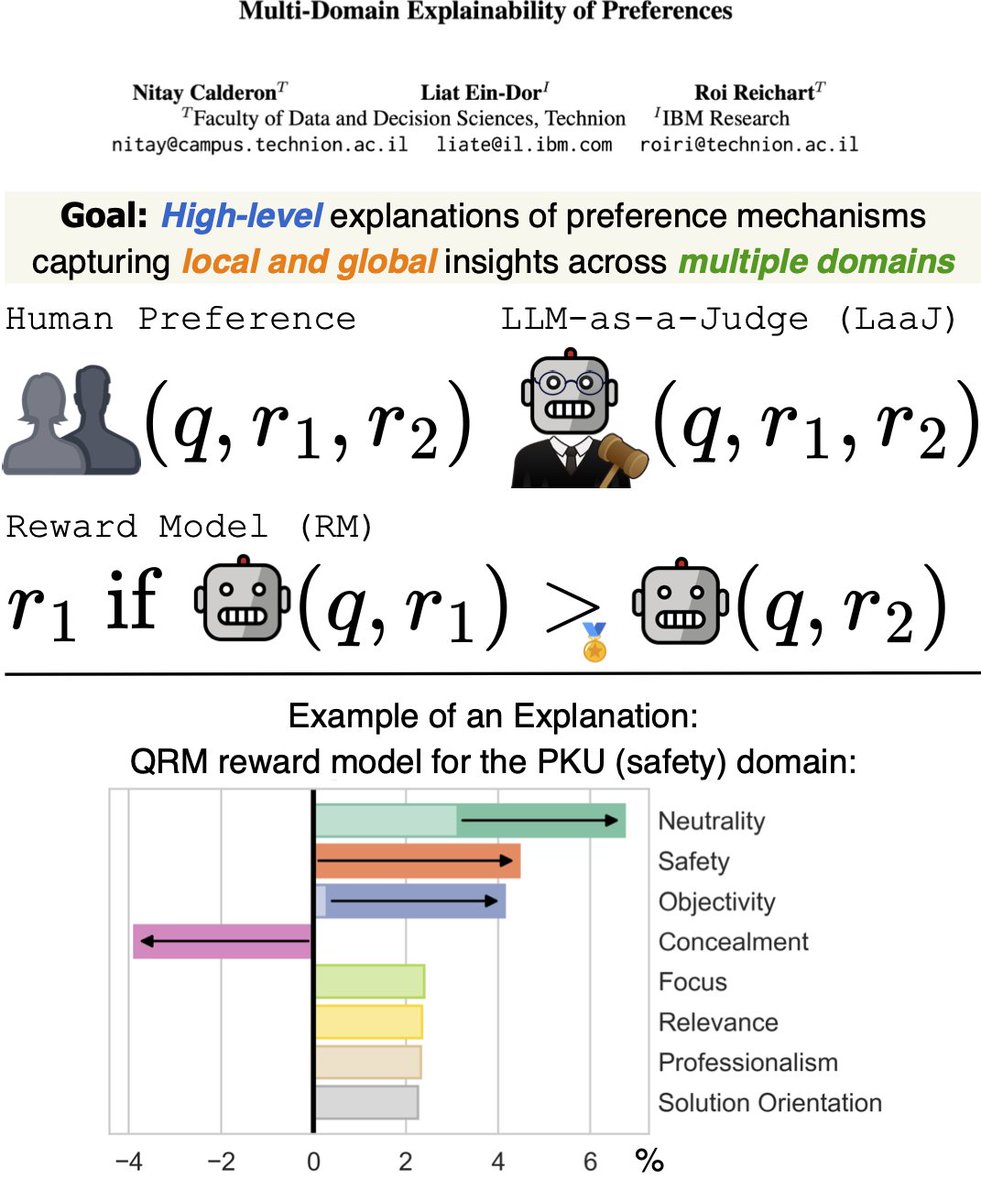

Do you think LLMs could win a Nobel Prize one day? 🤔 Can NLP predict heroin addiction outcomes, uncover suicide risks, or simulate brain activity? 🧠 Lotem Peled, Roi Reichart, and I wrote a blog post about NLP for Human-Centric Sciences 🤖👩‍🔬 👇👇👇 nitaytech.github.io/blog/2024/nlp4…

In our new preprint, Roi Reichart, Rotem Dror, and I propose a new statistical procedure: the Alternative Annotator Test (alt-test). The goal? To help researchers justify using LLMs over humans. If the LLM passes, its annotations can be confidently trusted 😎 arxiv.org/abs/2501.10970

Check out our new pre-print on a statistically sound methodology for verifying the quality of LLM-as-a-judge annotations. Nitay Calderon Rotem Dror

A few months ago I told you that I'm working on something awesome and that I need some datasets... well, here is the first output of that effort, and I sincerely think it's amazing 🤩 Check it out! Nitay Calderon Roi Reichart #llm-as-a-judge, #nlpevaluation

The Alternative Annotator Test (alt-test) is a new statistical procedure proposed in our ACL 2025 paper! 🇦🇹🇦🇹 Rotem Dror Roi Reichart The goal? To help justify using LLMs over humans. If the LLM passes the test, its annotations can be trusted 😎 arxiv.org/abs/2501.10970