Doyoung Kim

@doyoungkim_ml

Incoming CS PhD @NYU_Courant; Previously MS & BS @kaist_ai; General intelligence in Language ∪ Robotics

ID: 1548921016562229248

https://doyoungkim-ml.github.io/

18-07-2022 06:42:22

405 Tweets

313 Followers

529 Following

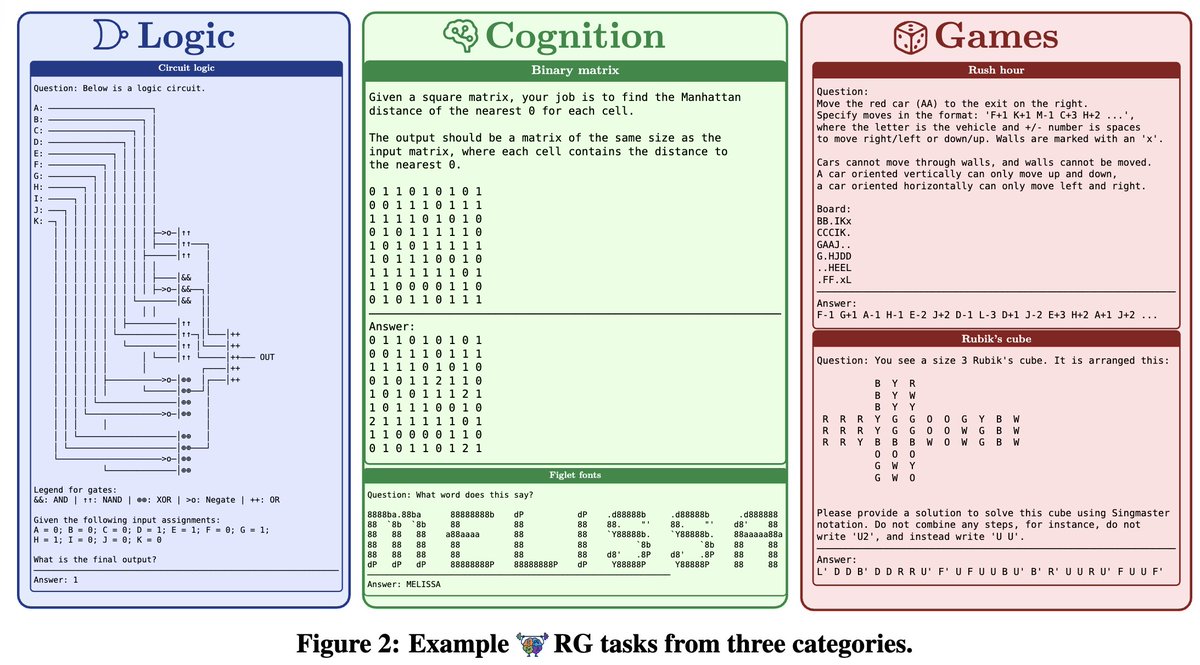

Here's how I (almost) reached the top scores on ARC-AGI-1 and ARC-AGI-2 (that honor goes to Jeremy Berman) while keeping the cost low. To put things into perspective: o3-preview scored 75.7% on ARC-AGI-1 last year while spending $200/task on its low setting. My approach scores 77.1% while spending