Li Dong

@donglixp

NLP Researcher at Microsoft Research

ID: 269696571

http://dong.li 21-03-2011 08:49:12

241 Tweets

4.4K Followers

3.3K Following

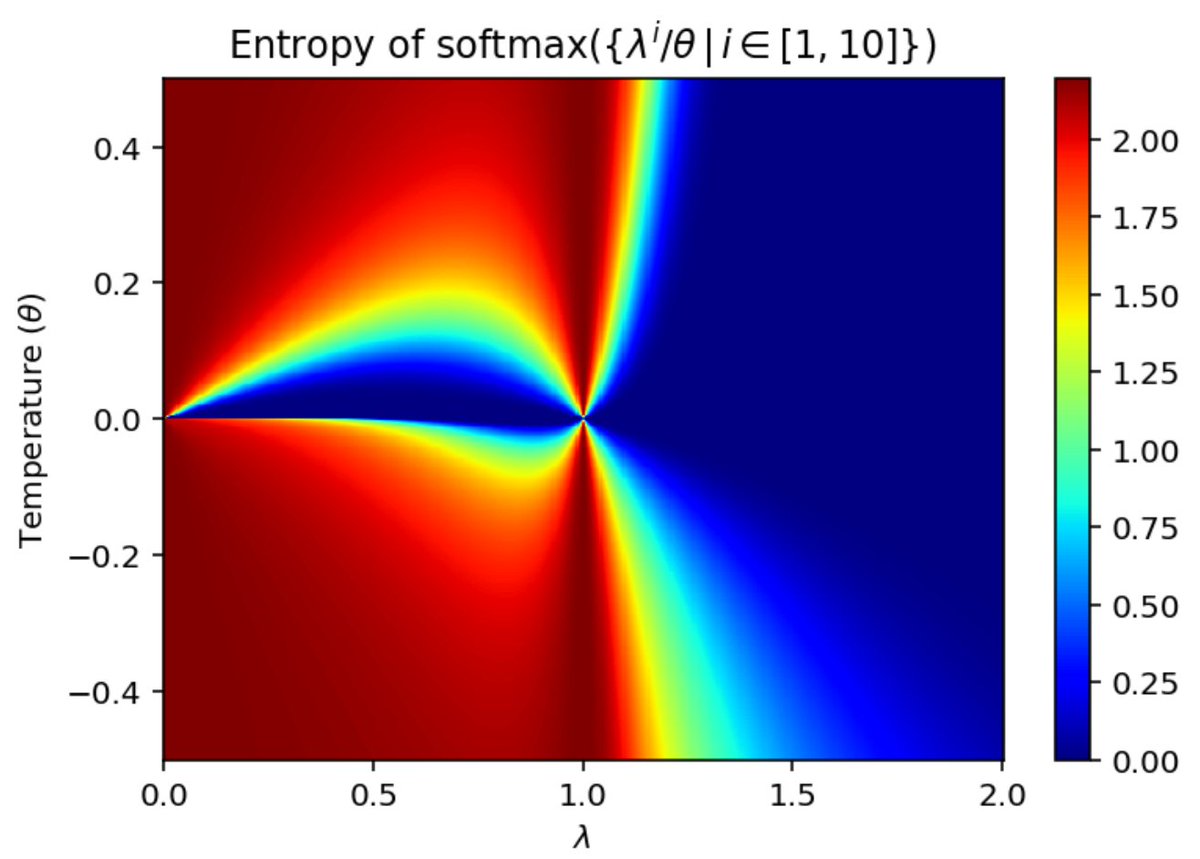

this Google DeepMind paper explores the limitations of the softmax function in artificial intelligence systems, particularly its inability to maintain sharpness in decision-making as the number of inputs increases. key findings: the softmax function cannot robustly approximate
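
To make the sharpness claim concrete, here is a minimal sketch. It is not taken from the paper. It assumes one "winning" logit sits a fixed gap above all the others, and it measures how much softmax weight that winner keeps as the number of inputs grows. The names `softmax` and `max_weight` and the gap of 5.0 are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of floats."""
    scaled = [x / temperature for x in logits]
    shift = max(scaled)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - shift) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def max_weight(n, gap=5.0):
    """Largest softmax weight when one logit is `gap` above n - 1 zeros.

    Assumption for illustration: the logits are bounded, so the gap
    between the winner and the rest does not grow with n.
    """
    logits = [gap] + [0.0] * (n - 1)
    return max(softmax(logits))


if __name__ == "__main__":
    # The winner's weight equals e^gap / (e^gap + n - 1), which shrinks
    # toward zero as n grows while the gap stays fixed.
    for n in (10, 100, 1_000, 10_000, 100_000):
        print(f"n={n:>7}  max softmax weight={max_weight(n):.4f}")
```

With the gap held fixed, the winner's weight is e^gap / (e^gap + n - 1), so it decays toward zero as n increases. Lowering `temperature` in `softmax` sharpens the output for any given n, but under this bounded-logit assumption no fixed temperature keeps it sharp for every n.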