Dang Nguyen

@dangnth97

PhD @CS_UCLA | Student Researcher @GoogleAI | IMO 2015 Silver

ID: 1635212781086711810

https://hsgser.github.io/ 13-03-2023 09:35:28

96 Tweets

273 Followers

1.1K Following

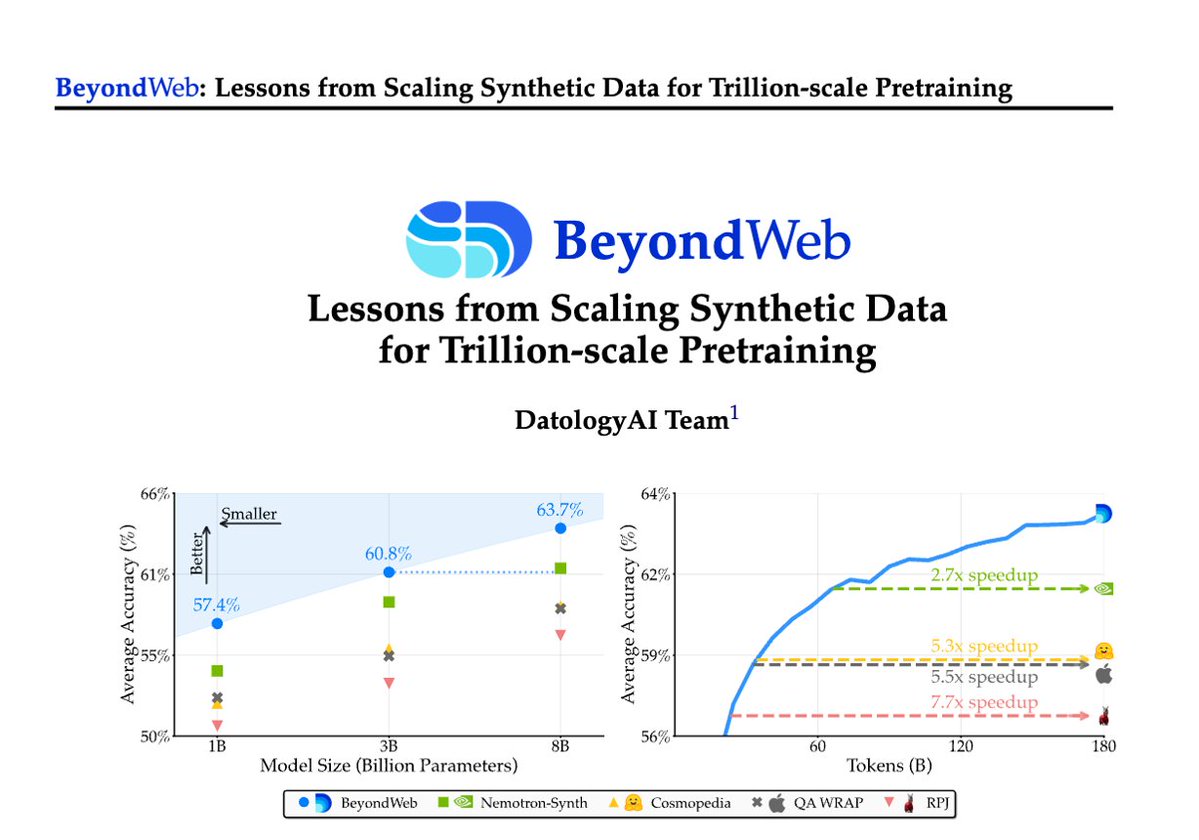

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens🧑🏼‍🍳
- 3B LLMs beat 8B models🚀
- Pareto frontier for performance