Damien Ferbach

@damien_ferbach

PhD at @Mila_Quebec / Previously maths at @ENS_ULM

ID: 1497811031703408642

https://damienferbach.github.io

27-02-2022 05:49:27

31 Tweets

169 Followers

145 Following

Great article on model collapse out in the New York Times! It features great work (Sina Alemohammad, Ilia Shumailov🦔, Zakhar Shumaylov, Nicolas Papernot, Quentin Bertrand, et al.) and some of ours with Elvis Dohmatob, Yunzhen Feng, François Charton, and Pu Yang 杨 埔. Kudos to Aatish for a super job! nytimes.com/interactive/20…

Some exciting news coverage of iterative retraining of generative models by the NYT! nytimes.com/interactive/20… The article covers several papers, including one we were excited to write with my co-authors from Mila - Institut québécois d'IA: Quentin Bertrand, Damien Ferbach, and Gauthier Gidel. Broad strokes:

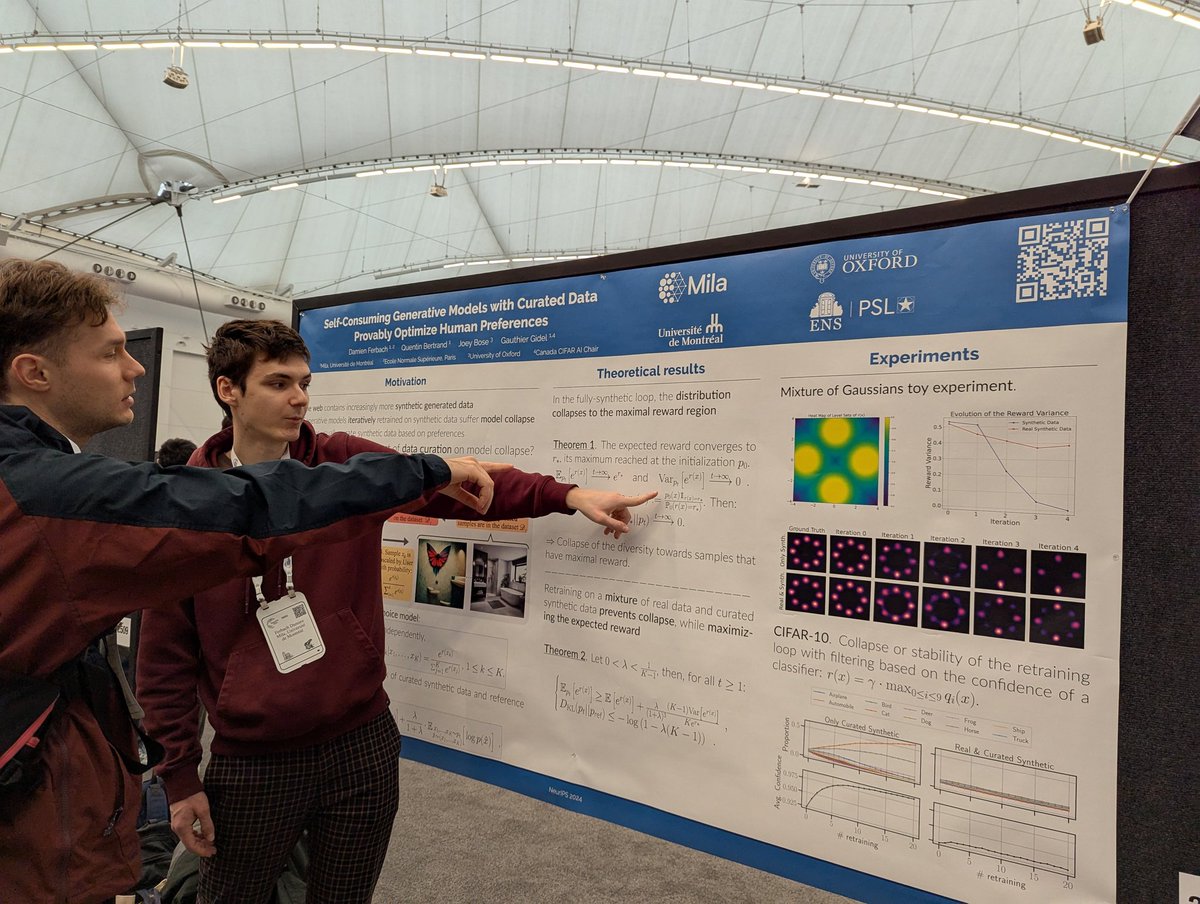

I am delighted to share that our paper has been accepted at #NeurIPS as a spotlight!🚀 A huge thanks to my amazing collaborators Quentin Bertrand and Joey Bose, and to my supervisor Gauthier Gidel!!

My group will be presenting a paper at NeurIPS on this topic: arxiv.org/pdf/2407.09499, led by Damien Ferbach with the amazing Quentin Bertrand and Joey Bose. 6/n

Come check out our spotlight poster in the East Ballroom (#2510), happening now with Damien Ferbach, Quentin Bertrand, and Gauthier Gidel! Especially come if you're interested in self-consuming generative models and model collapse!!

Come check out our poster now!!! Poster 2510, East Ballroom. Joey Bose, Gauthier Gidel, Quentin Bertrand, Mila - Institut québécois d'IA

A look at Damien Ferbach's spotlight poster presented at the NeurIPS Conference, "Self-Consuming Generative Models with Curated Data Provably Optimize Human Preferences":

In summary, while data can change the power law exponent significantly, it's quite challenging to find architectures and optimizers that change the exponent! If you want to see something that *does* change the power law exponent, stay tuned and follow Damien Ferbach for more 😁