Clara Isabel Meister

@clara__meister

PhD student in the ML Institute at ETH Zurich.

Still figuring out how Twitter works... 🤦♀️

ID: 1141006043218108419

http://cimeister.github.io 18-06-2019 15:33:34

102 Tweets

1.1K Followers

49 Following

"A Measure-Theoretic Characterization of Tight Language Models" Draft: arxiv.org/abs/2212.10502 By Leo Du (JHU) Lucas Torroba-Hennigen Tiago Pimentel Clara Isabel Meister Jason Eisner (JHU) @ryandcotterell TLDR; Formalizes LMs' distribution (i.e., whether generative process terminates with prob 1.)

"Tokenization and the Noiseless Channel" Draft: coming soon! By Vilém Zouhar Clara Isabel Meister Gianni Gastaldi @giannig.bsky.social Leo Du (JHU) Mrinmaya Sachan @ryandcotterell TLDR; Develops information-theoretic efficiency measures of subword tokenization alg + theoretical bounds for such measures.

1/8 Excited to share the result of my internship at Ai2 with Arman Cohan Iz Beltagy Matthew Peters James Henderson Hamish Ivison Jake Tae. We propose TESS: a Text-to-text Self-conditioned Simplex Diffusion model arxiv.org/abs/2305.08379

Has a language’s word order been affected by a pressure for uniform information density? We investigate this Q in our upcoming TACL paper 🧵 with Clara Isabel Meister Tiago Pimentel Michael Hahn @ryandcotterell Richard Futrell Roger Levy arxiv.org/abs/2306.03734

Computational psycholinguists and friends take note: Marten van Schijndel and I are co-editing, under the aegis of @AdrianBStaub, a special issue of JML on language models and psycholinguistics! Call: sciencedirect.com/journal/journa…. I'm happy to chat about this in Toronto at #ACL2023NLP!

Come to our ACL tutorial tomorrow at 14h on generating text from language models! Material will be online here: rycolab.io/classes/acl-20… w/ Tiago Pimentel Afra Amini John Hewitt Luca Malagutti @ryandcotterell

🚨🚨New Paper Announcement (to appear in TACL) 📜 from me, Tiago Pimentel, Clara Isabel Meister, @ryandcotterell and Roger Levy: Testing the Predictions of Surprisal Theory in 11 Languages arxiv.org/abs/2307.03667 🌎

If you're at #ACL2023NLP, stop by the poster session tomorrow @ 16h for our paper "On the Efficacy of Sampling Adapters"! Hope to see some of you there :) arxiv.org/pdf/2307.03749… Tiago Pimentel Luca Malagutti Ethan Gotlieb Wilcox @ryandcotterell

We have an absolutely stellar meetup coming up this October 19th @ 6:00 PM! Jonas Pfeiffer from Google DeepMind will be presenting followed by Eiso Kant co-founder and CTO of poolside. Last meetup we ran out of spots! RSVP: zurich-nlp.ch/event/zurich-n…

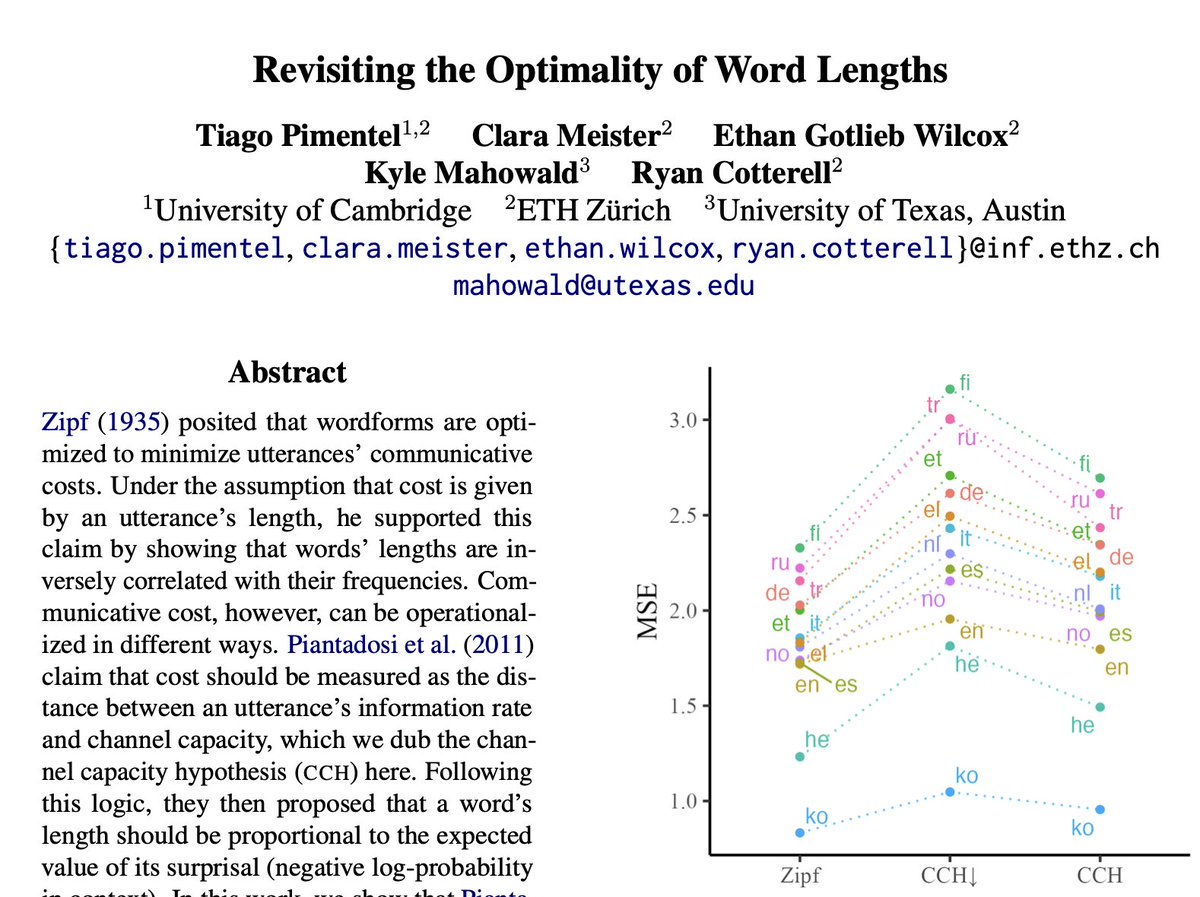

Thank you to #EMNLP2023 chairs for the 😱 two 😱 outstanding paper awards! I am so grateful to have worked on these projects with wonderful colleagues — Tiago Pimentel (who is the first author on one of the papers!), Clara Isabel Meister, Kyle Mahowald and @ryandcotterell

🔔🌟 New Preprint Alert 🔔🌟 “An Information-Theoretic Analysis of Targeted Regressions during Reading” with Tiago Pimentel, Clara Isabel Meister, @ryandcotterell - Psycholinguistics 🧠 Computational Modeling 🤖 Crosslinguistic Studies 🌍 Information Theory 📡 osf.io/preprints/psya…

Happy to share our #ACL2024 paper: "Causal Estimation of Memorisation Profiles" 🎉 Drawing from econometrics, we propose a principled and efficient method to estimate memorisation using only observational data! See 🧵 +Clara Isabel Meister, Thomas Hofmann, Andreas Vlachos, Tiago Pimentel

Hey #NLProc and #psycholing Twitter :) We found a bug in how we're all computing contextual word probabilities and wrote a paper about it! It's a very easy fix, so please check it out! +Clara Isabel Meister

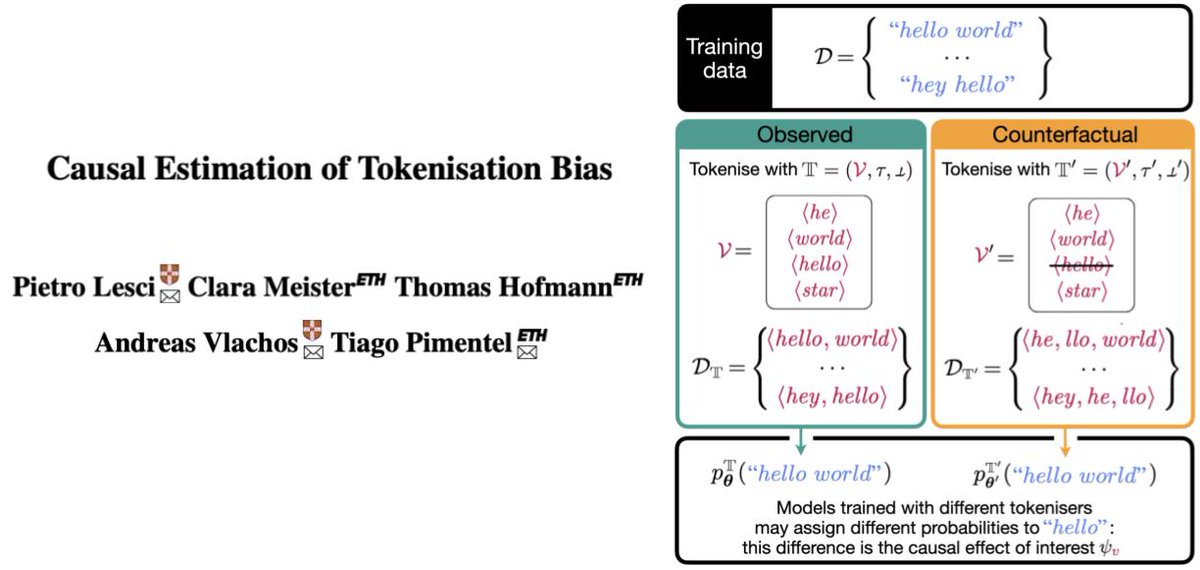

Super excited and grateful that our paper received the best paper award at #ACL2024 🎉 Huge thanks to my fantastic co-authors — Clara Isabel Meister, Thomas Hofmann, Andreas Vlachos, and Tiago Pimentel — the reviewers that recommended our paper, and the award committee #ACL2024NLP