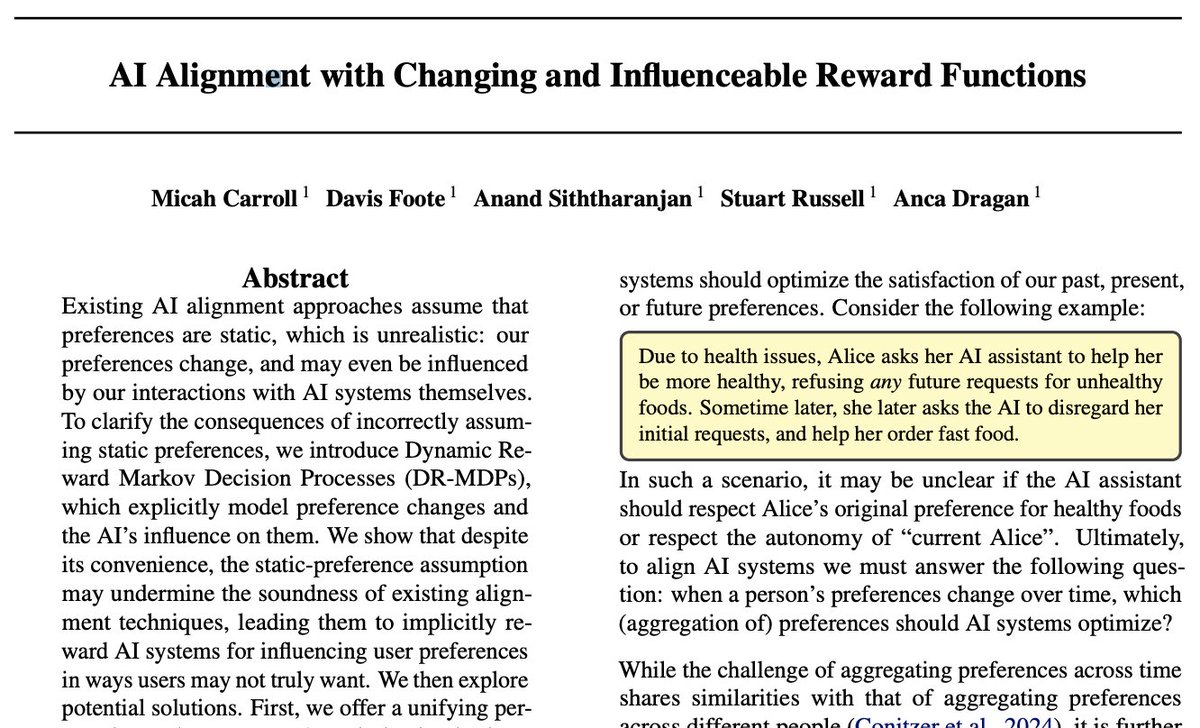

Center for Human-Compatible AI

@chai_berkeley

CHAI is a multi-institute research organization based out of UC Berkeley that focuses on foundational research for AI technical safety.

ID: 1058055843466244096

http://humancompatible.ai

01-11-2018 17:59:05

200 Tweets

3.3K Followers

109 Following

Center for Human-Compatible AI applications for 2025 close in just over a day! ⏰‼️ Apply now! Details below: