Changyu Chen

@cameron_chann

PhD student @sgSMU. RL x LLMs. Previously @NTUsg, @ZJU_China

Post-training for Sailor2

ID: 1266549323581411328

https://cameron-chen.github.io/

30-05-2020 01:58:14

95 Tweets

223 Followers

218 Following

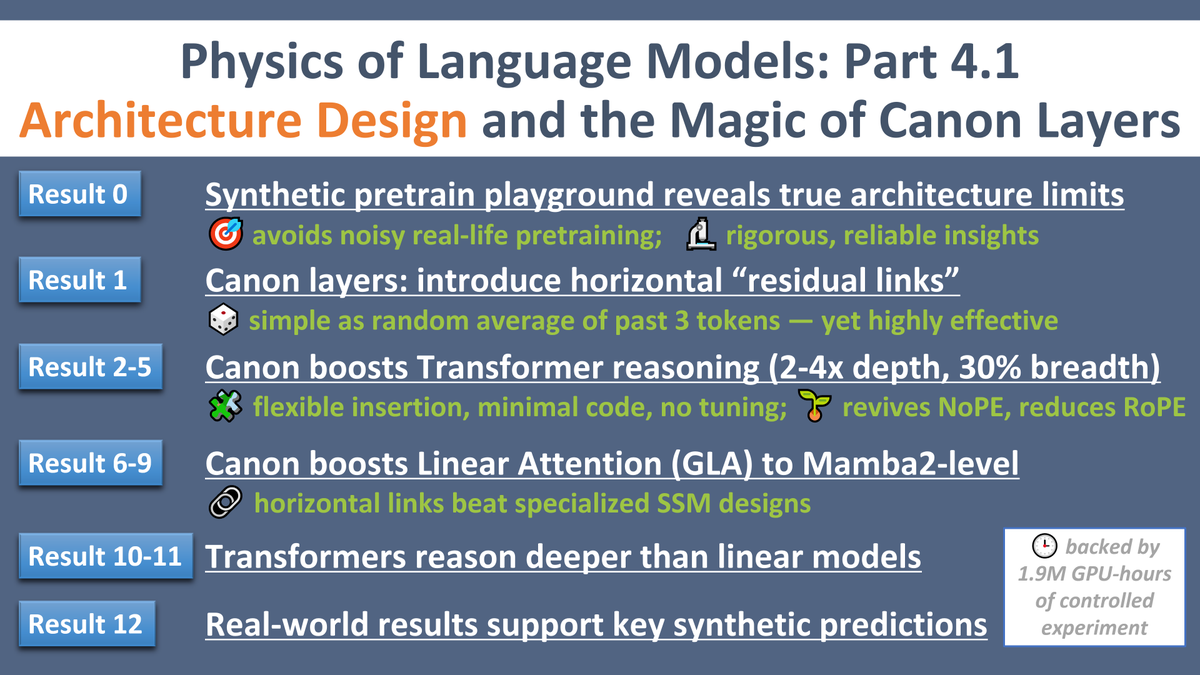

🚨 RL x LLM folks at #ICLR2025 — come join us during the Friday lunch break! If you haven’t RSVP’d on Whova, you can also register here: lu.ma/s8udv997?tk=B4… Bo Liu (Benjamin Liu) and I will scout for a chill spot (likely a corner at the venue) and share the location tomorrow.