Sanyam Bhutani

@bhutanisanyam1

👨‍💻 Sr Data Scientist @h2oai | Previously: @weights_biases 🎙 Podcast Host @ctdsshow 👨‍🎓 International Fellow @fastdotai 🎲 Grandmaster @Kaggle

ID:784597005825871876

https://www.youtube.com/c/chaitimedatascience 08-10-2016 03:31:18

7,9K Tweets

34,5K Followers

994 Following

RAG vs. Fine-Tuning debates drive me nuts. They are not fungible replacements for each other. Dan Becker and I are teaching a free lightning course on the topic

maven.com/p/787a2f/rag-a…

A few weeks ago, I had an incredible conversation with Jeremy Howard

We chatted all things AnswerAI (his new startup), FastAI, and more. I hope you enjoy this as much as I did 🫶

youtu.be/OujUZnXf4J0?si…

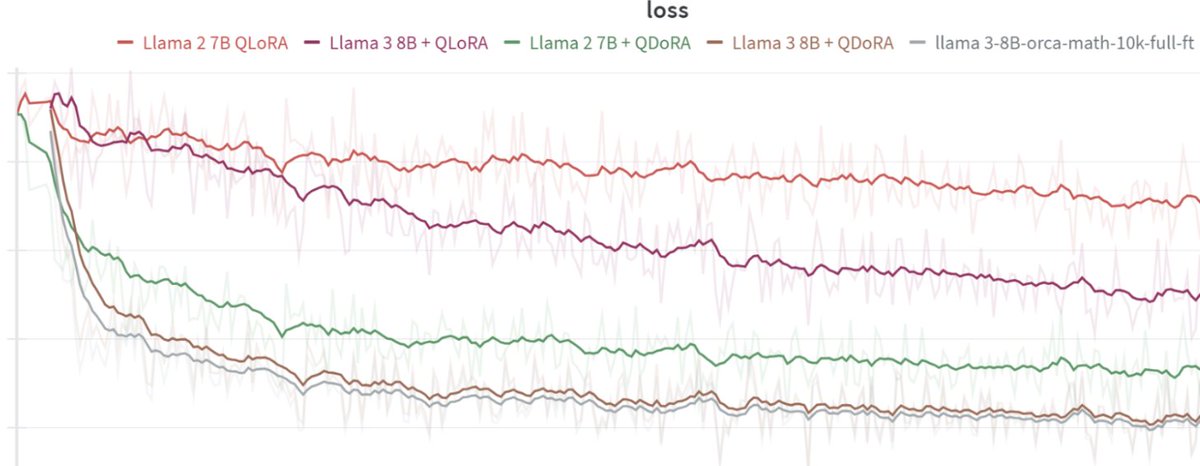

There are a growing number of voices expressing disillusionment with fine-tuning.

I'm curious about the sentiment more generally. (I am withholding sharing my opinion rn).

Tweets below are from Emmanuel Ameisen, anton, and Ethan Mollick

The incredible Radek Osmulski 🇺🇦 was kind enough to interview me 🙏

We talked about:

- My journey into programming

- How fastai changed my life

- Why I started my podcast series

- My approach to goal setting

- Reading LLM papers

Thanks again Radek!

m.youtube.com/watch?v=JrwO7f…

Set your goal at 2x, where x is the amount you can comfortably achieve in a week.

E.g., aim to read papers for 2 hours a day if you can comfortably read for 1 hour now.

- Sanyam Bhutani said this during an interview with Radek.

youtu.be/JrwO7f2C__U?si…

After watching the video

youtu.be/haRCu4hoI2A?si…

I have started reading this book.

I will be posting some ideas from this book.

Thanks Sanyam Bhutani and Radek Osmulski 🇺🇦

Last week, Radek Osmulski 🇺🇦 and I got to hang out with the CEO of Answer.ai 🙏

Jeremy Howard has always been very kind to us with his time

Also, he owns a large beach in Brisbane so that makes it an awesome trip 😁

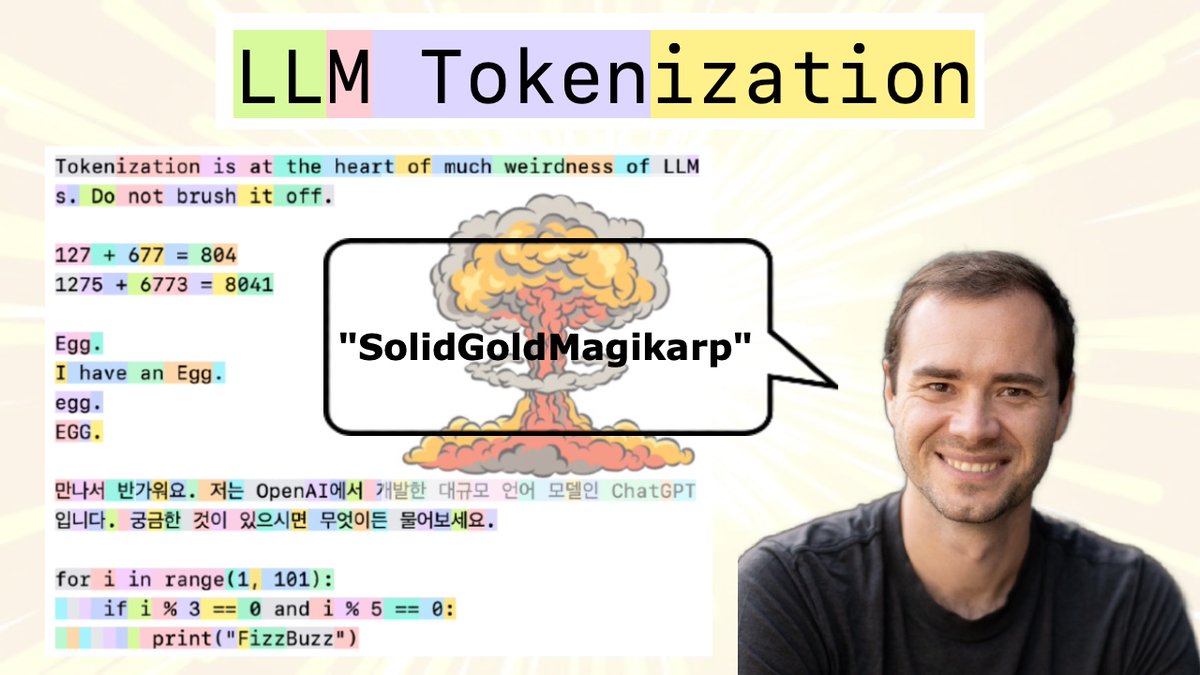

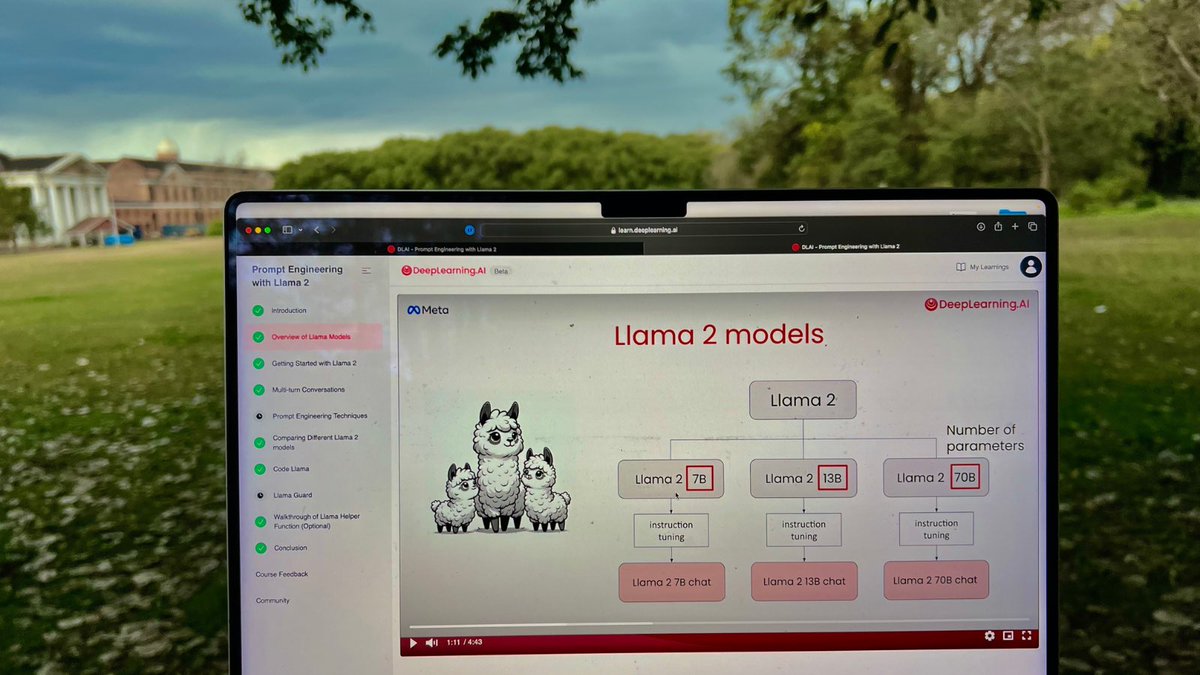

A perfect intro to open source LLMs! 🙏

The course by Amit Sangani is now my top recommendation for getting started with Large Language Models:

- Just enough theory for a whole picture

- Teaches prompting, special tokens and conversational agents

- Perfectly abstracts the…

After 3 years of learning, being mentored and building with this legend

I finally met Aman Arora in person! 🙏