Anish Shah

@ash0ts

Making ML Make Sense @ wandb.ai 👾

ID: 1461369766455787523

18-11-2021 16:24:56

62 Tweets

136 Followers

270 Following

Evaluated Llama 3.1 70B (Fireworks AI) as well, and it performs at the same level as Mistral Large 2 123B.
- Llama outperforms on GSM8k (math), while Mistral outperforms on the MATH benchmark. 😅
- Llama > Mistral (reasoning tasks)
- Mistral > Llama (Q&A tasks)

I will be talking tomorrow on:
- LLM landscape
- Need for structured output: function calling, JSON, constrained decoding, and more
- RAG
- LLM system evaluation
- Weights & Biases Weave for building LLM applications correctly

If it excites you, consider showing up. 💫⭐🌟

Join us on September 10 to learn how to build production-ready RAG systems. Learn from Anish Shah about optimizing pipelines, enhancing queries, and scaling solutions for real-world applications. Ideal for tech leads and product managers driving AI innovation. Register now:

⚡️ AI Hacker Cup Lightning Comp
Today we're kicking off a ⚡️ 7-day competition to solve all 5 of the 2023 practice Hacker Cup challenges with Mistral AI models.
Our current baseline is 2/5 with the starter RAG agent (with reflection).
Mistral AI API access provided.
Details 👇

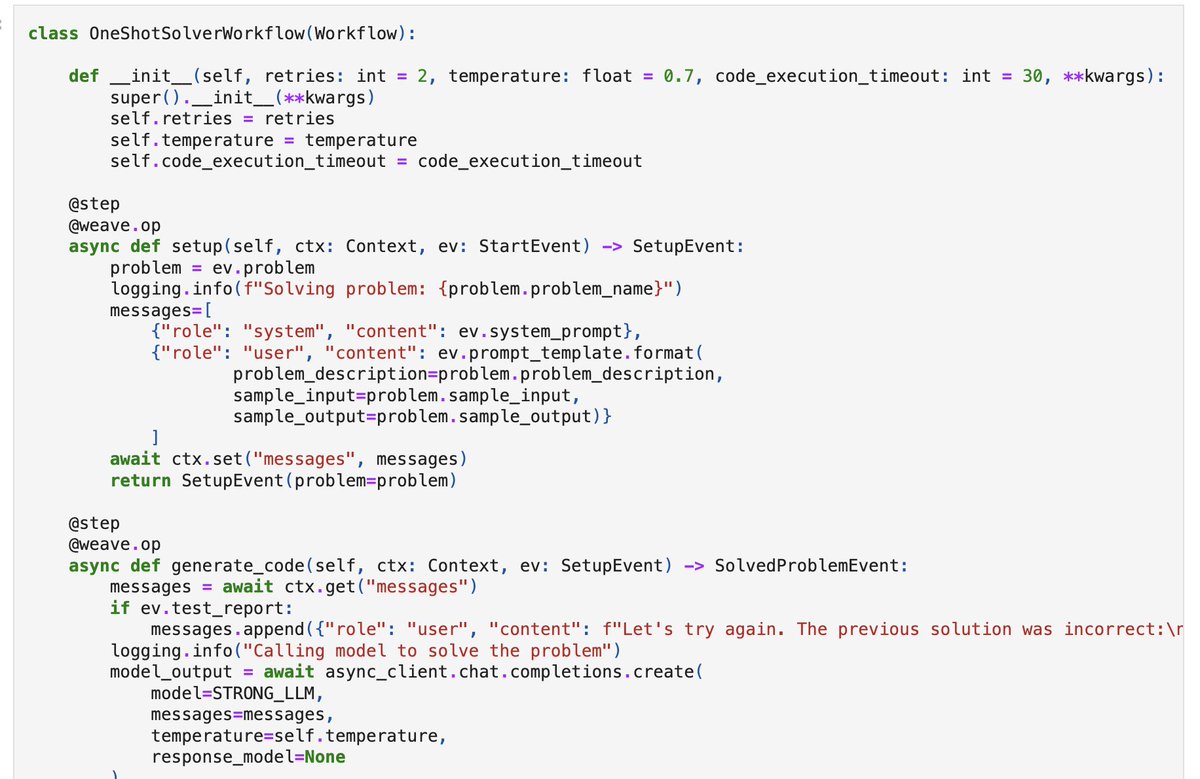

Today I gave LlamaIndex 🦙's workflows a go to tackle the NeurIPS AI Hacker Cup competition. I created a Workflow to iterate from an initial solution, run the generated solution, and check against the expected output. It is effortless to define steps with the expected inputs and
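The loop that tweet describes (generate a solution, run it, compare against the expected output, retry) can be sketched in plain Python. This is only an illustration under stated assumptions, not the author's Workflow and not the LlamaIndex API: `run_solution`, `iterate_solution`, and `stub_generate` are hypothetical names, and a stub stands in for the LLM call.

```python
from typing import Callable, Optional


def run_solution(source: str, problem_input: str) -> str:
    """Execute candidate source that defines solve(text) and return its output as a string."""
    namespace: dict = {}
    # Assumption: candidates are trusted. Real generated code should run in a sandbox.
    exec(source, namespace)
    return str(namespace["solve"](problem_input))


def iterate_solution(
    generate: Callable[[Optional[str]], str],
    problem_input: str,
    expected_output: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Generate, run, and check candidates; return the first passing source or None."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        source = generate(feedback)
        try:
            actual = run_solution(source, problem_input)
        except Exception as exc:
            # Feed the failure back to the generator for the next attempt.
            feedback = f"attempt {attempt} raised {exc!r}"
            continue
        if actual.strip() == expected_output.strip():
            return source
        feedback = f"attempt {attempt}: expected {expected_output!r}, got {actual!r}"
    return None


def stub_generate(feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: wrong on the first try, correct once it gets feedback."""
    if feedback is None:
        return "def solve(text):\n    return sum(int(x) for x in text.split()) + 1\n"
    return "def solve(text):\n    return sum(int(x) for x in text.split())\n"


result = iterate_solution(stub_generate, "1 2 3", "6")
```

Passing the failure message back into `generate` is what makes this an iteration rather than independent retries; in a real agent that message would become part of the next prompt.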

Defining your grading criteria for LLM outputs is an organic process that evolves the more time you spend on it.
In “Who Validates the Validators”, Shreya Shankar et al. highlight this.
This weekend in our SF office, a good chunk of hackers will learn this 😃
lu.ma/judge