Aryan

@aryanvs_

latent explorer @huggingface

ID: 1722556084462714880

09-11-2023 10:06:04

500 Tweets

758 Followers

1.1K Following

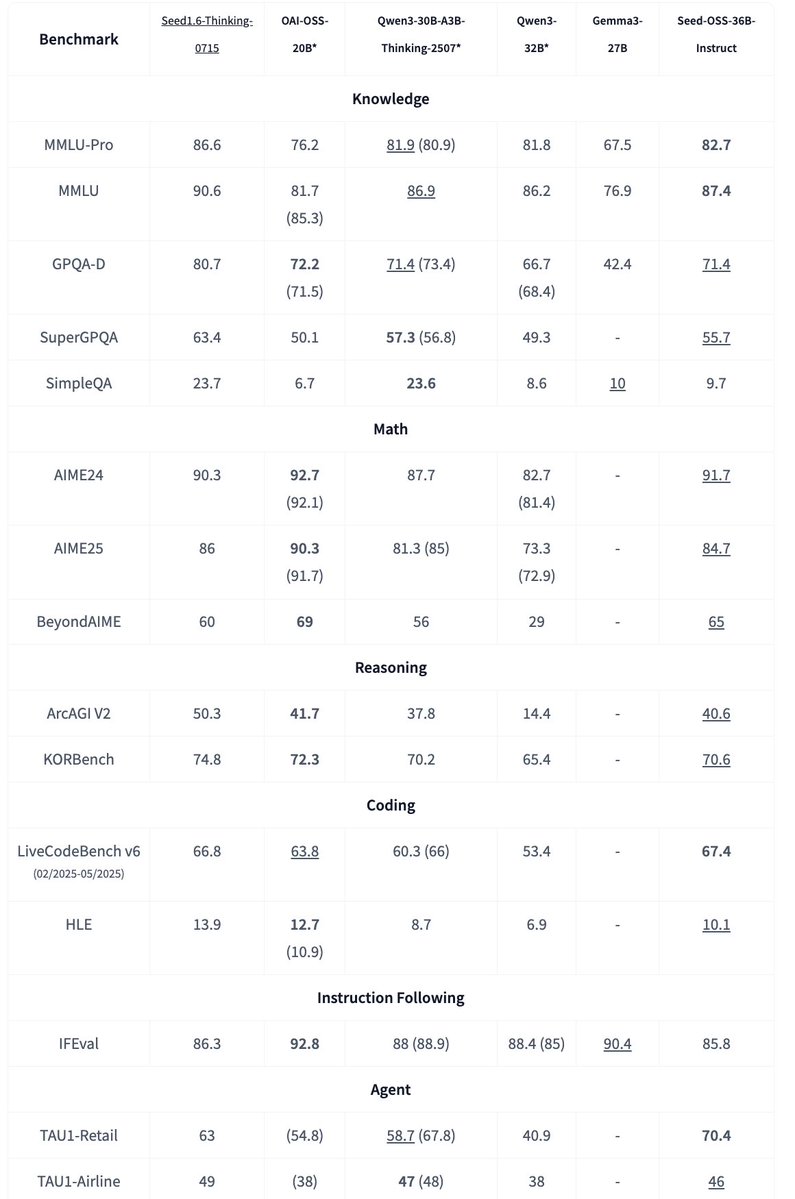

ByteDance Seed OSS is now available on Hugging Face!!!

- 36B parameters, Apache 2.0 license
- Great for ablation research: includes Base, Base without synthetic instruction data, and Instruct variants
- Strong performance on reasoning and agent tasks
- Native 512K context length
- Flexible thinking budget