Arnaud Autef

@arnaud_autef

Research Engineer @GoogleDeepMind working on Gemini Flash⚡

ID: 1169004884982730753

03-09-2019 21:51:09

748 Tweets

240 Followers

346 Following

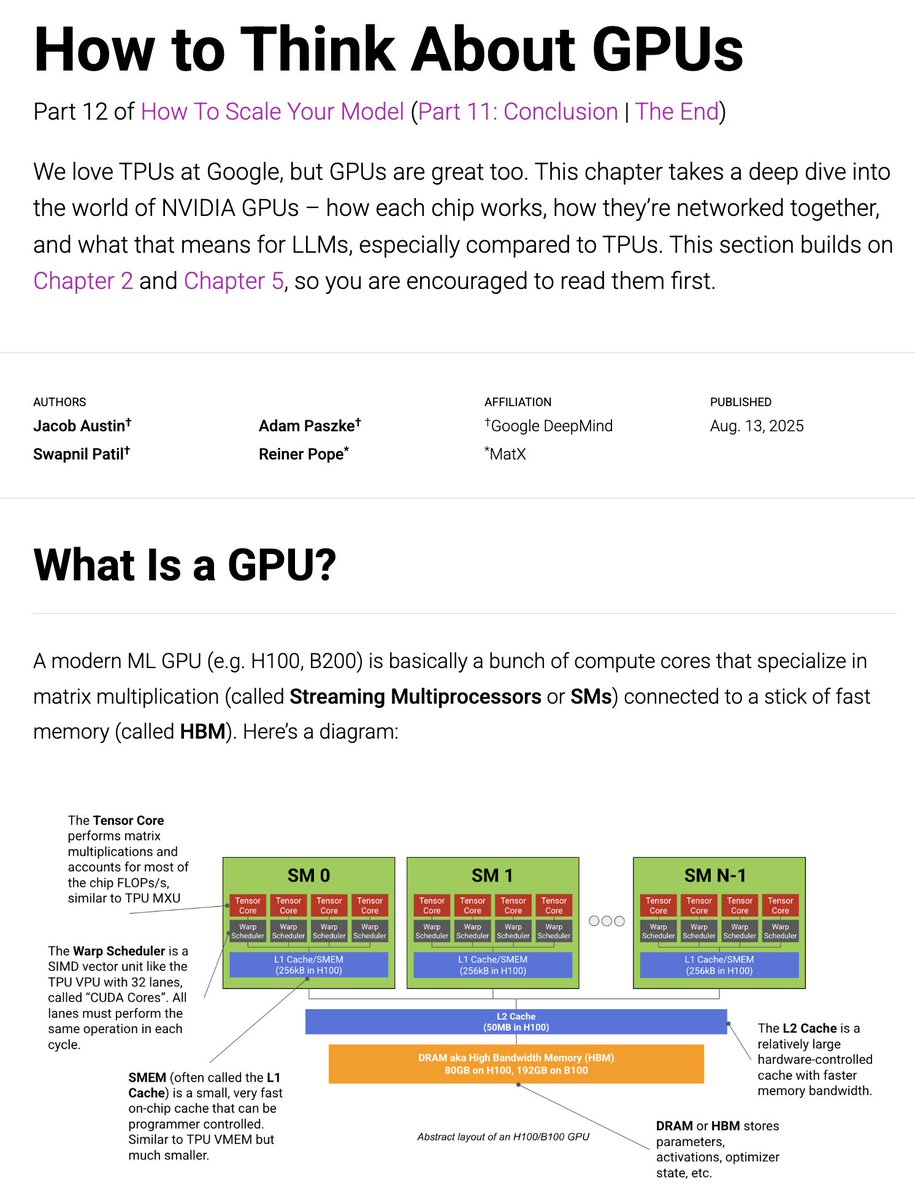

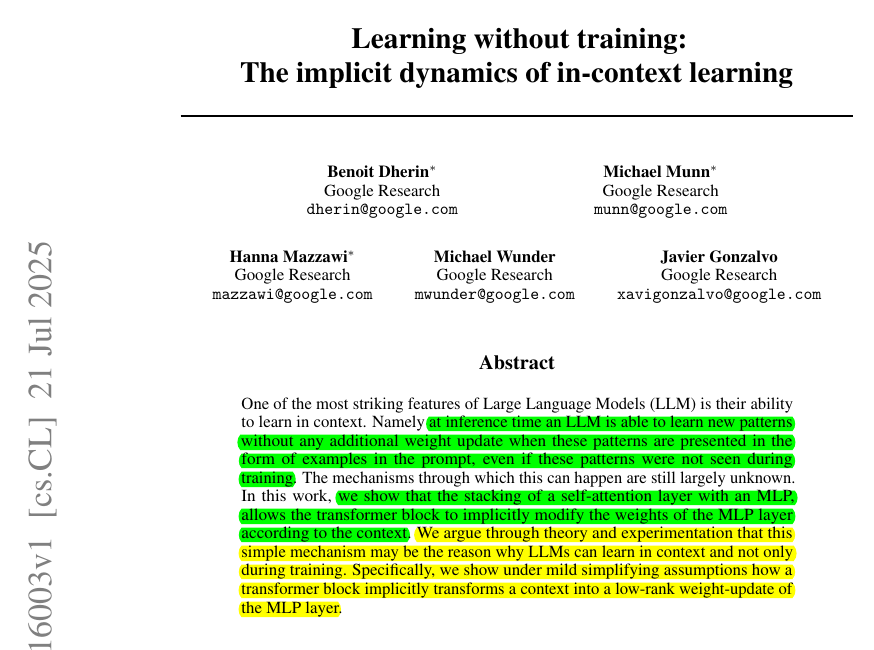

Beautiful Google Research paper. LLMs can learn in context from examples in the prompt, can pick up new patterns while answering, yet their stored weights never change. That behavior looks impossible if learning always means gradient descent. The mechanisms through which this

New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M-parameter neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs. Blog: alexiajm.github.io/2025/09/29/tin… Code: github.com/SamsungSAILMon… Paper: arxiv.org/abs/2510.04871