Argilla

@argilla_io

Making AI data go brrrr (acquired by 🤗 Hugging Face)

ID: 1432630844720562177

https://github.com/argilla-io 31-08-2021 09:06:42

1.1K Tweets

4.4K Followers

39 Following

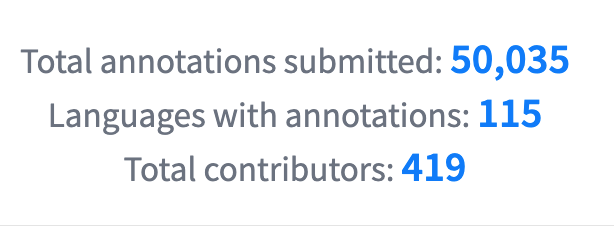

How Fine is FineWeb2? The community has evaluated the educational quality of over 1,000 examples from FineWeb2 across 15 languages (and counting!). tl;dr: The Hugging Face community is amazing, and there's already sufficient data to start building. More in 🧵

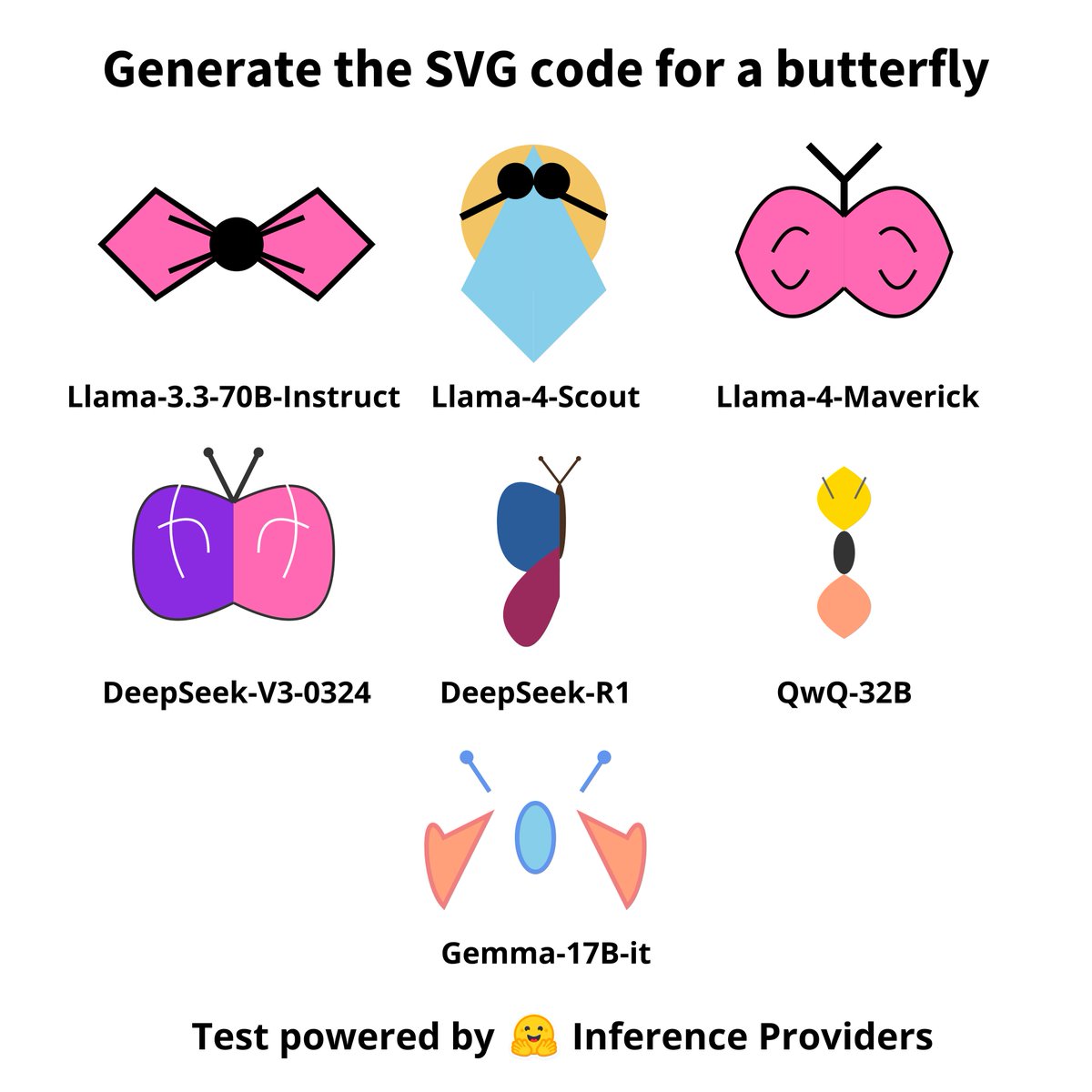

Open Source AI vibes on the Hugging Face Hub. I'm building a small vibe benchmark you can run with Hugging Face Inference Providers (link in the next message). IMO, Inference Providers will become one of the most important pieces of the stack for building with AI: No lock-in,