Apoorva

@apoorvasriniva

bits and atoms

https://www.apoorva-srinivasan.com/ 13-01-2014 03:00:13

LLMs memorize a lot of training data, but memorization is poorly understood. Where does it live inside models? How is it stored? How much is it involved in different tasks? Jack Merullo & Srihita Vatsavaya's new paper examines all of these questions using loss curvature! (1/7)

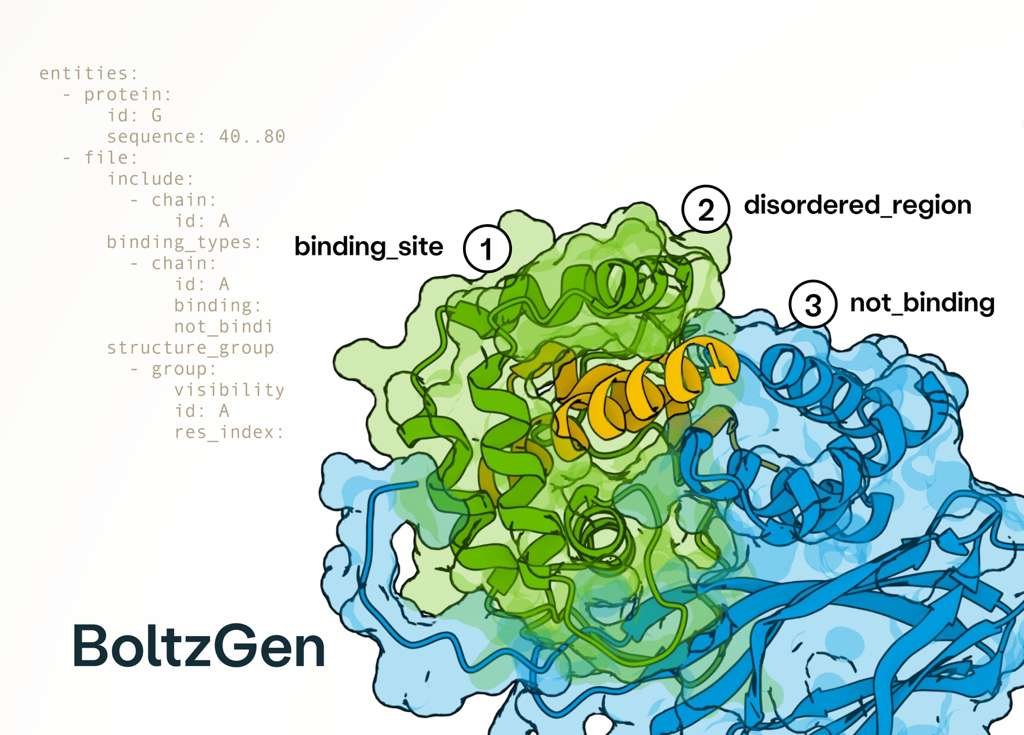

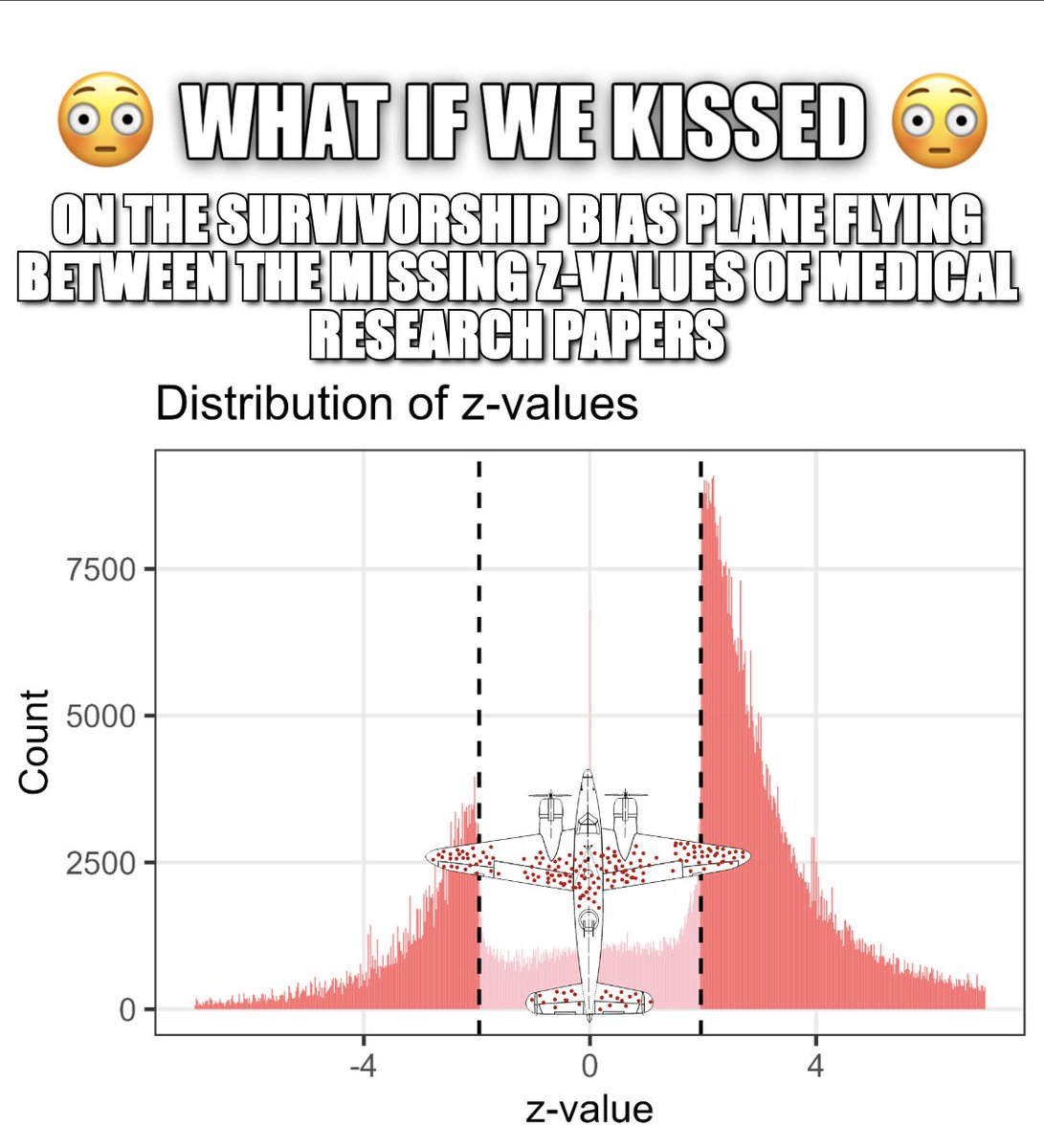

You have probably heard of AI slop in the context of short-video creation. The same principle applies to drug discovery: we absolutely do not need a deluge of new hypotheses; we need better predictive validity (as per Jack Scannell). writingruxandrabio.com/p/what-will-it…