Anjali Gupta

@anjaliwgupta

PhD @NYU_Courant, B.S. @Yale

ID: 1142867284203098114

http://www.anjaliwgupta.com

23-06-2019 18:49:28

17 Tweets

73 Followers

129 Following

I am recruiting Ph.D. students for my new lab at New York University! Please apply if you want to work with me on reasoning, reinforcement learning, understanding generalization, and AI for science. Details on my website: izmailovpavel.github.io. Please spread the word!

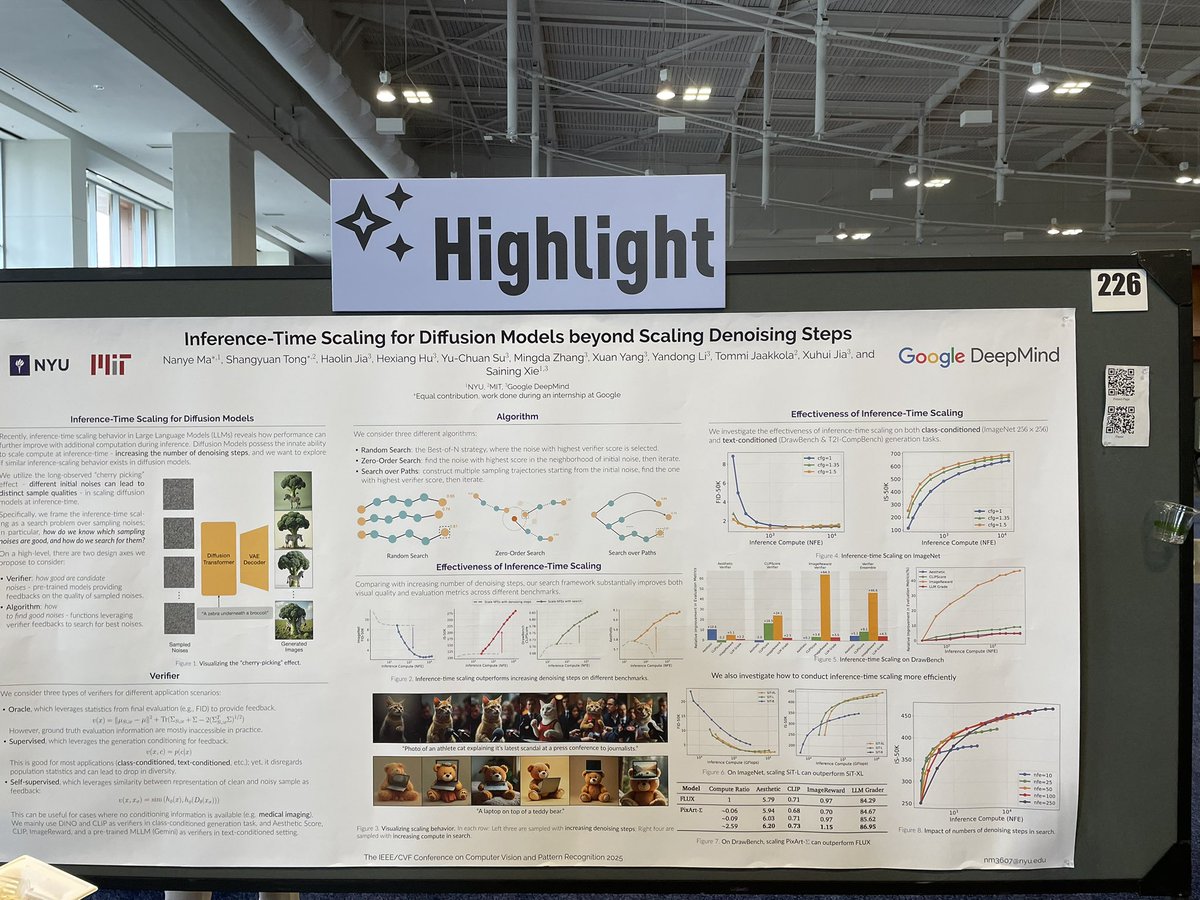

Excited to present “Thinking in Space: How MLLMs See, Remember, and Recall Spaces” at #CVPR2025 as an Oral paper alongside my amazing co-authors Shusheng Yang and Jihan Yang (supervised by Saining Xie)! We’ll be speaking at 10:45am on Saturday, June 14, in the Davidson Ballroom!