Anirudh Goyal

@anirudhg9119

Thinking about thinking.

Spent time at @Berkeley_EECS, @MPI_IS, @GoogleDeepMind.

ID: 2816636344

https://anirudh9119.github.io/ 08-10-2014 19:47:25

901 Tweets

5.5K Followers

518 Following

Work from Danilo J. Rezende, Shakir Mohamed and Daan Wierstra. proceedings.mlr.press/v32/rezende14.… Credit assignment is difficult.

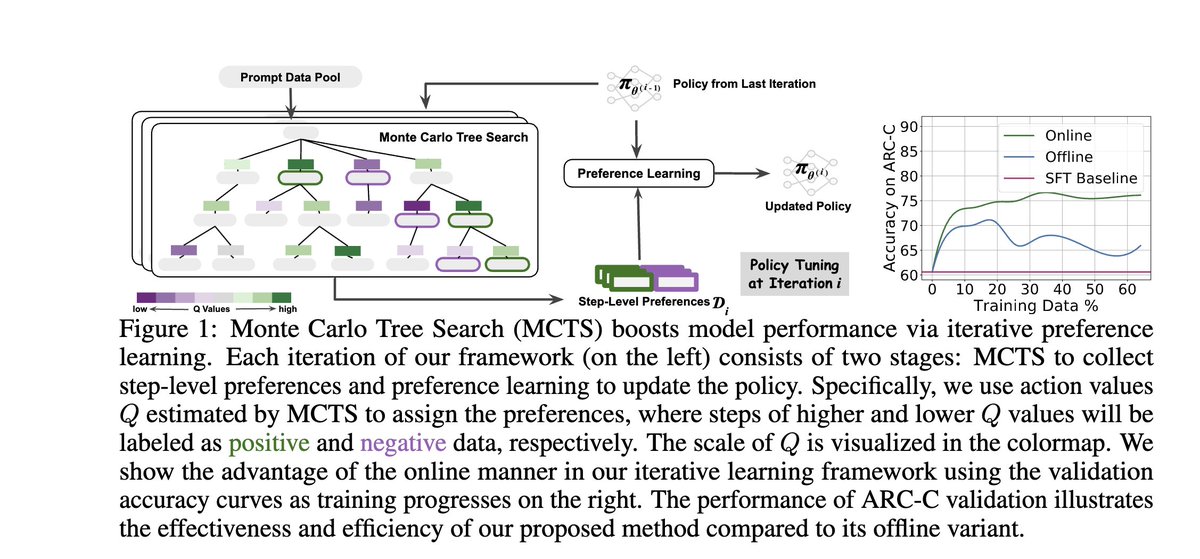

Interesting progress from Rafael Rafailov @ NeurIPS and FTP et al. following our work (applied to mathematical and commonsense reasoning): Monte Carlo Tree Search Boosts Reasoning via Iterative Preference Learning arxiv.org/abs/2405.00451 (also discussed in the Llama-3 paper, AI at Meta)