Andres Algaba

@andresalgaba1

@FWOVlaanderen Postdoctoral Researcher in AI, Coordinator in Generative AI, and Guest Professor at @VUBrussel and @DataLabBE | Member @JongeAcademie

ID: 1455086332334706688

https://www.andresalgaba.com/ 01-11-2021 08:16:52

157 Tweets

96 Followers

590 Following

Check out the talk by our PhD student Floriano Tori on “The Effectiveness of Curvature-Based Rewiring and the Role of Hyperparameters in GNNs Revisited” at 18:30 CET, streamed here: youtube.com/@learningongra… (Learning on Graphs Conference 2025)

Join our team at the Data Analytics Lab! We're excited to announce a new job opening: a Postdoctoral Fellow in Computational (Social) Science and AI. Ideal for researchers motivated to innovate and work in a highly interdisciplinary environment. Deadline Jan 19. jobs.vub.be/job/Elsene-Pos…

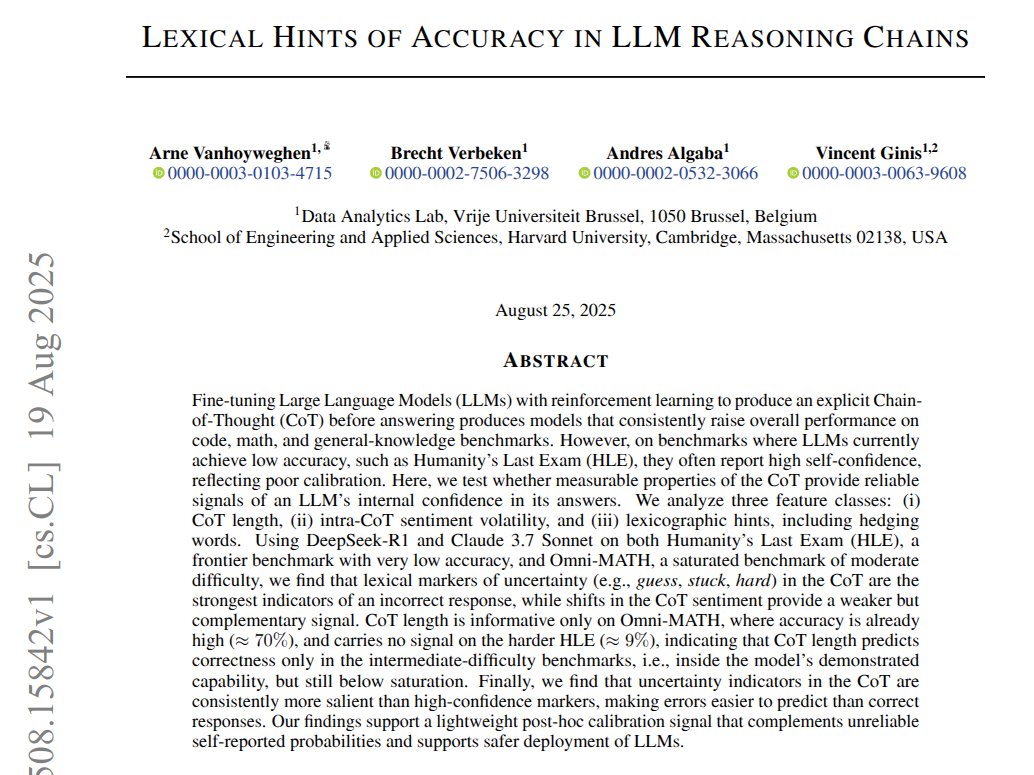

LMs are getting really good at reasoning, but the mechanisms behind it are poorly understood. In our recent paper, we investigated SOTA models and found that 'Thinking harder ≠ thinking longer'! Joint work with Andres Algaba and Vincent Ginis. Insights from our research (a thread):

Understanding how LLMs reason might be one of the most important challenges of our time. We analyzed OpenAI models to explore how reasoning length affects performance. Excited to take these small first steps with brilliant colleagues Marthe Ballon and Andres Algaba!

1/2 New blog post by Arne Vanhoyweghen about the Brussels Experiment. Put quantitatively trained people under time pressure, and force them to behave more intuitively. Result: they behave less like expected-value maximizers and more like long-term growth optimizers, EE-style.