Ammar Ahmad Awan

@ammar_awan

DeepSpeed-er @Microsoft, @MSFTDeepSpeed, Father, PhD, Wanna-be Professor, Technology Enthusiast.

ID: 372102004

http://awan-10.github.io 12-09-2011 04:03:46

475 Tweets

259 Followers

532 Following

Are you a #DeepSpeed user, fan, contributor, and/or advocate? Are you interested in meeting people behind @MSFTDeepSpeed tech? Are you interested in #AI? If yes, come and meet the team at our first in-person meetup in the Seattle area! Register here: developer.microsoft.com/reactor/events…

Thanks Stas Bekman! DeepSpeed team is hiring for various engineering and research roles! Come join us and steer the future of large scale AI training and inference.

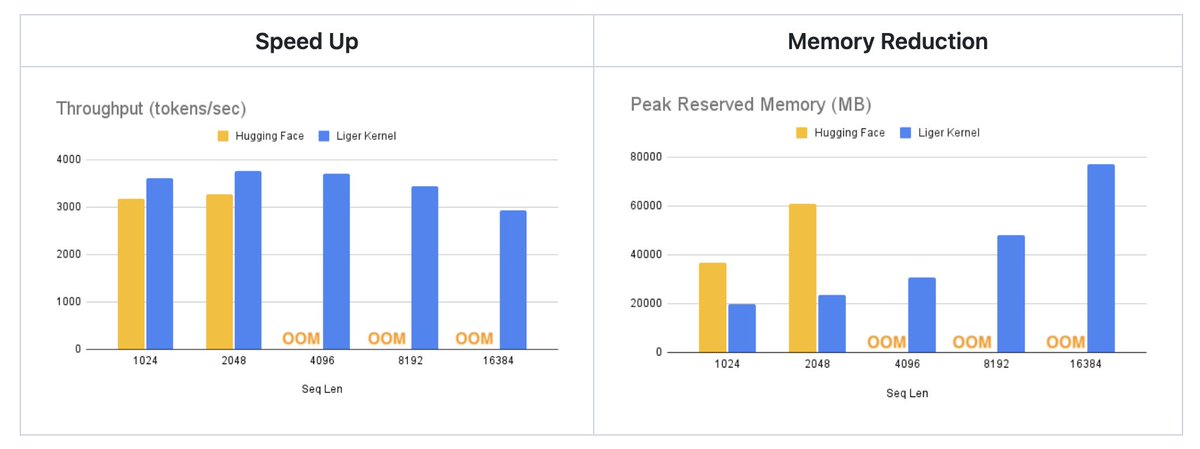

#DeepSpeed joins forces with University of Sydney to unveil an exciting tech #FP6. Just supply your FP16 models, and we deliver: 🚀 1.5x performance boost for #LLMs serving on #GPUs 🚀 Innovative (4+2)-bit system design 🚀 Quality-preserving quantization link: github.com/microsoft/Deep…

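At an event held at Ohio State University, team member Ammar Ahmad Awan gave a talk on DeepSpeed optimizations! Ohio State University is widely known for its research on distributed parallel processing, and many members of the DeepSpeed team are alumni.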

Felt great to be back at OSU. Thank you Dhabaleswar Panda, Hari Subramoni for inviting me and enabling me to share the awesome DeepSpeed work with MVAPICH team!