Amir Feder

@amir_feder

Incoming assistant prof. @CseHuji

postdoc @blei_lab // @GoogleAI

causality + language models

ID: 757255726553333760

http://www.amirfeder.com

24-07-2016 16:46:49

110 Tweets

520 Followers

189 Following

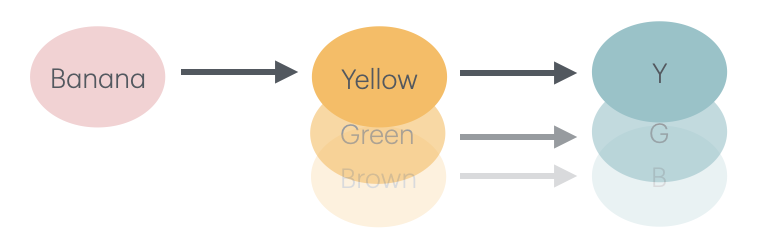

1/15 📣preprint📣 TL;DR We (Yair Gat, Amir Feder, Alex Chapanin, Amit Sharma, Roi Reichart) show (theoretically and empirically) that #LLM-generated counterfactuals produce faithful SOTA explanations of how high-level concepts impact #NLP model predictions! arxiv.org/abs/2310.00603

Attending NeurIPS Conference #NeurIPS2023 next week? Join us for an enthralling discussion with Max Katz (from Martin Heinrich’s office), Zachary Lipton, Hoda Heidari, Katherine Lee & the incredible Louise Matsakis on how researchers can better help policymakers when it comes to

Super excited to present this work as spotlight tomorrow (Wed) at #NeurIPS23 alongside Claudia Shi & Amir Feder 🗓️10:45 - 12:45, Poster: #1523

🧠🤖 How do LLMs think? What kind of thought processes can emerge from artificial intelligence? Our latest paper about multi-hop reasoning tasks reveals some new interesting insights. Check out this thread for more details! arxiv.org/abs/2406.13858 Ariel Goldstein, Amir Feder