Alaa Youssef

@alaa_youssef92

Postdoctoral fellow at Stanford AIMI. Holds a PhD in Population Health and Data Science from the University of Toronto. Research: ethics of AI, clinical safety, HCI.

ID: 328415178

03-07-2011 09:54:05

2.2K Tweets

592 Followers

1.1K Following

Sanjeev Sockalingam giving a plenary at #aadprt2020 on "Avoiding the Curriculum Carousel: Approaches to Curriculum" UIC Department of Medical Education The Wilson Centre

Big news! Our co-director Nigam Shah & teams published a groundbreaking paper on Evaluating Fair, Useful, and Reliable AI Models (FURMs) in Health Care. These open-source tools redefine AI evaluation in healthcare. Amazing work! More here: bit.ly/3Xyl6s2 #AIinHealthcare

Another update to the HELM benchmarks (MMLU, Lite, AIR-Bench) with new model versions. We do see some movement near the top even with these "minor" version updates. crfm.stanford.edu/helm/mmlu/v1.1… Claude 3.5 Sonnet (20241022), Gemini 1.5 Pro (002), Gemini 1.5 Flash (002), GPT-3.5 Turbo

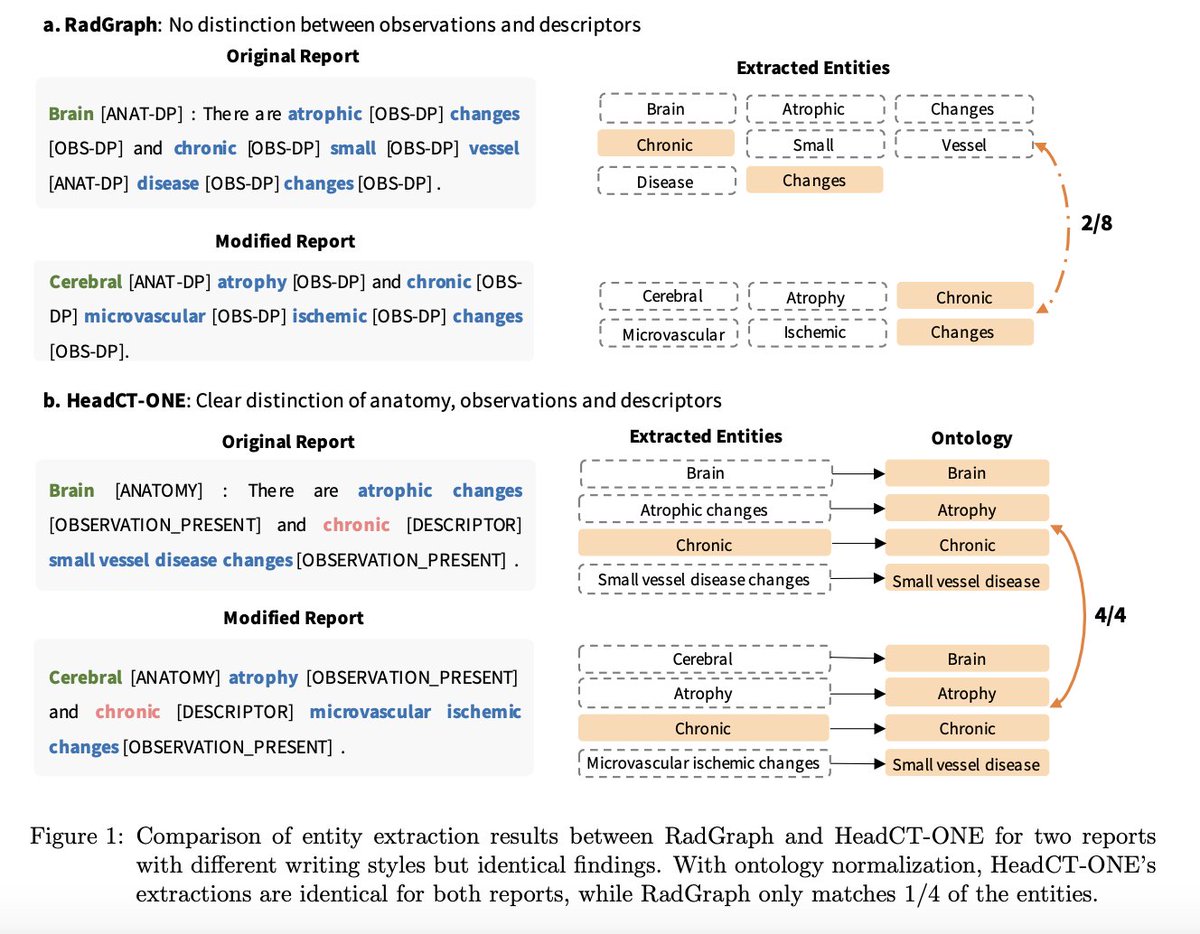

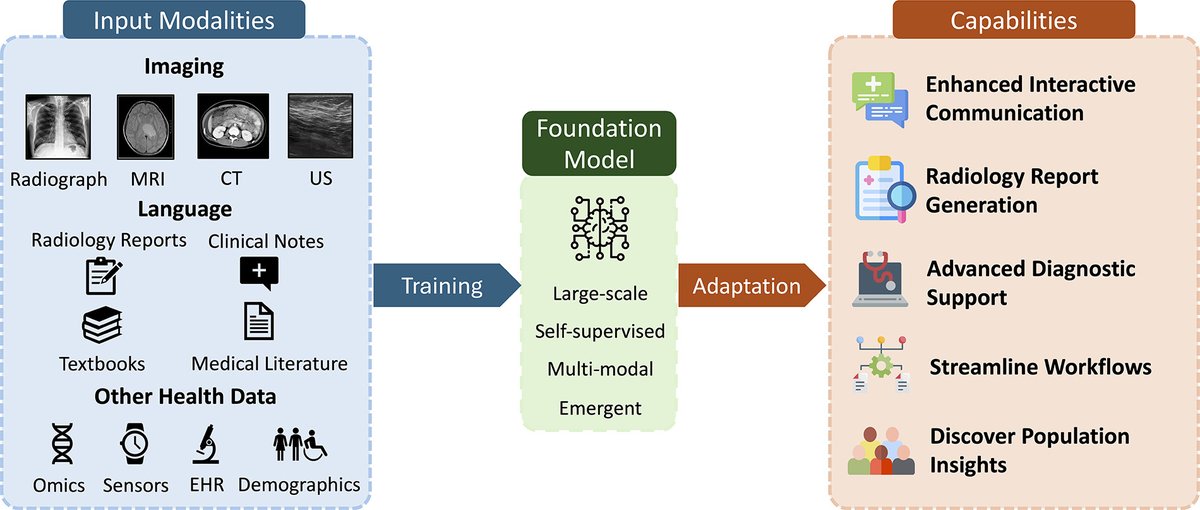

Learn about AI foundational models in radiology in this review article! Stanford AIMI Stanford Radiology Stanford Medicine Magda Paschali Zhihong Chen Maya Varma Louis Blankemeier Alaa Youssef Christian Bluethgen Curt Langlotz Akshay Chaudhari bit.ly/4jL4OGG

Agency > Intelligence I had this intuitively wrong for decades, I think due to a pervasive cultural veneration of intelligence, various entertainment/media, obsession with IQ etc. Agency is significantly more powerful and significantly more scarce. Are you hiring for agency? Are

🚀 We hosted an exclusive panel discussion on AI in Healthcare, featuring leading voices at the intersection of technology and life sciences! Moderated by Shiva Amiri, PhD, Partner and VP – Head of AI and Data Intelligence at Pivotal Life Sciences, the panel brought together:

Strong kick-off of the Inaugural AIMI AcademicxIndustry Summit 2025 on a nice fall morning. Stanford AIMI Curt Langlotz #StanfordFacultyClub